Can someone explain why I get a one-clock delay in my simulation of the following, and how I can fix it? It shouldn't be there, because I am missing a bit on the output.

    entity outBit is
        port( clk1    : in  STD_LOGIC;
              clk2    : in  STD_LOGIC;
              -- reset : in STD_LOGIC;
              int_in  : in  INTEGER;
              bit_out : out STD_LOGIC); --_VECTOR of 32
    end outBit;

That is my entity. On every rising edge of clk1 it takes an integer. Depending on the integer (1, 2, 3, 4...), it selects the corresponding line of an array. Each line is 32 bits wide. I want to output one of those 32 bits on each clk2 cycle. For example, if the clk1 period is 100, then the clk2 period is 100/32.
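
To make the clock relationship concrete, here is a minimal testbench-style sketch. It reads the 100 and 100/32 in the example as clock periods, which is an assumption, and it uses 3200 ns for clk1 only so that the division is exact. The entity name `clocks_sketch` and the constant names are hypothetical.

```vhdl
library IEEE;
use IEEE.STD_LOGIC_1164.ALL;

-- Hypothetical testbench-only entity: generates the two clocks, nothing else.
entity clocks_sketch is
end clocks_sketch;

architecture sim of clocks_sketch is
    -- Assumed value, chosen so that dividing by 32 gives a round number.
    constant CLK1_PERIOD : time := 3200 ns;
    -- clk2 runs 32 times faster than clk1, one clk2 cycle per output bit.
    constant CLK2_PERIOD : time := CLK1_PERIOD / 32;  -- 100 ns

    signal clk1 : std_logic := '0';
    signal clk2 : std_logic := '0';
begin
    -- Free-running clocks; each signal toggles every half period.
    clk1 <= not clk1 after CLK1_PERIOD / 2;
    clk2 <= not clk2 after CLK2_PERIOD / 2;
end sim;
```

With these values, 32 rising edges of clk2 fit in one clk1 period, which is what shifting out one 32-bit line per integer requires.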

    architecture Behavioral of outBit is
        signal temp : array; --the array is fixed
        signal output_bits : std_logic_vector(31 downto 0);
        signal bit_i : integer := 31; --outputting a single bit out of 32 each time
    begin
        temp(0) <= "11111111111111111111111111111111";
        temp(1) <= "11111111111111111111111111111110";
        temp(2) <= "11111111111111111111111111111100";
        -- etc

        output_bits <= temp(int_in);

        process(clk2)
            --outputting a single bit out of 32 each time
            --variable bit_i : integer := 31;
        begin
            if rising_edge(clk2) then
                bit_out <= output_bits(bit_i);
                if bit_i = 0 then
                    bit_i <= 31;
                else
                    bit_i <= bit_i - 1;
                end if;
            end if;
        end process;
    end Behavioral;

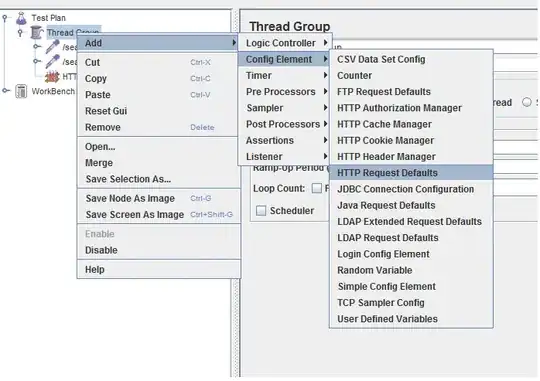

The unwanted delay is visible in the image. I would like the design to read a new line (according to the input integer) every 32 clk2 cycles, and so on.
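
One possible source of the extra cycle is that `bit_out` is assigned inside the clocked process, so it is a register and only changes after the clk2 edge that samples `output_bits(bit_i)`. The sketch below shows one way to express the intended behaviour with a combinational output, assuming an unregistered output is acceptable. It is not a confirmed fix. The names `outBit_sketch`, `line_array`, and `ROM_LINES` are hypothetical, and the range `0 to 2` only covers the three lines shown in the code above.

```vhdl
library IEEE;
use IEEE.STD_LOGIC_1164.ALL;

entity outBit_sketch is
    port( clk2    : in  STD_LOGIC;
          -- Assumption: only the three lines shown in the post exist.
          int_in  : in  INTEGER range 0 to 2;
          bit_out : out STD_LOGIC);
end outBit_sketch;

architecture Behavioral of outBit_sketch is
    -- Hypothetical type and constant; the values match temp(0..2) in the post.
    type line_array is array (0 to 2) of std_logic_vector(31 downto 0);
    constant ROM_LINES : line_array := (
        0 => x"FFFFFFFF",
        1 => x"FFFFFFFE",
        2 => x"FFFFFFFC");

    signal bit_i : integer range 0 to 31 := 31;
begin
    -- Combinational output: bit_out follows the selected bit directly,
    -- so there is no register stage between bit_i and the port.
    bit_out <= ROM_LINES(int_in)(bit_i);

    -- Only the bit index is registered; it counts 31 down to 0 and wraps.
    process(clk2)
    begin
        if rising_edge(clk2) then
            if bit_i = 0 then
                bit_i <= 31;
            else
                bit_i <= bit_i - 1;
            end if;
        end if;
    end process;
end Behavioral;
```

In this version bit 31 is present on `bit_out` before the first clk2 edge instead of after it. The trade-off is that the output is no longer registered, so it changes whenever `int_in` or `bit_i` changes.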

By the way, the first clock in the code (the second clock in the picture) is not really relevant to the question. It is only there to show when the integer arrives.