I use the gbm library in R and I would like to use all my CPU to fit a model.

    gbm.fit(x, y,
            offset = NULL,
            misc = NULL,...

Well, in principle there cannot be a parallel implementation of GBM, neither in R nor anywhere else. The reason is very simple: the boosting algorithm is by definition sequential.

Consider the following, quoted from The Elements of Statistical Learning, Ch. 10 (Boosting and Additive Trees), pp. 337-339 (emphasis mine):

A weak classifier is one whose error rate is only slightly better than random guessing. The purpose of boosting is to sequentially apply the weak classification algorithm to repeatedly modified versions of the data, thereby producing a sequence of weak classifiers Gm(x), m = 1, 2, . . . , M. The predictions from all of them are then combined through a weighted majority vote to produce the final prediction. [...] Each successive classifier is thereby forced to concentrate on those training observations that are missed by previous ones in the sequence.

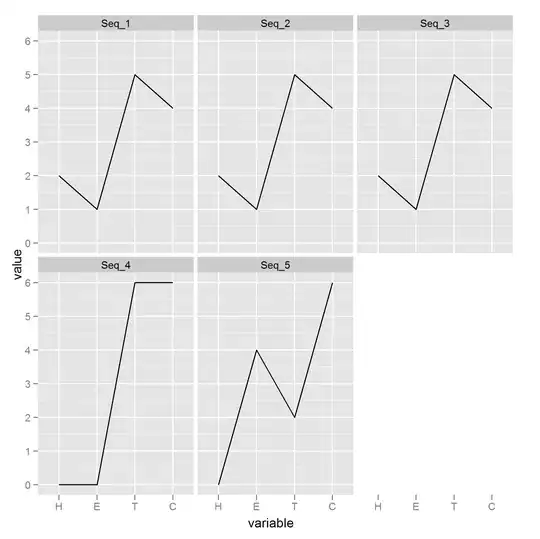

In a picture (ibid, p. 338):

In fact, this is frequently noted as a key disadvantage of GBM relative to, say, Random Forest (RF), where the trees are independent and can thus be fitted in parallel (see the bigrf R package).
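To make the sequential dependence concrete, here is a minimal base-R sketch of gradient boosting for squared-error loss with depth-1 trees (stumps). It is an illustration under my own assumptions (simulated data, the hypothetical helpers `fit_stump` and `predict_stump`, an arbitrary shrinkage of 0.1), not the code that gbm actually runs. The point is the loop: iteration `m` needs the residuals left by iterations `1..m-1`, so the iterations cannot be handed to different cores.

```r
# Simulated one-dimensional regression problem (illustrative data only).
set.seed(1)
n <- 200
x <- runif(n)
y <- sin(2 * pi * x) + rnorm(n, sd = 0.2)

# Hypothetical helper: fit a stump to (x, r) by exhaustive search over
# midpoints between sorted x values, minimising the squared error.
fit_stump <- function(x, r) {
  xs <- sort(unique(x))
  thresholds <- (head(xs, -1) + tail(xs, -1)) / 2
  sse <- vapply(thresholds, function(th) {
    left <- r[x <= th]
    right <- r[x > th]
    sum((left - mean(left))^2) + sum((right - mean(right))^2)
  }, numeric(1))
  best <- thresholds[which.min(sse)]
  list(threshold = best,
       left = mean(r[x <= best]),
       right = mean(r[x > best]))
}

# Hypothetical helper: piecewise-constant prediction of a fitted stump.
predict_stump <- function(stump, x) {
  ifelse(x <= stump$threshold, stump$left, stump$right)
}

M <- 50            # number of boosting iterations
shrinkage <- 0.1   # learning rate
pred <- rep(mean(y), n)
mse <- numeric(M)

for (m in seq_len(M)) {
  # The targets of iteration m are the residuals of the current ensemble,
  # which is why iteration m cannot start before iteration m - 1 is done.
  residuals <- y - pred
  stump <- fit_stump(x, residuals)
  pred <- pred + shrinkage * predict_stump(stump, x)
  mse[m] <- mean((y - pred)^2)
}
```

In a Random Forest, by contrast, each tree would be fitted to its own bootstrap sample of `y` with no reference to `pred`, so the body of the loop could run on separate cores.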

Hence, the best you can do, as the commenters above have pinpointed, is to use your excess CPU cores to parallelize the cross-validation process...
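As a hedged sketch of what that looks like: `gbm()` (the formula interface, not `gbm.fit()`) takes `cv.folds` and `n.cores` arguments, and the cross-validation folds are sent to different cores. The data below are simulated and the tuning values are arbitrary; the example assumes the gbm package is installed and skips the fit otherwise.

```r
# Number of cores to offer; detectCores() can return NA on some platforms.
n_cores <- max(1L, parallel::detectCores(), na.rm = TRUE)

if (requireNamespace("gbm", quietly = TRUE)) {
  library(gbm)

  # Simulated data, for illustration only.
  set.seed(1)
  df <- data.frame(x1 = runif(500), x2 = runif(500))
  df$y <- sin(2 * pi * df$x1) + df$x2 + rnorm(500, sd = 0.2)

  fit <- gbm(
    y ~ x1 + x2,
    data = df,
    distribution = "gaussian",
    n.trees = 200,
    interaction.depth = 2,
    shrinkage = 0.05,
    cv.folds = 5,                 # 5-fold cross-validation
    n.cores = min(n_cores, 5L)    # at most one core per fold is useful
  )

  # Number of trees with the lowest cross-validated error.
  best_iter <- gbm.perf(fit, method = "cv", plot.it = FALSE)
}
```

Note that only the folds run in parallel here; each individual model is still fitted sequentially, so more cores than folds bring no further speed-up.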

As far as I know, both h2o and xgboost have this.

For h2o, see e.g. this blog post of theirs from 2013, from which I quote:

At 0xdata we build state-of-the-art distributed algorithms - and recently we embarked on building GBM, and algorithm notorious for being impossible to parallelize much less distribute. We built the algorithm shown in Elements of Statistical Learning II, Trevor Hastie, Robert Tibshirani, and Jerome Friedman on page 387 (shown at the bottom of this post). Most of the algorithm is straightforward “small” math, but step 2.b.ii says “Fit a regression tree to the targets….”, i.e. fit a regression tree in the middle of the inner loop, for targets that change with each outer loop. This is where we decided to distribute/parallelize.

The platform we build on is H2O, and as talked about in the prior blog has an API focused on doing big parallel vector operations - and for GBM (and also Random Forest) we need to do big parallel tree operations. But not really any tree operation; GBM (and RF) constantly build trees - and the work is always at the leaves of a tree, and is about finding the next best split point for the subset of training data that falls into a particular leaf.

The code can be found on our git: http://0xdata.github.io/h2o/

(Edit: The repo now is at https://github.com/h2oai/.)
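From R, a minimal sketch of using it might look like the following. It assumes the h2o R package and a Java runtime are installed; `fit_h2o_gbm` is a hypothetical wrapper of my own, and `my_data` in the comment stands for whatever data frame you have. The relevant knob is `nthreads = -1` in `h2o.init()`, which asks H2O to use all available cores.

```r
# Hypothetical wrapper: `df` is a data.frame, `response` the name of its
# outcome column. Nothing is started until the function is called.
fit_h2o_gbm <- function(df, response, ntrees = 100) {
  h2o::h2o.init(nthreads = -1)             # -1: use all available cores
  hf <- h2o::as.h2o(df)                    # copy the data into the H2O cluster
  predictors <- setdiff(names(df), response)
  h2o::h2o.gbm(
    x = predictors,
    y = response,
    training_frame = hf,
    ntrees = ntrees
  )
}

# Example call (not run here; `my_data` is a placeholder):
# model <- fit_h2o_gbm(my_data, "y")
```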

The other parallel GBM implementation is, I think, in xgboost. Its Description says:

Extreme Gradient Boosting, which is an efficient implementation of gradient boosting framework. This package is its R interface. The package includes efficient linear model solver and tree learning algorithms. The package can automatically do parallel computation on a single machine which could be more than 10 times faster than existing gradient boosting packages. It supports various objective functions, including regression, classification and ranking. The package is made to be extensible, so that users are also allowed to define their own objectives easily.
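A minimal sketch of fitting with all cores, assuming the xgboost package is installed (the fit is skipped otherwise). The data are simulated and the parameter values are arbitrary; `nthread` is the parameter that controls the number of threads, and the objective name `"reg:squarederror"` assumes a reasonably recent xgboost version.

```r
# Number of threads to offer; detectCores() can return NA on some platforms.
n_threads <- max(1L, parallel::detectCores(), na.rm = TRUE)

if (requireNamespace("xgboost", quietly = TRUE)) {
  # Simulated data, for illustration only.
  set.seed(1)
  X <- matrix(runif(2000 * 10), ncol = 10)
  y <- X[, 1] + sin(2 * pi * X[, 2]) + rnorm(2000, sd = 0.2)

  dtrain <- xgboost::xgb.DMatrix(data = X, label = y)

  bst <- xgboost::xgb.train(
    params = list(
      objective = "reg:squarederror",
      nthread = n_threads,   # threads used while building each tree
      max_depth = 3,
      eta = 0.1
    ),
    data = dtrain,
    nrounds = 100,
    verbose = 0
  )
}
```

The boosting rounds themselves still run one after another; the threads are used inside the construction of each tree.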