If you use the Laplacian as it is in your code (the "real" Laplacian), then to cluster your points into two sets you will want the eigenvector corresponding to the second smallest eigenvalue.

The intuitive idea is to connect all of your points to each other with springs, where the springs are stiffer if the points are near each other, and less stiff for points far away. The eigenvectors of the Laplacian are the modes of vibration if you hit your spring network with a hammer and watch it oscillate: smaller eigenvalues correspond to lower frequency "bulk" modes, and larger eigenvalues correspond to higher frequency oscillations. You want the eigenvector corresponding to the second smallest eigenvalue, which will be like the second mode in a drum, with the positive part clustered together and the negative part clustered together.
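As a minimal sketch of that idea, here is a toy example on made-up data (eight points in two tight groups, not your rings). The data, `sigma = 0.5`, and the variable names are all my own choices for illustration. It builds the normalized Laplacian and splits the points by the sign of the eigenvector for the second smallest eigenvalue:

```matlab
% Toy data (made up for illustration): two tight groups, one point per column.
X = [0.0 0.1 0.0 0.1 1.0 1.1 1.0 1.1;
     0.0 0.0 0.1 0.1 0.0 0.0 0.1 0.1];
N = size(X, 2);
sigma = 0.5;   % kernel width, chosen arbitrarily for this toy data

% Gaussian affinities: stiff "springs" between near points, weak ones between far points.
W = zeros(N, N);
for i = 1:N
    for j = 1:N
        W(i,j) = exp(-sum((X(:,i) - X(:,j)).^2) / (2*sigma^2));
    end
end
d = sum(W, 2);
Dih = diag(1 ./ sqrt(d));              % D^(-1/2)
normL = Dih * (diag(d) - W) * Dih;     % normalized Laplacian

% Symmetrize against round-off, then sort the eigenvalues ascending to be explicit.
[V, E] = eig((normL + normL') / 2);
[evals, order] = sort(diag(E));
v2 = V(:, order(2));                   % eigenvector of the second smallest eigenvalue

labels = (v2 >= 0);                    % split by sign
disp(labels');
```

The first four points should get one label and the last four the other. Which group ends up "positive" is arbitrary, since an eigenvector is only defined up to sign.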

Now there is some confusion in the comments about whether to use the largest or smallest eigenvalues. This is because the Laplacian in the paper linked there by dave is slightly different: it is the identity minus your Laplacian. Its eigenvalues are therefore one minus yours, with the same eigenvectors, so there they want the largest ones, whereas you want the smallest. The clustering in the paper is also a bit more advanced, and better, but not as easy to implement.
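To see the largest-versus-smallest flip numerically, here is a small check on a made-up 3x3 affinity matrix. I am assuming the paper's matrix is `D^(-1/2) * W * D^(-1/2)`, which is exactly the identity minus the normalized Laplacian; the matrix values and names are just for illustration:

```matlab
% Small symmetric affinity matrix, made up for illustration.
W = [0.0 0.9 0.1;
     0.9 0.0 0.2;
     0.1 0.2 0.0];
d = sum(W, 2);
Dih = diag(1 ./ sqrt(d));             % D^(-1/2)

normL = Dih * (diag(d) - W) * Dih;    % normalized Laplacian
A     = Dih * W * Dih;                % assumed form of the paper's matrix, equal to I - normL

lamL = sort(eig((normL + normL') / 2), 'ascend');
lamA = sort(eig((A + A') / 2), 'descend');

% Each eigenvalue of A is 1 minus an eigenvalue of normL, so the ordering flips.
disp(max(abs(lamA - (1 - lamL))));    % should be on the order of machine precision
```

So the "second largest" eigenvector of one matrix is the "second smallest" eigenvector of the other.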

Here is your code, modified to work:

    load rings.mat

    [D, N] = size(X); % data stored in X, one point per column

    % initial plot of the data
    figure; hold on;
    for i=1:N,
        plot(X(1,i), X(2,i),'o');
    end

    % perform spectral clustering
    W = zeros(N,N);
    D = zeros(N,N);

    sigma = 0.3; % <--- Changed to be smaller

    for i=1:N,
        for j=1:N,
            xixj2 = (X(1,i)-X(1,j))^2 + (X(2,i)-X(2,j))^2 ;
            W(i,j) = exp( -1*xixj2 / (2*sigma^2) ) ; % compute weight here
            % if (i==j)
            %     W(i,j)=0;
            % end;
        end;
        D(i,i) = sum(W(i,:)) ;
    end;

    L = D - W ;
    normL = D^-0.5*L*D^-0.5;

    [u,s,v] = svd(normL);

    % New code below this point
    % normL is symmetric positive semidefinite, so svd returns its eigenvalues
    % in decreasing order: column end-1 of u belongs to the second smallest one.
    cluster1 = find(u(:,end-1) >= 0);
    cluster2 = find(u(:,end-1) < 0);

    figure
    plot(X(1,cluster1),X(2,cluster1),'.b')
    hold on
    plot(X(1,cluster2),X(2,cluster2),'.r')
    hold off
    title(sprintf('sigma=%g',sigma)) % %g rather than %d, since sigma is not an integer

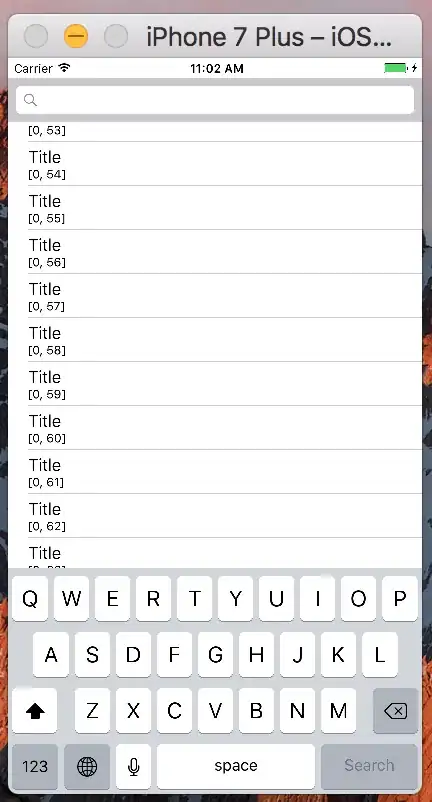

Here is the result:

Now notice that I changed sigma to be smaller - from 1.0 to 0.3. When I left it at 1.0, I got the following result:

I assume this is because, with sigma=1, the points in the inner circle were able to "pull" on the outer circle (which is about distance 1 away) strongly enough that it was more energetically favorable to split both circles in half, like a solid vibrating drum, than to separate the two circles.
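To put a rough number on that pull, here is the Gaussian weight from the code evaluated at a distance of 1 for both values of sigma. The helper name `affinity` and the gap of exactly 1.0 are my own simplifications, not measured from your data:

```matlab
% Gaussian affinity between two points a distance r apart, for a given sigma.
affinity = @(r, sigma) exp(-r.^2 ./ (2*sigma.^2));

r_gap = 1.0;                         % approximate spacing between the two circles
w_wide   = affinity(r_gap, 1.0);     % about 0.61
w_narrow = affinity(r_gap, 0.3);     % about 0.004
fprintf('sigma=1.0: %.4f   sigma=0.3: %.4f\n', w_wide, w_narrow);
```

With sigma=1 a spring across the gap is still more than half as stiff as one between coincident points, while with sigma=0.3 it is weaker by a factor of over a hundred, so the two circles are nearly decoupled.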