I am creating a virtual reality app for Android and I would like to generate a sphere in OpenGL for it. As a first step, I found this thread (Draw Sphere - order of vertices), whose first answer contains a good tutorial on how to generate the sphere geometry offline. The same answer links to sample code for a sphere (http://pastebin.com/4esQdVPP), which I used in my app, and I then successfully mapped a 2D texture onto that sphere.
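As an alternative to hard-coding a sample's vertex data, a UV sphere of adjustable resolution can also be generated directly in Java. This is a different technique from the linked tutorial, shown only as a minimal sketch under stated assumptions: the `UvSphere` class and its `stacks`/`slices` parameters are hypothetical, +Y is assumed to be the pole axis, and the seam column of vertices is duplicated so that `u` runs from 0 to 1 without wrapping around.

```java
/** Hypothetical procedural UV sphere: positions, per-vertex UVs and triangle indices. */
public final class UvSphere {
    public final float[] positions; // x, y, z per vertex
    public final float[] uvs;       // u, v per vertex
    public final short[] indices;   // 3 per triangle, meant for GL_UNSIGNED_SHORT

    public UvSphere(int stacks, int slices, float radius) {
        // One extra row (second pole) and one extra column (duplicated seam).
        int vertexCount = (stacks + 1) * (slices + 1);
        if (vertexCount > 65536) {
            throw new IllegalArgumentException("too many vertices for 16-bit indices");
        }
        positions = new float[vertexCount * 3];
        uvs = new float[vertexCount * 2];
        int p = 0;
        int t = 0;
        for (int i = 0; i <= stacks; i++) {
            double v = (double) i / stacks;      // 0 at the +Y pole, 1 at the -Y pole
            double theta = v * Math.PI;          // polar angle
            for (int j = 0; j <= slices; j++) {
                double u = (double) j / slices;  // column j == slices repeats j == 0 with u = 1
                double phi = u * 2.0 * Math.PI;  // azimuth
                positions[p++] = (float) (radius * Math.sin(theta) * Math.cos(phi));
                positions[p++] = (float) (radius * Math.cos(theta));
                positions[p++] = (float) (radius * Math.sin(theta) * Math.sin(phi));
                // Pole vertices are repeated once per column, so each has its own u.
                uvs[t++] = (float) u;
                uvs[t++] = (float) v;
            }
        }
        indices = new short[stacks * slices * 6];
        int k = 0;
        for (int i = 0; i < stacks; i++) {
            for (int j = 0; j < slices; j++) {
                int a = i * (slices + 1) + j; // vertex in row i, column j
                int b = a + slices + 1;       // vertex below it in row i + 1
                // Two triangles per quad; in the pole rows one of them has zero
                // area because two of its vertices coincide, which is harmless.
                indices[k++] = (short) a;
                indices[k++] = (short) b;
                indices[k++] = (short) (a + 1);
                indices[k++] = (short) (a + 1);
                indices[k++] = (short) b;
                indices[k++] = (short) (b + 1);
            }
        }
    }
}
```

For example, `new UvSphere(32, 64, 1f)` would produce 33 × 65 = 2145 vertices, well inside the 16-bit index range.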

However, that sample sphere has a low resolution and I would like to generate a finer one, so I followed some Blender tutorials to model the sphere, exported it as an .obj file, and then parsed the vertex coordinates and indices into Java arrays.
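The extraction step can be sketched as a tiny OBJ reader. This is a minimal sketch under several assumptions: the class name `ObjSphereParser` is hypothetical, it keeps only `v` and `f` records, it assumes the mesh was exported with triangulated faces (a Blender UV sphere is otherwise mostly quads), and it does not handle OBJ's negative (relative) indices. It converts OBJ's 1-based face indices to the 0-based indices OpenGL expects.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical minimal OBJ reader: keeps only "v" positions and triangular "f" faces. */
public final class ObjSphereParser {
    private final List<Float> positions = new ArrayList<>(); // x, y, z per vertex
    private final List<Short> indices = new ArrayList<>();   // 0-based, 3 per triangle

    public static ObjSphereParser parse(String objText) {
        ObjSphereParser result = new ObjSphereParser();
        for (String rawLine : objText.split("\\r?\\n")) {
            String[] parts = rawLine.trim().split("\\s+");
            if (parts[0].equals("v")) {
                // "v x y z": vertex position (an optional w component is ignored).
                for (int i = 1; i <= 3; i++) {
                    result.positions.add(Float.parseFloat(parts[i]));
                }
            } else if (parts[0].equals("f")) {
                if (parts.length != 4) {
                    throw new IllegalArgumentException("Only triangles are supported: " + rawLine);
                }
                for (int i = 1; i <= 3; i++) {
                    // "7", "7/3", "7//1" or "7/3/1": the position index comes before the first slash.
                    int objIndex = Integer.parseInt(parts[i].split("/")[0]);
                    // OBJ indices start at 1, OpenGL index buffers start at 0.
                    // Values up to 65535 wrap to negative shorts but keep the
                    // bit pattern that GL_UNSIGNED_SHORT expects.
                    result.indices.add((short) (objIndex - 1));
                }
            }
            // Everything else (vt, vn, o, s, comments, ...) is skipped.
        }
        return result;
    }

    public float[] positionArray() {
        float[] out = new float[positions.size()];
        for (int i = 0; i < out.length; i++) {
            out[i] = positions.get(i);
        }
        return out;
    }

    public short[] indexArray() {
        short[] out = new short[indices.size()];
        for (int i = 0; i < out.length; i++) {
            out[i] = indices.get(i);
        }
        return out;
    }
}
```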

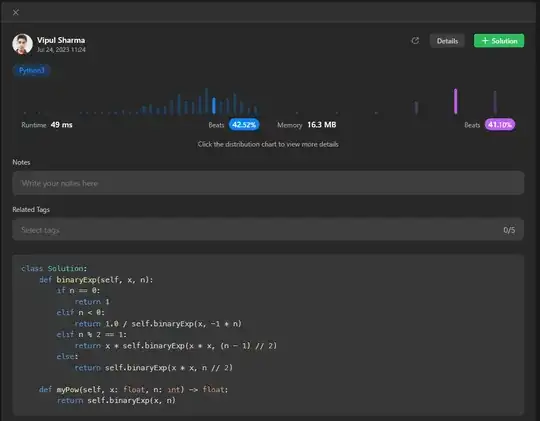

The problem is that, once the texture is applied, it looks broken at the poles of the sphere, while the rest of the sphere looks fine (please have a look at the pictures).

I don't know what I'm doing wrong, since the texture-mapping algorithm is the same for both spheres, so I suspect the problem may be in the generated vertex indices. This is the algorithm I'm using to map the texture: https://en.wikipedia.org/wiki/UV_mapping#Finding_UV_on_a_sphere
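The per-vertex mapping from that page can be sketched as below. The exact axis order and signs on the Wikipedia page have varied between revisions, so this assumes one common form, `u = 0.5 + atan2(dz, dx) / (2π)` and `v = 0.5 - asin(dy) / π`, where `d` is the unit vector from the sphere's center to the vertex and +Y is the pole axis. `SphereUv.computeUvs` is a hypothetical helper name.

```java
/** Hypothetical helper: per-vertex spherical UVs for a sphere centered at the origin. */
public final class SphereUv {
    private SphereUv() {}

    /**
     * @param positions x, y, z triples of vertices on a sphere centered at the origin
     * @return u, v pairs, one per vertex, each in [0, 1]
     */
    public static float[] computeUvs(float[] positions) {
        int vertexCount = positions.length / 3;
        float[] uvs = new float[vertexCount * 2];
        for (int i = 0; i < vertexCount; i++) {
            double x = positions[3 * i];
            double y = positions[3 * i + 1];
            double z = positions[3 * i + 2];
            double len = Math.sqrt(x * x + y * y + z * z);
            // Unit direction from the sphere center to the vertex.
            double dx = x / len;
            double dy = y / len;
            double dz = z / len;
            // Longitude -> u. At the poles dx == dz == 0, so the longitude is
            // undefined; Java's Math.atan2(0.0, 0.0) simply returns 0.0.
            double u = 0.5 + Math.atan2(dz, dx) / (2.0 * Math.PI);
            // Latitude -> v: 0 at +Y, 1 at -Y with this sign choice.
            double v = 0.5 - Math.asin(dy) / Math.PI;
            uvs[2 * i] = (float) u;
            uvs[2 * i + 1] = (float) v;
        }
        return uvs;
    }
}
```

Because `u` is computed independently for each vertex, a single pole vertex shared by many triangles receives one `u` value, which is worth keeping in mind when comparing meshes whose poles are built differently.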

This is the .obj file generated by Blender: http://pastebin.com/uP3ndM2d

From it, I extracted the indices and the coordinates. These are the vertex indices: http://pastebin.com/rt1QjcaX

And these are the vertex coordinates: http://pastebin.com/h1hGwNfx
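Once the coordinates and indices are in Java arrays, they are typically copied into direct NIO buffers before being passed to OpenGL ES (for example to `GLES20.glVertexAttribPointer`, and to `GLES20.glDrawElements` with `GL_UNSIGNED_SHORT`). A minimal sketch follows; the `GlBuffers` class and its `checkIndices` method are hypothetical, the latter being a quick sanity check that every index refers to an existing vertex.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.FloatBuffer;
import java.nio.ShortBuffer;

/** Hypothetical helpers for handing vertex and index arrays to OpenGL ES. */
public final class GlBuffers {
    private GlBuffers() {}

    /** Copies floats into a direct, native-order buffer positioned at 0. */
    public static FloatBuffer toFloatBuffer(float[] data) {
        FloatBuffer fb = ByteBuffer.allocateDirect(data.length * 4)
                .order(ByteOrder.nativeOrder())
                .asFloatBuffer();
        fb.put(data);
        fb.position(0);
        return fb;
    }

    /** Copies shorts into a direct, native-order buffer positioned at 0. */
    public static ShortBuffer toShortBuffer(short[] data) {
        ShortBuffer sb = ByteBuffer.allocateDirect(data.length * 2)
                .order(ByteOrder.nativeOrder())
                .asShortBuffer();
        sb.put(data);
        sb.position(0);
        return sb;
    }

    /** Throws if the list is not whole triangles or an index points past the last vertex. */
    public static void checkIndices(short[] indices, int vertexCount) {
        if (indices.length % 3 != 0) {
            throw new IllegalArgumentException("index count is not a multiple of 3");
        }
        for (short s : indices) {
            int index = s & 0xFFFF; // read as unsigned, like GL_UNSIGNED_SHORT
            if (index >= vertexCount) {
                throw new IllegalArgumentException("index " + index + " >= vertex count " + vertexCount);
            }
        }
    }
}
```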

Could you give me some advice? Is there anything I am doing wrong?