I know that for a 3D reconstruction you can recover everything except the scale factor from two images.

But can you calculate where a point from the first image lies in the second image? The scale factor shouldn't matter here, should it?

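    // Back-project the pixel to normalized camera coordinates (z = 1).
    sensorCheckPoint.x = (pixelCheckPoint.x - principlePoint.x) / focal;
    sensorCheckPoint.y = (pixelCheckPoint.y - principlePoint.y) / focal;
    sensorCheckPoint.z = 1;
    // Transform into the second camera's frame.
    sensorCheckPointNew = R * sensorCheckPoint + t;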

I got R and t by decomposing the essential matrix with recoverPose(), but the new point doesn't even lie inside the image.

Could someone tell me where my reasoning goes wrong? Thanks

EDIT

I only know the pixel coordinates of the check point, not its real 3D coordinates.

EDIT2

If you know R and t, but not the length of t, it should be possible to assume a depth z1 for a point M that is known in both images and then get the resulting t, right? Then it should be possible to calculate, for every point in the first image, where it lies in the second.

z2 is then tied to t. But what exactly is the dependency between z2 and t?

EDIT3

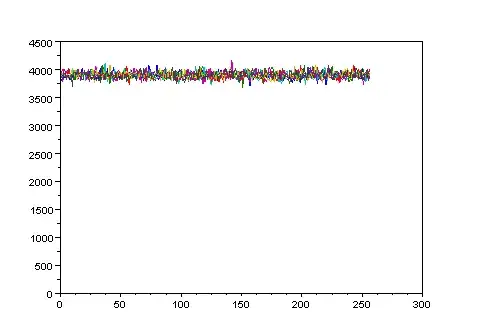

Suppose I just assume z = 1 for M1 and calculate R and t from the two images. Then I know everything marked in green. So I need to solve these linear equations to get s and, with it, the real t.

I use the first two rows to solve for the two unknowns, but the result doesn't seem right.

Is the equation incorrect?
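For comparison, a minimal sketch of solving for the two unknowns with all three rows instead of only the first two. It assumes the relation has the form z2 * m2 = z1 * R * m1 + s * t, where m1 and m2 are the normalized image points (z = 1) and t is the unit-length translation; the equation in the image may be arranged differently. The names `DepthAndScale` and `solveDepthAndScale` are hypothetical.

```cpp
#include <array>
#include <cmath>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<Vec3, 3>;

struct DepthAndScale {
    bool ok;    // false if m2 and t are (nearly) parallel
    double z2;  // depth of the point in the second camera
    double s;   // scale applied to the unit-length translation t
};

double dot(const Vec3& a, const Vec3& b) {
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Least-squares solution of z2 * m2 - s * t = z1 * R * m1 for (z2, s).
// Assumption: this is the form of the relation; z1 is an assumed depth.
DepthAndScale solveDepthAndScale(const Vec3& m1, const Vec3& m2, double z1,
                                 const Mat3& R, const Vec3& t) {
    // Right-hand side b = z1 * R * m1.
    Vec3 b{};
    for (int i = 0; i < 3; ++i) {
        for (int j = 0; j < 3; ++j) {
            b[i] += z1 * R[i][j] * m1[j];
        }
    }

    // Normal equations for the 3x2 system A = [m2 | -t]:
    // [ m2.m2  -m2.t ] [z2]   [  m2.b ]
    // [ -m2.t   t.t  ] [s ] = [ -t.b  ]
    const double a11 = dot(m2, m2);
    const double a12 = -dot(m2, t);
    const double a22 = dot(t, t);
    const double r1 = dot(m2, b);
    const double r2 = -dot(t, b);

    const double det = a11 * a22 - a12 * a12;
    if (std::fabs(det) < 1e-12) {
        return {false, 0.0, 0.0};
    }
    // Cramer's rule on the 2x2 system.
    return {true, (r1 * a22 - a12 * r2) / det, (a11 * r2 - a12 * r1) / det};
}
```

Using all three rows avoids depending on which two rows happen to be well conditioned. If the point pair satisfies the epipolar constraint exactly, the three equations are consistent and the least-squares solution matches what any two independent rows would give.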