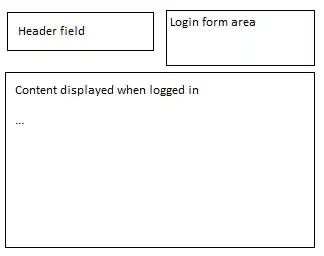

Please take a look at picture below :

My objective is for the agent to rotate and move through the environment without falling into the fire holes. I have thought of it like this:

Repeat for 1000 episodes:

An episode:

- Start traversing the environment.
- If the agent falls into a hole, send it back to the starting position.
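Here is a minimal Python sketch of this episode loop. It assumes a hypothetical 4x4 grid (`S` start, `F` fire hole, `.` floor) and a state of (row, column, heading), so the agent can both rotate and move. It also assumes a step cap `MAX_STEPS`, so that episodes without a fall still end. A random policy stands in for the agent, so nothing is learned yet. `GRID`, `step`, and `run_episodes` are illustrative names, not from any library.

```python
import random

# Hypothetical 4x4 map: S = start, F = fire hole, . = safe floor.
GRID = [
    "S...",
    ".F.F",
    "...F",
    "F...",
]
START = (0, 0, 0)  # (row, col, heading); heading 0=N, 1=E, 2=S, 3=W
MOVES = [(-1, 0), (0, 1), (1, 0), (0, -1)]  # row/col offset for each heading
ACTIONS = ["forward", "turn_left", "turn_right"]
MAX_STEPS = 50  # assumed cap so an episode cannot run forever


def step(state, action):
    """Apply one action; return (next_state, fell_in_hole)."""
    row, col, heading = state
    if action == "turn_left":
        heading = (heading - 1) % 4
    elif action == "turn_right":
        heading = (heading + 1) % 4
    else:  # forward: move one cell; bumping into a wall leaves the agent in place
        dr, dc = MOVES[heading]
        nr, nc = row + dr, col + dc
        if 0 <= nr < len(GRID) and 0 <= nc < len(GRID[0]):
            row, col = nr, nc
    return (row, col, heading), GRID[row][col] == "F"


def run_episodes(n_episodes=1000, seed=0):
    rng = random.Random(seed)
    outcomes = []
    for _ in range(n_episodes):
        state = START  # every episode begins back at the starting position
        outcome = "timeout"
        for _ in range(MAX_STEPS):
            state, fell = step(state, rng.choice(ACTIONS))
            if fell:  # falling into a hole ends the episode
                outcome = "hole"
                break
        outcomes.append(outcome)
    return outcomes


outcomes = run_episodes()
print(outcomes.count("hole"), outcomes.count("timeout"))
```

Note that "back to the starting position" simply means ending the current episode. The next iteration of the outer loop then resets `state` to `START`.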

I have read somewhere that the goal is the end point of an episode. So if the goal here is not to fall into the fire, then the opposite of the goal (i.e. falling into a fire hole) would be the end point of an episode. What would you suggest for setting the goal?
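One common way to frame a task that has no goal cell is sketched below, under stated assumptions. The hole is the only true terminal state and carries a negative reward. A time limit merely truncates the episode, and surviving a step earns a small reward. The distinction matters for the Q-learning target: a terminal state has no future value, while a truncated one still does. The constants `HOLE_REWARD`, `SAFE_STEP_REWARD`, and `MAX_STEPS`, as well as the helpers `outcome` and `td_target`, are hypothetical.

```python
# Hypothetical reward scheme for a "stay out of the fire" task with no goal cell.
HOLE_REWARD = -10.0      # assumed penalty for falling into a fire hole
SAFE_STEP_REWARD = 0.1   # assumed small reward for every step survived
MAX_STEPS = 50           # assumed time limit per episode


def outcome(fell_in_hole, steps_taken):
    """Return (reward, terminated, truncated) for one transition."""
    if fell_in_hole:
        # Falling in is a true terminal state: the episode ends here.
        return HOLE_REWARD, True, False
    # Surviving earns a small reward; hitting the limit only cuts the episode short.
    return SAFE_STEP_REWARD, False, steps_taken >= MAX_STEPS


def td_target(reward, terminated, next_q_values, gamma=0.9):
    """Q-learning target: no future value after a terminal (hole) state."""
    if terminated:
        return reward
    # A truncated (time-limit) state still has a future, so keep bootstrapping.
    return reward + gamma * max(next_q_values)


print(outcome(True, 3))    # (-10.0, True, False)
print(outcome(False, 50))  # (0.1, False, True)
```

With `SAFE_STEP_REWARD = 0`, the hole penalty alone already pushes the agent away from the fire. The small positive value just makes staying alive longer explicitly better.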

Another question: why should I set up a reward matrix at all? I have read that Q-learning is model-free! I know that in Q-learning we specify the goal, not the way to achieve it (in contrast to supervised learning).
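In tabular Q-learning tutorials that use a "reward matrix", the matrix is just a way of writing down the environment's reward function. Model-free means the agent never consults a model of transitions or rewards. It learns only from the (state, action, reward, next state) samples the environment hands back. The sketch below illustrates that split on a hypothetical 1-D corridor whose leftmost cell is a fire hole. `_REWARD`, `env_step`, `q_learning`, and all hyperparameters are illustrative assumptions.

```python
import random
from collections import defaultdict

# Hypothetical 1-D corridor: cell 0 is a fire hole, cells 1..4 are safe.
# The reward table lives inside the environment; the agent never reads it.
_REWARD = {0: -1.0}        # entering the hole costs -1; other cells give 0
N_CELLS, START, MAX_STEPS = 5, 2, 20
ACTIONS = (-1, +1)         # move left / move right


def env_step(cell, action):
    """Environment side: return (next_cell, reward, terminated)."""
    nxt = min(max(cell + action, 0), N_CELLS - 1)
    return nxt, _REWARD.get(nxt, 0.0), nxt == 0


def q_learning(episodes=1000, alpha=0.1, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = defaultdict(float)  # Q[(state, action)], initialised to 0
    for _ in range(episodes):
        cell = START
        for _ in range(MAX_STEPS):
            # Epsilon-greedy action choice from the agent's own Q-table.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(cell, a)])
            # The agent only sees the sampled outcome, never _REWARD itself.
            nxt, reward, done = env_step(cell, action)
            if done:
                target = reward
            else:
                target = reward + gamma * max(q[(nxt, a)] for a in ACTIONS)
            q[(cell, action)] += alpha * (target - q[(cell, action)])
            cell = nxt
            if done:
                break
    return q


q = q_learning()
print(q[(1, -1)], q[(1, +1)])  # stepping left from cell 1 should look worse
```

You still have to define rewards somewhere, because they are how you state the goal. Being model-free only means the learning rule does not need to know them in advance.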