I am in the process of installing ELK with Redis, and have successfully got one server/process delivering its logs through to Elasticsearch (ES). However, after updating an existing server/process to start using Logstash, I am seeing logdate come through in the form yyyy-MM-dd HH:mm:ss,SSS. Note the absence of the T between date and time. ES does not accept this.

The Log4j pattern in use on both servers is:

<PatternLayout pattern="~%d{ISO8601} [%p] [%t] [%c{1.}] %m%n"/>

The Logstash config is identical on both servers, apart from the path to the source log file:

    input {
      file {
        type => "log4j"
        path => "/var/log/restapi/*.log"
        add_field => {
          "process" => "restapi"
          "environment" => "DEVELOPMENT"
        }
        codec => multiline {
          pattern => "^~%{TIMESTAMP_ISO8601} "
          negate => "true"
          what => "previous"
        }
      }
    }

    filter {
      if [type] == "log4j" {
        grok {
          match => {
            message => "~%{TIMESTAMP_ISO8601:logdate}%{SPACE}\[%{LOGLEVEL:level}\]%{SPACE}\[%{DATA:thread}\]%{SPACE}\[%{DATA:category}\]%{SPACE}%{GREEDYDATA:messagetext}"
          }
        }
      }
    }

    output {
      redis {
        host => "sched01"
        data_type => "list"
        key => "logstash"
        codec => json
      }
      stdout { codec => rubydebug }
    }

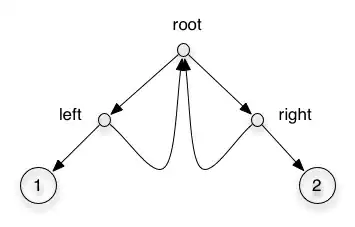

The stdout output is there purely for debugging. It shows that on the correctly working server the grok filter produces a correctly formed logdate, whereas on the newly updated server logdate comes through without the T.

At a high level, the only difference between the two servers is when they were built. I am looking for ideas on what could be causing this, or for a means of adding the T to the field.
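For illustration, this is the kind of workaround I have in mind. It is only a sketch and I have not verified it on these servers. It assumes logdate contains exactly one space (the one between date and time), and that the block is placed after the grok filter so that logdate already exists. The `mutate` filter's `gsub` option and the `date` filter are standard Logstash filters; the `date` step is optional and only there to show the normalised value being parsed.

```
filter {
  if [type] == "log4j" {
    # Replace the space between date and time with "T".
    # A logdate that already contains "T" has no space, so it is left unchanged.
    mutate {
      gsub => [ "logdate", " ", "T" ]
    }

    # Optional: parse the normalised value into @timestamp.
    # The pattern is written out explicitly because the fraction uses a comma.
    date {
      match => [ "logdate", "yyyy-MM-dd'T'HH:mm:ss,SSS" ]
    }
  }
}
```

This would only paper over the difference between the two servers; it does not explain why the same Log4j pattern produces two different timestamp formats.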