I have a set of points along X and Y where I want to create bins on X for small ranges and calculate the percetile for each bin to create a polynomial regression fit for all the bins and have a continuous percentile approach. The problem is on the edges. The number of points on the edge bins is lower and due to this problem, the percentile values are distorted.

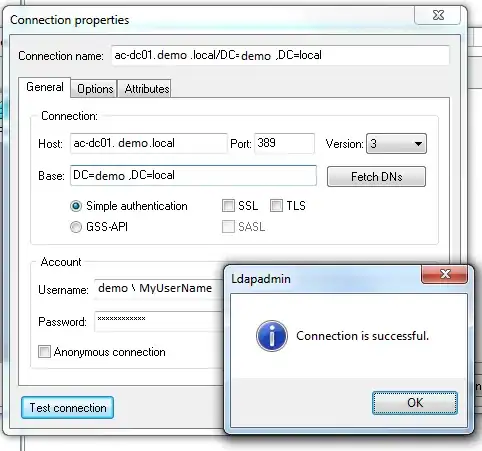

The folowing image and the code shows how everything is done. The percentile values as you can see are calculated for a maximum defined on 99.8 and a minimum defined on 4.0:

import numpy as np

import matplotlib.pyplot as plt

###############################

degree = 8

step = 0.05

numPercUp = 99.8

numPercDown = 4.0

###############################

fig = plt.figure(figsize=(8, 6))

dataX = np.random.uniform(low=0.14, high=2.06, size=(1000))

dataY = np.random.uniform(low=50, high=550, size=(1000))

plt.scatter(dataX, dataY, c='b', s=5, marker="+", label="data")

xMin = np.min(dataX)

xMax = np.max(dataX)

print 'xMin: ', xMin

print 'xMax: ', xMax

xMin = (int(xMin / step)+1) * step

xMax = (int(xMax / step)+1) * step

print 'xMin: ', xMin

print 'xMax: ', xMax

bins = np.arange(xMin, xMax, step)

inds = np.digitize(dataX, bins) # http://stackoverflow.com/questions/2275924/how-to-get-data-in-a-histogram-bin

print 'bins: ', bins, bins[0], bins[-1], len(bins)

print 'inds: ', np.min(inds), np.max(inds), np.sum(inds == 0)

# Percentile coordinates

percX = np.arange(xMin, xMax+step, step) - step/2 # All bin X position centered on the bin

percUp = np.zeros(len(bins)+1)

percDown = np.zeros(len(bins)+1)

for i in range(len(bins)+1):

dataBin = dataY[inds == i]

percUp[i] = np.percentile(dataBin, numPercUp)

percDown[i] = np.percentile(dataBin, numPercDown)

print 'percX: ', percX

print 'percUp: ', percUp

plt.plot(percX, percUp, color='green', linestyle='-', linewidth=2, marker="o", markerfacecolor='red', markersize=5, label="Up perc.")

plt.plot(percX, percDown, color='green', linestyle='-', linewidth=2, marker="o", markerfacecolor='red', markersize=5, label="Down perc.")

# Polynomial Regression

z = np.polyfit(percX, percUp, degree)

f = np.poly1d(z)

x_new = np.linspace(0.1, 2.1, 50)

y_new = f(x_new)

plt.plot(x_new, y_new, 'r--')

z = np.polyfit(percX, percDown, degree)

f = np.poly1d(z)

x_new = np.linspace(0.1, 2.1, 50)

y_new = f(x_new)

plt.plot(x_new, y_new, 'r--')

# Frame specification

plt.xlabel('X')

plt.ylabel('Y')

plt.title('Perc. Min/Max')

plt.grid()

plt.legend()

plt.axis([0, 2.2, 0, 600])

plt.xticks(np.arange(0, 2.21, 0.1))

plt.yticks(np.arange(0, 600+1, 50))

plt.show()

I find 3 possibilities, but none of them convince me at all:

- Make bigger bins instead of 0.05, but this will make to reduce accuracy.

- Reduce the degree of the polynomial fit, but on the real data (see final image) it also reduces the accuracy and does not fit to expected.

- Delete the edge values before fitting the polynomial regression (maybe this one is the most convincing option for me)

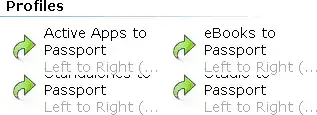

The following image is a real dataset example that I have. So as you can see, low degrre fit polynomial cannot be used, and make bigger bins want make to be much better on the right side (high x).

Which could be a good solution? Maybe forget about continuous and look for gaussian filter and then use nearest on X to define a smooth regression line?