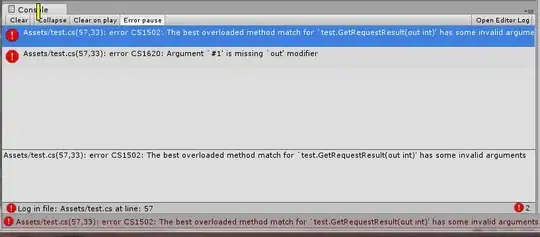

I have an nginx configuration that I believe is reasonably tuned, and I am load testing it with 1000 requests over 10 seconds, each loading the index.html page from nginx over an HTTPS connection. Latency and connect time are consistent across all 1000 samples. The response time, however, is fine for the first few samples and then becomes very bad for the remaining ones. I am attaching the samples. In the first screenshot the entries are consistent, but in the second screenshot the response time grows larger and larger as the test progresses. What could be the reason for this?
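One possible reading of the symptom, sketched in Python: if requests arrive faster than they can be served, each one waits behind the backlog and the response time climbs with the sample number, while connect time stays flat. This is a toy model with a single FIFO server, not a description of how nginx actually schedules work. The arrival spacing comes from the test (1000 requests in 10 seconds); the two service times are hypothetical values chosen only to show both regimes.

```python
def response_times(n_requests, interarrival_ms, service_ms):
    """Response time (queueing wait + service) per request for one FIFO server."""
    times = []
    server_free_at = 0.0
    for i in range(n_requests):
        arrival = i * interarrival_ms
        # A request starts when it has arrived AND the server is free.
        start = max(arrival, server_free_at)
        server_free_at = start + service_ms
        times.append(server_free_at - arrival)
    return times

# 1000 requests over 10 s -> one arrival every 10 ms (from the test description).
# The service times below are hypothetical, not measured values.
keeping_up = response_times(1000, 10.0, 8.0)       # service faster than arrivals
falling_behind = response_times(1000, 10.0, 15.0)  # service slower than arrivals

for label, series in (("8 ms service", keeping_up), ("15 ms service", falling_behind)):
    # Print a few samples from the start, middle, and end of the run.
    print(label, [round(series[i]) for i in (0, 99, 499, 999)])
```

In this model the response time stays constant as long as the service time is below the arrival spacing, and grows linearly once it is above it. Whether that is what happens here would depend on where the time is actually spent (TLS handshakes, the backend, or the load generator itself).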

Is it because of the port limit (the local port range, 32000 - 61000)? On a virtual hosted machine, I am not allowed to edit this.
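A rough sketch of the arithmetic behind the port range question, in Python. It assumes that 32000 - 61000 is the local (ephemeral) port range on whichever machine opens the connections, that the range is inclusive, and that every one of the 1000 requests uses its own connection. All three are assumptions, not measurements.

```python
# Rough arithmetic for the port-range question (assumed values, not measurements).
PORT_LOW, PORT_HIGH = 32000, 61000   # range quoted in the question
REQUESTS = 1000                      # total requests in the test
DURATION_S = 10                      # test duration in seconds

def port_usage(low, high, connections):
    """Return (available ports, fraction used) if every connection needs its own port."""
    available = high - low + 1       # assumes the range is inclusive at both ends
    return available, connections / available

available, fraction = port_usage(PORT_LOW, PORT_HIGH, REQUESTS)
rate = REQUESTS / DURATION_S

print(f"available ports: {available}")
print(f"request rate: {rate:.0f} requests/s")
print(f"worst-case share of the range in use: {fraction:.1%}")
```

Under these assumptions, even if every request held a separate port for the whole test, only a few percent of the range would be in use, so the port range alone would not obviously explain the slowdown at this load.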

Is there a limit on open file descriptors for a virtual hosted machine? You can see that I raised the open files limit (ulimit -n, as shown by ulimit -a) to around 200000. Will this work on a virtual machine?
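A limit raised with ulimit in a shell applies to that shell and the processes started from it, so the running nginx processes may have a different limit. On Linux, the effective limit of a process can be read from /proc/&lt;pid&gt;/limits. The sketch below parses the "Max open files" row; the helper names and the sample text are hypothetical and only illustrate the file's layout.

```python
import re

def max_open_files(limits_text):
    """Parse the 'Max open files' row of /proc/<pid>/limits into (soft, hard)."""
    for line in limits_text.splitlines():
        if line.startswith("Max open files"):
            # Columns are separated by runs of spaces: name, soft, hard, units.
            soft, hard = re.split(r"\s{2,}", line.strip())[1:3]
            return soft, hard   # kept as strings, since a value may be "unlimited"
    return None

def limits_for_pid(pid):
    """Read the open-files limits of a running process (Linux only)."""
    with open(f"/proc/{pid}/limits") as fh:
        return max_open_files(fh.read())

# Made-up sample in the layout of /proc/<pid>/limits, not output from my machine.
SAMPLE = """Limit                     Soft Limit           Hard Limit           Units
Max open files            1024                 4096                 files
"""
print(max_open_files(SAMPLE))
```

Calling `limits_for_pid` with the PID of an nginx worker process would show whether the 200000 setting actually reached nginx.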

Do I have to make any more adjustments to the nginx configuration to make the response time consistent?

I am testing over an HTTPS connection. Is it because each connection takes time for encryption and the system gets busy with CPU cycles? I am just trying to understand whether the problem is at the hardware or the software level.

My hardware is a virtual hosted machine. Its configuration is below:

- Architecture: x86_64

- CPU op-mode(s): 32-bit, 64-bit

- Byte Order: Little Endian

- CPU(s): 16

- Vendor ID: GenuineIntel

- CPU family: 6

- Model: 26

- Stepping: 5

- CPU MHz: 2266.802

- BogoMIPS: 4533.60

- Virtualization: VT-x

This is the nginx configuration:

    server {
        listen 80;
        server_name xxxx;

        # Strict Transport Security
        add_header Strict-Transport-Security max-age=2592000;
        rewrite ^/.*$ https://$host$request_uri? permanent;
    }

    server {
        listen 443 ssl;
        server_name xxxx;

        location / {
            try_files $uri $uri/ @backend;
        }

        ## default location ##
        location @backend {
            proxy_buffering off;
            proxy_pass http://glassfish_servers;
            proxy_http_version 1.1;
            proxy_set_header Connection "";

            # force timeouts if the backend dies
            proxy_next_upstream error timeout invalid_header http_500 http_502 http_503 http_504;
            #proxy_redirect off;

            # set headers
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto https;
        }

        ssl_certificate /etc/nginx/ssl/ssl-bundle.crt;
        ssl_certificate_key /etc/nginx/ssl/xxxx.key;
        ssl_session_cache shared:SSL:20m;
        ssl_session_timeout 10m;
        ssl_prefer_server_ciphers on;
        ssl_protocols TLSv1 TLSv1.1 TLSv1.2;
        ssl_ciphers ECDH+AESGCM:DH+AESGCM:ECDH+AES256:DH+AES256:ECDH+AES128:DH+AES:ECDH+3DES:DH+3DES:RSA+AESGCM:RSA+AES:RSA+3DES:!aNULL:!MD5:!DSS;
    }

This is the aggregate report I collected: