I am seeing strange behaviour when I launch two instances of the same kernel at the same time so that they share the GPU's resources.

I have developed a CUDA kernel that is meant to run on a single SM (streaming multiprocessor); each thread repeats the same operation many times in a loop.

The kernel is launched with only one block, so it should use only one SM.

simple.cu

#include <cuda_runtime.h>

#include <stdlib.h>

#include <stdio.h>

#include <helper_cuda.h>

using namespace std;

__global__ void increment(float *in, float *out)

{

int it=0, i = blockIdx.x * blockDim.x + threadIdx.x;

float a=0.8525852f;

for(it=0; it<99999999; it++)

out[i] += (in[i]+a)*a-(in[i]+a);

}

int main( int argc, char* argv[])

{

int i;

int nBlocks = 1;

int threadsPerBlock = 1024;

float *A, *d_A, *d_B, *B;

size_t size=1024*13;

A = (float *) malloc(size * sizeof(float));

B = (float *) malloc(size * sizeof(float));

for(i=0;i<size;i++){

A[i]=0.74;

B[i]=0.36;

}

cudaMalloc((void **) &d_A, size * sizeof(float));

cudaMalloc((void **) &d_B, size * sizeof(float));

cudaMemcpy(d_A, A, size * sizeof(float), cudaMemcpyHostToDevice);

cudaMemcpy(d_B, B, size * sizeof(float), cudaMemcpyHostToDevice);

increment<<<nBlocks,threadsPerBlock>>>(d_A, d_B);

cudaDeviceSynchronize();

cudaMemcpy(B, d_B, size * sizeof(float), cudaMemcpyDeviceToHost);

free(A);

free(B);

cudaFree(d_A);

cudaFree(d_B);

cudaDeviceReset();

return (0);

}

So if I execute the kernel:

time ./simple

I get

real 0m36.659s

user 0m4.033s

sys 0m1.124s

However, if I run two instances at the same time:

time ./simple & time ./simple

I get for each process:

real 1m12.417s

user 0m29.494s

sys 0m42.721s

real 1m12.440s

user 0m36.387s

sys 0m8.820s

As far as I know, the two executions should run concurrently and together take about as long as a single one (about 36 seconds). Instead, they take twice the base time. The GPU has 13 SMs, and each kernel creates only 1 block, which should be executed by a single SM, so there should be enough free SMs for both kernels.

Are they being executed on the same SM?

Shouldn't they be running concurrently on different SMs?
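
One way the "same SM?" question could be checked is to have the kernel report the SM it ran on. The following is only a minimal sketch, not part of simple.cu: the names `current_smid` and `record_smid` are made up for illustration, and it assumes device 0 is the GPU in question. It reads the PTX special register `%smid`, which the PTX documentation describes as volatile, so the value is a hint rather than a guarantee. It also says nothing by itself about whether kernels from two processes overlap in time.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Hypothetical helper: read the PTX special register %smid,
// i.e. the ID of the SM currently executing this thread.
__device__ unsigned int current_smid()
{
    unsigned int smid;
    asm volatile("mov.u32 %0, %%smid;" : "=r"(smid));
    return smid;
}

// Hypothetical kernel: thread 0 of each block records the SM of its block.
__global__ void record_smid(unsigned int *smids)
{
    if (threadIdx.x == 0)
        smids[blockIdx.x] = current_smid();
}

int main()
{
    // Query how many SMs the device reports (assumes device 0).
    cudaDeviceProp prop;
    if (cudaGetDeviceProperties(&prop, 0) != cudaSuccess) {
        fprintf(stderr, "no usable CUDA device\n");
        return 1;
    }
    printf("device 0 reports %d SMs\n", prop.multiProcessorCount);

    // Same launch shape as simple.cu: 1 block of 1024 threads.
    const int nBlocks = 1;
    const int threadsPerBlock = 1024;

    unsigned int h_smid[nBlocks];
    unsigned int *d_smid = NULL;
    if (cudaMalloc((void **)&d_smid, nBlocks * sizeof(unsigned int)) != cudaSuccess) {
        fprintf(stderr, "cudaMalloc failed\n");
        return 1;
    }

    record_smid<<<nBlocks, threadsPerBlock>>>(d_smid);
    cudaError_t err = cudaDeviceSynchronize();
    if (err != cudaSuccess) {
        fprintf(stderr, "kernel failed: %s\n", cudaGetErrorString(err));
        cudaFree(d_smid);
        return 1;
    }

    // Copy the recorded SM IDs back and print one line per block.
    cudaMemcpy(h_smid, d_smid, sizeof(h_smid), cudaMemcpyDeviceToHost);
    for (int b = 0; b < nBlocks; b++)
        printf("block %d ran on SM %u\n", b, h_smid[b]);

    cudaFree(d_smid);
    return 0;
}
```

Running two instances of this side by side would show which SM each process's block landed on. The same `current_smid()` call could also be added to `increment` with an extra output buffer.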

EDITED

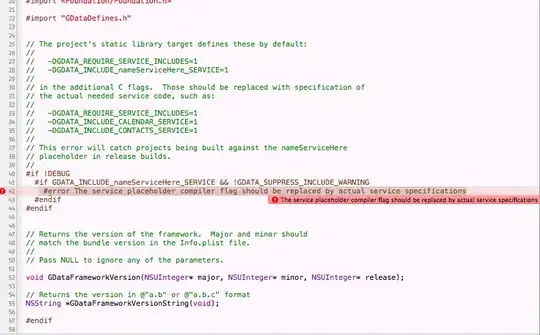

In order to make me clearer I will attach the profiles of the concurrent execution, obtained from nvprof:

Now I would like to show the same scenario, but with two instances of the matrixMul sample running concurrently:

As you can see, in the first scenario one kernel waits for the other to finish, while in the second scenario (matrixMul) kernels from both contexts run at the same time.

Thank you.