Python version: 2.7.10

My code:

# -*- coding: utf-8 -*-

from urllib2 import urlopen

from bs4 import BeautifulSoup

from collections import OrderedDict

import re

import string

def cleanInput(input):

input = re.sub('\n+', " ", input)

input = re.sub('\[[0-9]*\]', "", input)

input = re.sub(' +', " ", input)

# input = bytes(input, "UTF-8")

input = bytearray(input, "UTF-8")

input = input.decode("ascii", "ignore")

cleanInput = []

input = input.split(' ')

for item in input:

item = item.strip(string.punctuation)

if len(item) > 1 or (item.lower() == 'a' or item.lower() == 'i'):

cleanInput.append(item)

return cleanInput

def ngrams(input, n):

input = cleanInput(input)

output = []

for i in range(len(input)-n+1):

output.append(input[i:i+n])

return output

url = 'https://en.wikipedia.org/wiki/Python_(programming_language)'

html = urlopen(url)

bsObj = BeautifulSoup(html, 'lxml')

content = bsObj.find("div", {"id": "mw-content-text"}).get_text()

ngrams = ngrams(content, 2)

keys = range(len(ngrams))

ngramsDic = {}

for i in range(len(keys)):

ngramsDic[keys[i]] = ngrams[i]

# ngrams = OrderedDict(sorted(ngrams.items(), key=lambda t: t[1], reverse=True))

ngrams = OrderedDict(sorted(ngramsDic.items(), key=lambda t: t[1], reverse=True))

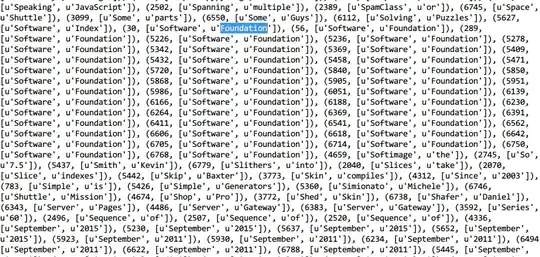

print ngrams

print "2-grams count is: " + str(len(ngrams))
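The book's code is Python 3, while the code above is Python 2.7; the commented-out `bytes(input, "UTF-8")` line only works in Python 3, which is why it was swapped for `bytearray`. For a side-by-side comparison, here is a sketch of the same `cleanInput` steps in Python 3. It is a port of the function above (parameter renamed to `text` to avoid shadowing the built-in `input`), not a copy of the book's listing, and the sample sentence is made up.

```python
import re
import string


def cleanInput(text):
    """Python 3 port of cleanInput() from the question; same steps, same order."""
    text = re.sub(r'\n+', " ", text)          # newlines -> single space
    text = re.sub(r'\[[0-9]*\]', "", text)    # drop citation markers such as [12]
    text = re.sub(r' +', " ", text)           # collapse runs of spaces
    # Encode to UTF-8, then decode as ASCII while ignoring errors:
    # every non-ASCII character is silently dropped ("Café" -> "Caf").
    text = bytes(text, "UTF-8").decode("ascii", "ignore")
    cleaned = []
    for item in text.split(' '):
        item = item.strip(string.punctuation)
        # keep words longer than one character, plus the one-letter words "a" and "I"
        if len(item) > 1 or item.lower() in ('a', 'i'):
            cleaned.append(item)
    return cleaned


print(cleanInput("Python [1] is a\n\nlanguage. Café!"))
# ['Python', 'is', 'a', 'language', 'Caf']
```

Note that the ASCII step truncates words rather than skipping them, so any word containing an accented letter changes the 2-grams it takes part in.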

I have recently been learning web scraping from the book Web Scraping with Python: Collecting Data from the Modern Web. In the Data Normalization section of Chapter 7, I first wrote the code exactly as the book shows it and got this error in the terminal:

    Traceback (most recent call last):
      File "2grams.py", line 40, in <module>
        ngrams = OrderedDict(sorted(ngrams.items(), key=lambda t: t[1], reverse=True))
    AttributeError: 'list' object has no attribute 'items'
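The traceback comes from the type that `ngrams()` in the code above returns: it builds a `list` of word lists, and `.items()` exists only on mappings such as `dict`. The commented-out sorting line therefore needs a dictionary that maps each 2-gram to its count. A minimal reproduction, using made-up counts:

```python
from collections import OrderedDict


def sort_by_count(grams):
    """The sorting line from the traceback, wrapped in a function."""
    return OrderedDict(sorted(grams.items(), key=lambda t: t[1], reverse=True))


# Shape returned by ngrams() in the question: a list of word lists
as_list = [['Python', 'Software'], ['Software', 'Foundation']]
# Shape the sorting line expects: 2-gram -> count (counts are made up)
as_dict = {'Python Software': 3, 'Software Foundation': 5}

error_message = None
try:
    sort_by_count(as_list)
except AttributeError as err:
    error_message = str(err)   # "'list' object has no attribute 'items'"

ordered = sort_by_count(as_dict)
print(list(ordered.items()))   # highest count first
# [('Software Foundation', 5), ('Python Software', 3)]
```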

So I changed the code to build a new dictionary whose values are the 2-gram lists (keyed by their index). But I got quite a different result:

Questions:

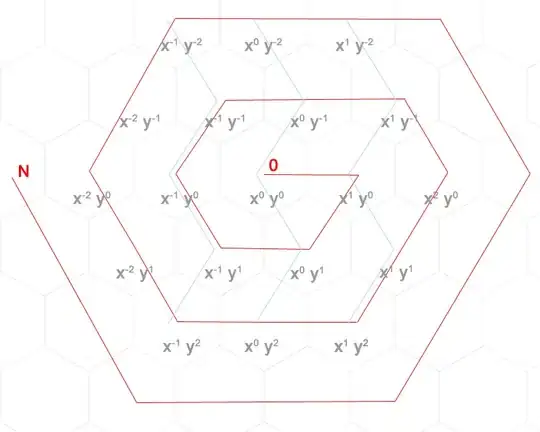

- If I want the result the book shows (sorted by value, i.e. by frequency), should I write my own code to count the occurrences of each 2-gram, or does the book's code already do that? (The book's code is Python 3.) Book sample code on GitHub.

- The frequencies in my output are quite different from the author's. For example, `[u'Software', u'Foundation']` occurred 37 times, not 40. What could cause that difference? Could it be an error in my code?
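The workaround in the question keys `ngramsDic` by position, so `t[1]` in the sort key is the word list itself: the resulting `OrderedDict` is ordered by the words (reverse lexicographic, case-sensitive), not by frequency, and every occurrence of a 2-gram stays a separate entry. A frequency-sorted result needs each 2-gram counted under a single key. The sketch below does that with `collections.Counter`; it is one possible approach, not necessarily how the book's listing does it, and the word list is made-up sample data standing in for the output of `cleanInput()`. Because the script downloads the live Wikipedia article, any counts it prints reflect the page at download time and need not match the numbers printed in the book.

```python
from collections import Counter, OrderedDict

# Made-up sample data standing in for the output of cleanInput(content)
words = ['Python', 'Software', 'Foundation', 'and', 'the',
         'Python', 'Software', 'Foundation']

# The question's workaround: position -> 2-gram list, sorted by the list itself.
positional = dict((i, words[i:i + 2]) for i in range(len(words) - 1))
by_words = OrderedDict(sorted(positional.items(), key=lambda t: t[1], reverse=True))


def count_ngrams(tokens, n):
    """Count each n-gram, keyed by its words joined with a space.

    Lists are unhashable, so they cannot be dict keys; a joined string such as
    "Software Foundation" can.
    """
    grams = (" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    return Counter(grams)


counts = count_ngrams(words, 2)
# Highest count first, mirroring the OrderedDict(sorted(...)) line in the question
by_freq = OrderedDict(sorted(counts.items(), key=lambda t: t[1], reverse=True))

print(len(by_words))                    # 7: one entry per position, repeats included
print(len(counts))                      # 5: distinct 2-grams
print(counts['Software Foundation'])    # 2: occurrences of one specific 2-gram
```

`Counter.most_common()` returns the same frequency ordering as a list of `(2-gram, count)` pairs, if an `OrderedDict` is not required.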

Book screenshot: