Here is my HTML code:

```html
<ul class="asidemenu_h1">
  <li class="top">
    <h3>Mobiles</h3>
  </li>
  <li>
    <a href="http://www.mega.pk/mobiles-apple/" title="Apple Mobiles Price">Apple</a>
  </li>
  <li>
    <a href="http://www.mega.pk/mobiles-asus/" title="Asus Mobiles Price">Asus</a>
  </li>
  <li>
    <a href="http://www.mega.pk/mobiles-black_berry/" title="Black Berry Mobiles Price">Black Berry</a>
  </li>
</ul>

<ul class="start2" id="start2ul63" style="visibility: hidden; opacity: 0;">
  <li>
    <h3>Mobiles</h3>
    <ul class="start3 bolder-star">
      <li>
        <a href="http://www.mega.pk/mobiles-apple/">Apple</a>
      </li>
      <li>
        <a href="http://www.mega.pk/mobiles-asus/">Asus</a>
      </li>
      <li>
        <a href="http://www.mega.pk/mobiles-black_berry/">Black Berry</a>
      </li>
    </ul>
  </li>
</ul>
```

One option is a regex, but it selects all six links, while I only need the first occurrence of each link.

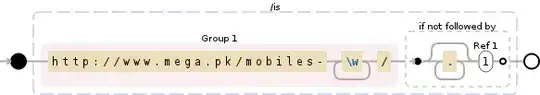

Here is my regex:

```
"/http:\/\/www\.mega\.pk\/mobiles-[A-z]+\//g"
```
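For comparison, here is a minimal Python sketch of the same pattern using the standard `re` module on a trimmed copy of the HTML above. It assumes the JavaScript `/g` flag corresponds to `re.findall`; it shows the six matches and one order-preserving way (`dict.fromkeys`) to keep only the first occurrence of each link. Side note: `[A-z]` also spans the ASCII characters between `Z` and `a` (`[ \ ] ^ _` and the backtick), which is why `black_berry` matches at all.

```python
import re

# Trimmed copy of the two menus from the question: each brand link appears twice.
HTML = """
<ul class="asidemenu_h1">
  <li><a href="http://www.mega.pk/mobiles-apple/">Apple</a></li>
  <li><a href="http://www.mega.pk/mobiles-asus/">Asus</a></li>
  <li><a href="http://www.mega.pk/mobiles-black_berry/">Black Berry</a></li>
</ul>
<ul class="start2" id="start2ul63">
  <li><ul class="start3 bolder-star">
    <li><a href="http://www.mega.pk/mobiles-apple/">Apple</a></li>
    <li><a href="http://www.mega.pk/mobiles-asus/">Asus</a></li>
    <li><a href="http://www.mega.pk/mobiles-black_berry/">Black Berry</a></li>
  </ul></li>
</ul>
"""

# Same pattern as the JavaScript regex; "/" needs no escaping in Python.
# [A-z] includes "_" (it lies between "Z" and "a"), so "black_berry" matches.
PATTERN = re.compile(r"http://www\.mega\.pk/mobiles-[A-z]+/")

all_matches = PATTERN.findall(HTML)               # every occurrence, in document order
unique_links = list(dict.fromkeys(all_matches))   # first occurrence of each link only

print(len(all_matches))  # 6
print(unique_links)
```

The deduplication relies on `dict` preserving insertion order, which is guaranteed from Python 3.7 onwards.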

Another option is XPath, but that doesn't work either. When I add `@href` at the end, the expression returns nothing, but without it I can see all the anchor tags in the Chrome console.

My XPath is:

```javascript
$x('//*[@id="start2ul63"]/li[1]/ul/li/a[contains(@href,"mobiles-")]/@href')
```
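As a sanity check on the path itself, here is a minimal sketch using Python's standard `xml.etree.ElementTree` against a trimmed copy of the hidden menu. ElementTree implements only a subset of XPath (no `contains()` and no trailing `/@href`), so the sketch selects the `<a>` elements and reads `href` in Python instead. It also assumes the snippet is well-formed XML, which holds for this sample but not for most real pages. In Scrapy itself, a path ending in `/@href` passed to `response.xpath(...).extract()` returns the attribute values as strings.

```python
import xml.etree.ElementTree as ET

# Trimmed copy of the hidden menu from the question (well-formed XML in this case).
HTML = """
<ul class="start2" id="start2ul63" style="visibility: hidden; opacity: 0;">
  <li>
    <h3>Mobiles</h3>
    <ul class="start3 bolder-star">
      <li><a href="http://www.mega.pk/mobiles-apple/">Apple</a></li>
      <li><a href="http://www.mega.pk/mobiles-asus/">Asus</a></li>
      <li><a href="http://www.mega.pk/mobiles-black_berry/">Black Berry</a></li>
    </ul>
  </li>
</ul>
"""

# Wrap in a single root element so the fragment parses as one document.
root = ET.fromstring("<root>" + HTML + "</root>")

# Same path as the $x() query, minus contains() and /@href, which ElementTree lacks.
anchors = root.findall(".//ul[@id='start2ul63']/li[1]/ul/li/a")

# Apply the contains(@href, "mobiles-") filter and read the attribute in Python.
hrefs = [a.get("href") for a in anchors if "mobiles-" in a.get("href", "")]

print(hrefs)
```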

My Python code:

```python
from scrapy.selector import Selector
from scrapy.spiders import CrawlSpider, Rule
from scrapy.linkextractors import LinkExtractor

from ScrapyScraper.items import ScrapyscraperItem


class ScrapyscraperSpider(CrawlSpider):
    name = "rs"
    allowed_domains = ["mega.pk"]

    # Brand listing pages, currently hard-coded
    start_urls = [
        "http://www.mega.pk/mobiles-apple/",
        "http://www.mega.pk/mobiles-asus/",
        "http://www.mega.pk/mobiles-black_berry/",
        "http://www.mega.pk/mobiles-gfive/",
        "http://www.mega.pk/mobiles-gright/",
        "http://www.mega.pk/mobiles-haier/",
        "http://www.mega.pk/mobiles-htc/",
        "http://www.mega.pk/mobiles-huawei/",
        "http://www.mega.pk/mobiles-lenovo/",
        "http://www.mega.pk/mobiles-lg/",
        "http://www.mega.pk/mobiles-motorola/",
        "http://www.mega.pk/mobiles-nokia/",
        "http://www.mega.pk/mobiles-oneplus/",
        "http://www.mega.pk/mobiles-oppo/",
        "http://www.mega.pk/mobiles-qmobile/",
        "http://www.mega.pk/mobiles-rivo/",
        "http://www.mega.pk/mobiles-samsung/",
        "http://www.mega.pk/mobiles-sony/",
        "http://www.mega.pk/mobiles-voice/",
    ]

    rules = (
        # Follow product-page links and pass each response to parse_item.
        # Raw string so the regex escapes are not treated as string escapes.
        Rule(LinkExtractor(allow=(r"http:\/\/www\.mega\.pk\/mobiles_products\/\d+\/[A-z-\w.]+",)),
             callback='parse_item', follow=True),
    )

    def parse_item(self, response):
        sel = Selector(response)
        item = ScrapyscraperItem()

        # Product header block
        item['Heading'] = sel.xpath('//*[@id="main1"]/div[1]/div[1]/div/div[2]/div[2]/div/div[1]/h2/span/text()').extract()
        item['Content'] = sel.xpath('//*[@id="main1"]/div[1]/div[1]/div/div[2]/div[2]/div/p/text()').extract()
        item['Price'] = sel.xpath('//*[@id="main1"]/div[1]/div[1]/div/div[2]/div[2]/div/div[2]/div[1]/div[2]/span/text()').extract()

        # Specification table (#laptop_detail): each value is read from the cell after its label cell
        item['WiFi'] = sel.xpath('//*[@id="laptop_detail"]//tr/td[contains(. ,"Wireless")]/following-sibling::td[1]/span/text()').extract()
        item['Battery'] = sel.xpath('(//*[@id="laptop_detail"]//tr/td[contains(. ,"Battery")])[1]/following-sibling::td[1]/text()').extract()
        item['Band2G'] = sel.xpath('//*[@id="laptop_detail"]//tr/td[contains(. ,"2G (GSM)")]/following-sibling::td[1]/span/text()').extract()
        item['Band3G'] = sel.xpath('//*[@id="laptop_detail"]//tr/td[contains(. ,"3G (UMTS)")]/following-sibling::td[1]/span/text()').extract()
        item['Band4G'] = sel.xpath('(//*[@id="laptop_detail"]//tr/td[contains(. ,"4G LTE")])[1]/following-sibling::td[1]/span/text()').extract()
        item['Screensize'] = sel.xpath('(//*[@id="laptop_detail"]//tr/td[contains(. ,"Screen size")])[1]/following-sibling::td[1]/text()').extract()
        item['Storage'] = sel.xpath('//*[@id="laptop_detail"]//tr/td[contains(. ,"Internal storage space")]/following-sibling::td[1]/text()').extract()
        item['CameraStatus'] = sel.xpath('//*[@id="laptop_detail"]//tr/td[contains(. ,"Built-in c")]/following-sibling::td[1]/span/text()').extract()

        # Camera details are only scraped when the phone has a built-in camera
        if item['CameraStatus'] == [u'Yes']:
            item['BCamera'] = sel.xpath('(//*[@id="laptop_detail"]//tr/td[contains(. ,"Camera Pixels")])[1]/following-sibling::td[1]/text()').extract()
            # substring(..., 1, 1) keeps only the first character of the cell, e.g. "2"
            item['NofCamera'] = sel.xpath('substring(//*[@id="laptop_detail"]//tr/td[contains(., "Number of cam")]/following-sibling::td[1], 1, 1)').extract()
            if item['NofCamera'] == [u'2']:
                item['FCamera'] = sel.xpath('//*[@id="laptop_detail"]//tr/td[contains(. ,"Resolution 2nd c")]/following-sibling::td[1]/text()').extract()
            else:
                pass
        else:
            item['CameraStatus'] = sel.xpath('//*[@id="laptop_detail"]//tr/td[contains(. ,"Built-in c")]/following-sibling::td[1]/span/text()').extract()

        return item
```
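To see which URLs the `Rule` above would follow, its `allow` pattern can be tested on its own with `re.search`, which is how Scrapy's `LinkExtractor` applies `allow` patterns to each extracted absolute URL. This is a minimal sketch: the product URL below is hypothetical and only chosen to fit the pattern's shape; the real product URL format on mega.pk is an assumption here.

```python
import re

# The allow pattern from the Rule, unchanged apart from being a raw string.
ALLOW = re.compile(r"http:\/\/www\.mega\.pk\/mobiles_products\/\d+\/[A-z-\w.]+")

urls = [
    # Hypothetical product page URL, for illustration only.
    "http://www.mega.pk/mobiles_products/12345/apple-iphone-7.html",
    # A brand listing page (one of the start_urls); it should not be matched.
    "http://www.mega.pk/mobiles-apple/",
]

# A search (not a full match) is enough for the link to be extracted.
for url in urls:
    print(url, bool(ALLOW.search(url)))
```

Because the brand listing pages do not match, they are crawled only as start URLs, and only links matching the pattern are handed to `parse_item`.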