By "working worse", I mean that the boosted model has an even higher training error than the plain SVC.

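```python
from sklearn.ensemble import AdaBoostClassifier
from sklearn.svm import SVC

# X, y: the training data shown in the image below

# Boosted SVC
# (`estimator` was called `base_estimator` before scikit-learn 1.2; the old name was removed in 1.4)
clf = AdaBoostClassifier(estimator=SVC(random_state=1), random_state=1, algorithm="SAMME", n_estimators=5)
clf.fit(X, y)

# Only SVC
clf = SVC()
clf.fit(X, y)
```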

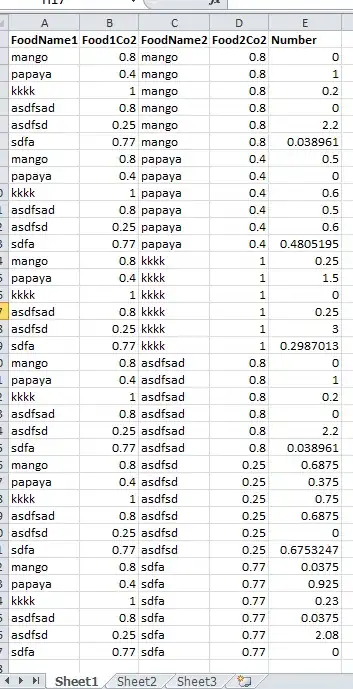

My training data looks like this:

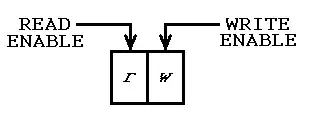

And the result of the plain SVM: