We're on Cassandra 2.0.15, and seeing huge read latencies (>60sec) coming up at regular intervals (about every 3min), from all app hosts. We measure this latency around calls to session.execute(stmt). At the same time, Cassandra traces report duration of <1s. We also ran, in a loop, a query via cqlsh from the same hosts during those peak latency times, and cqlsh always came back within 1s. What can explain this discrepancy at the Java driver level?

-- edit: in reply to comments --

Cassandra servers JVM settings: -XX:+CMSClassUnloadingEnabled -XX:+UseThreadPriorities -XX:ThreadPriorityPolicy=42 -XX:+HeapDumpOnOutOfMemoryError -Xss256k -XX:StringTableSize=1000003 -Xms32G -Xmx32G -XX:+UseG1GC -Djava.net.preferIPv4Stack=true -Dcassandra.jmx.local.port=7199 -XX:+DisableExplicitGC.

Client side GC is negligible (below). Client settings: -Xss256k -Xms4G -Xmx4G, Cassandra driver version is 2.1.7.1

Client side measuring code:

val selectServiceNames = session.prepare(QueryBuilder.select("service_name").from("service_names"))

override def run(): Unit = {

val start = System.currentTimeMillis()

try {

val resultSet = session.execute(selectServiceNames.bind())

val serviceNames = resultSet.all()

val elapsed = System.currentTimeMillis() - start

latency.add(elapsed) // emits metric to statsd

if (elapsed > 10000) {

log.info("Canary2 sensed high Cassandra latency: " + elapsed + "ms")

}

} catch {

case e: Throwable =>

log.error(e, "Canary2 select failed")

} finally {

Thread.sleep(100)

schedule()

}

}

Cluster construction code:

def createClusterBuilder(): Cluster.Builder = {

val builder = Cluster.builder()

val contactPoints = parseContactPoints()

val defaultPort = findConnectPort(contactPoints)

builder.addContactPointsWithPorts(contactPoints)

builder.withPort(defaultPort) // This ends up config.protocolOptions.port

if (cassandraUsername.isDefined && cassandraPassword.isDefined)

builder.withCredentials(cassandraUsername(), cassandraPassword())

builder.withRetryPolicy(ZipkinRetryPolicy.INSTANCE)

builder.withLoadBalancingPolicy(new TokenAwarePolicy(new LatencyAwarePolicy.Builder(new RoundRobinPolicy()).build()))

}

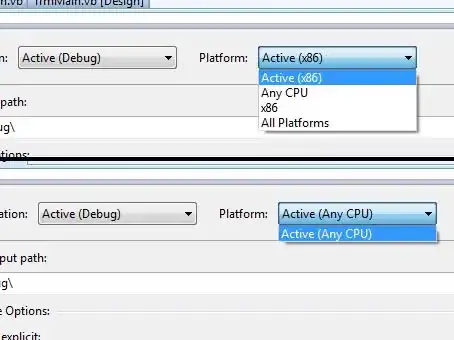

One more observation I cannot explain. I ran two threads that execute the same query in the same manner (as above) in a loop, the only difference is yellow thread sleeps 100millisec between queries, and green thread sleeps 60sec between queries. Green thread hits low latency (under 1s) much more often than the yellow one.