I have a bottleneck, which looks like this:

    void function(int type) {
        for (int i = 0; i < m; i++) {
            // do some stuff A
            switch (type) {
            case 0:
                // do some stuff 0
                break;
            [...]
            case n:
                // do some stuff n
                break;
            }
            // do some stuff B
        }
    }

Both n and m are large: m is in the millions, sometimes hundreds of millions, and n ranges from 2^7 to 2^10 (128 to 1024).

Code chunks A and B are fairly large.

I rewrote the code (via macros) as follows:

    void function(int type) {
        switch (type) {
        case 0:
            for (int i = 0; i < m; i++) {
                // do some stuff A
                // do some stuff 0
                // do some stuff B
            }
            break;
        [...]
        case n:
            for (int i = 0; i < m; i++) {
                // do some stuff A
                // do some stuff n
                // do some stuff B
            }
            break;
        }
    }
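For reference, here is a minimal sketch of what such a macro-based rewrite can look like. The `STUFF_A`/`STUFF_B` macros, the per-type bodies, the `acc` accumulator, and passing `m` as a parameter are all placeholders invented for illustration; the real chunks are much larger.

```c
/* Hypothetical stand-ins for "stuff A" and "stuff B". */
#define STUFF_A(acc, i)  ((acc) += (i))
#define STUFF_B(acc)     ((acc) ^= 1L)

/* Emits one `case` that contains a full copy of the loop,
   with BODY spliced in between A and B. */
#define TYPED_LOOP(TYPE, BODY)              \
    case TYPE:                              \
        for (int i = 0; i < m; i++) {       \
            STUFF_A(acc, i);                \
            BODY;                           \
            STUFF_B(acc);                   \
        }                                   \
        break;

long function(int type, int m) {
    long acc = 0;
    switch (type) {
        TYPED_LOOP(0, acc += 1)   /* placeholder for "stuff 0" */
        TYPED_LOOP(1, acc *= 2)   /* placeholder for "stuff 1" */
        TYPED_LOOP(2, acc -= 3)   /* placeholder for "stuff 2" */
        default:
            break;                /* unknown type: do nothing */
    }
    return acc;
}
```

Each `TYPED_LOOP` expansion duplicates A and B, which is exactly why the compiled function grows so much.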

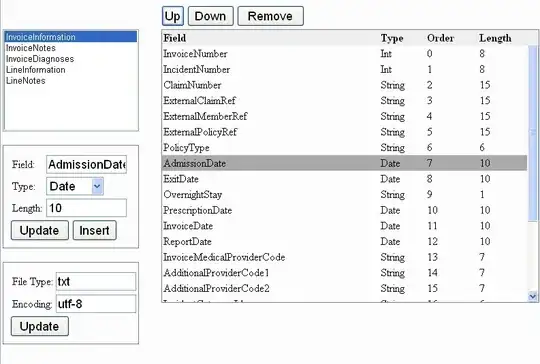

As a result, it looks like this in IDA for this function:

Is there a way to remove the switch from the loop:

- without creating a bunch of copies of the loop

- without creating a huge function with macros

- without losing performance?

One possible solution, it seems to me, would be a goto through a label variable. Something like this:

    void function(int type) {
        label* typeLabel;
        switch (type) {
        case 0:
            typeLabel = &label_0;
            break;
        [...]
        case n:
            typeLabel = &label_n;
            break;
        }
        for (int i = 0; i < m; i++) {
            // do some stuff A
            goto *typeLabel;
    back:
            // do some stuff B
        }
        goto end;
    label_0:
        // do some stuff 0
        goto back;
    [...]
    label_n:
        // do some stuff n
        goto back;
    end:
        return;
    }
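Something close to this does exist as the "labels as values" extension in GNU C, supported by GCC and Clang but not part of standard C. Below is a minimal sketch under that assumption; the three label bodies, the `acc` accumulator, and the `m` parameter are placeholders made up for illustration, and whether it actually beats the switch would have to be measured on each target.

```c
/* Requires the GNU C "labels as values" extension (GCC, Clang). */
long function(int type, int m) {
    /* Table of label addresses, indexed by type (&&label is the extension). */
    static void *const labels[] = { &&label_0, &&label_1, &&label_2 };
    long acc = 0;
    int i; /* declared at function scope because control jumps
              out of the loop body and back into it */

    if (type < 0 || type > 2)
        return acc;                 /* unknown type: do nothing */
    void *typeLabel = labels[type]; /* resolved once, before the loop */

    for (i = 0; i < m; i++) {
        acc += i;                   /* placeholder for "stuff A" */
        goto *typeLabel;            /* indirect jump, no switch in the loop */
    back:
        acc ^= 1;                   /* placeholder for "stuff B" */
    }
    return acc;

label_0:
    acc += 1;                       /* placeholder for "stuff 0" */
    goto back;
label_1:
    acc *= 2;                       /* placeholder for "stuff 1" */
    goto back;
label_2:
    acc -= 3;                       /* placeholder for "stuff 2" */
    goto back;
}
```

The dispatch is still an indirect branch on every iteration, only without the range check and table lookup that a `switch` typically performs.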

The matter is further complicated by the fact that all of this will run on different Android devices with different speeds.

The target architectures are both ARM and x86.

Perhaps this can be done with inline assembly rather than pure C?

EDIT:

I ran some tests with m = 45,734,912:

loop-within-switch: 891,713 μs

switch-within-loop: 976,085 μs

So loop-within-switch is about 9.5% faster than switch-within-loop.

For comparison, a simple implementation without the switch takes 1,746,947 μs.
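To make explicit how the 9.5% figure is derived, here is a small sketch of the arithmetic. The function names are my own; the first one measures the gain relative to the faster run, which is what gives roughly 9.5%, while the second measures the share of time saved relative to the slower run, which comes out lower (about 8.6%).

```c
/* How much faster the fast run is, in percent, relative to the fast run's time. */
double percent_faster(double slow_us, double fast_us) {
    return (slow_us - fast_us) / fast_us * 100.0;
}

/* How much time is saved, in percent, relative to the slow run's time. */
double percent_time_saved(double slow_us, double fast_us) {
    return (slow_us - fast_us) / slow_us * 100.0;
}
```

With the timings above, `percent_faster(976085.0, 891713.0)` corresponds to the loop-within-switch versus switch-within-loop comparison.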