Following my previous question titled "Random sampling from a dataset, while preserving original probability distribution", I want to sample from a set of more than 2000 numbers gathered from measurements. I want to run several tests (taking at most 10 samples in each test) while preserving the probability distribution over the whole testing process and, as far as possible, within each test. Now, instead of completely random sampling, I partition the data into 5 quantile groups and, in each of 10 tests, sample 2 data elements from each group, choosing uniformly at random from the array of data in that group.

The problem with completely random sampling was that, because the distribution of the data is long-tailed, I was getting almost the same values in each test. I want some small values, some middle values, and some large values in each test, so I sampled as described above.

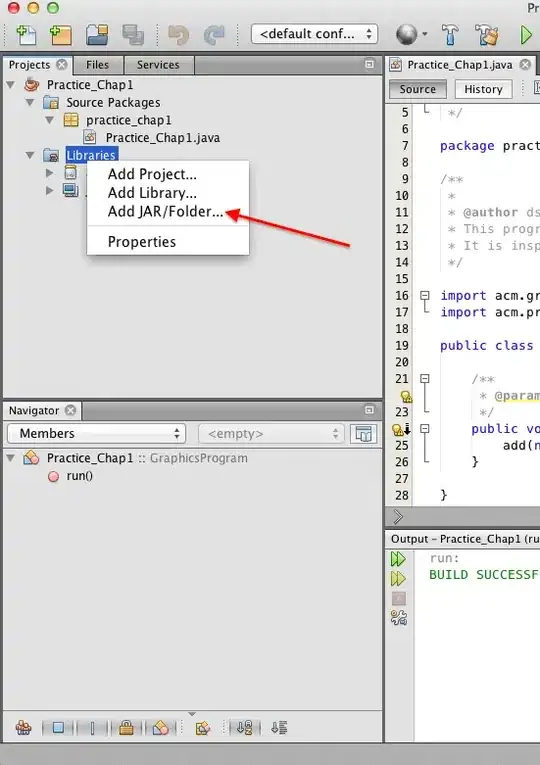

Fig 1. Density plot of ~2k elements of data.

This is the R code for calculating quantiles:

    q <- quantile(data, probs = seq(0, 1, by = 0.2))

I then partition the data into 5 quantile groups (each one stored as an array) and sample from each partition. For example, I do this in Java:

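    import java.util.Random;

    // Returns one uniformly random element from the given quantile group (0 to 4).
    public int getRandomData(int quantile) {
        // One row per quantile group.
        int[][] data = {
            {1, 2, 3, 4, 5},
            {6, 7, 8, 9, 10},
            {11, 12, 13, 14, 15},
            {16, 17, 18, 19, 20},
            {21, 22, 23, 24, 25}
        };
        int length = data[quantile].length;
        Random r = new Random();
        int randomInt = r.nextInt(length);
        return data[quantile][randomInt];
    }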
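To show one complete test (2 values from each of the 5 groups), here is a minimal sketch. It assumes the groups are equal-count slices of the sorted data and that values are drawn without replacement within a group. The class name `StratifiedSampler` and the method `sample` are made up for illustration.

```java
import java.util.Arrays;
import java.util.Random;

class StratifiedSampler {

    /**
     * Draws perBin distinct elements from each of numBins equal-count
     * bins of the sorted data (assumption: bins are split by rank).
     */
    static double[] sample(double[] data, int numBins, int perBin, Random rng) {
        double[] sorted = data.clone();
        Arrays.sort(sorted);
        int n = sorted.length;
        double[] out = new double[numBins * perBin];
        int k = 0;
        for (int b = 0; b < numBins; b++) {
            // Bin b covers indices start .. end-1; integer division spreads any remainder.
            int start = (int) ((long) b * n / numBins);
            int end = (int) ((long) (b + 1) * n / numBins);
            int size = end - start;
            if (perBin > size) {
                throw new IllegalArgumentException("bin " + b + " has fewer than " + perBin + " elements");
            }
            // Partial Fisher-Yates shuffle inside the bin: picks perBin distinct elements.
            for (int i = 0; i < perBin; i++) {
                int j = start + i + rng.nextInt(size - i);
                double tmp = sorted[start + i];
                sorted[start + i] = sorted[j];
                sorted[j] = tmp;
                out[k++] = sorted[start + i];
            }
        }
        return out;
    }

    public static void main(String[] args) {
        // Placeholder values 1..25, as in the snippet above; not real measurements.
        double[] data = new double[25];
        for (int i = 0; i < data.length; i++) {
            data[i] = i + 1;
        }
        double[] oneTest = sample(data, 5, 2, new Random());
        System.out.println(Arrays.toString(oneTest));
    }
}
```

Each call to `sample(data, 5, 2, rng)` returns the 10 values for one test, ordered from the lowest group to the highest.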

So, do the samples in each test, and across all tests overall, preserve the characteristics of the original distribution, for example the mean and variance? If not, how should I arrange the sampling to achieve this?
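To make the question concrete, here is a rough sketch of how the mean could be checked empirically: repeat the test many times and compare the average of the per-test sample means with the mean of the full data set. The data is synthetic (exponentially distributed, as a long-tailed stand-in for the real measurements), the groups are assumed to be equal-count slices of the sorted data, and values are drawn with replacement within a group. All class and method names are hypothetical. The variance could be compared in the same way.

```java
import java.util.Arrays;
import java.util.Random;

class StratifiedMeanCheck {

    static double mean(double[] x) {
        double sum = 0;
        for (double v : x) {
            sum += v;
        }
        return sum / x.length;
    }

    /** Synthetic long-tailed data (exponential, rate 1), returned sorted. */
    static double[] syntheticData(int n, Random rng) {
        double[] data = new double[n];
        for (int i = 0; i < n; i++) {
            data[i] = -Math.log(1.0 - rng.nextDouble());
        }
        Arrays.sort(data);
        return data;
    }

    /** One test: perBin values drawn uniformly (with replacement) from each equal-count bin. */
    static double[] oneTest(double[] sorted, int numBins, int perBin, Random rng) {
        int n = sorted.length;
        double[] out = new double[numBins * perBin];
        int k = 0;
        for (int b = 0; b < numBins; b++) {
            int start = b * n / numBins;
            int end = (b + 1) * n / numBins;
            for (int i = 0; i < perBin; i++) {
                out[k++] = sorted[start + rng.nextInt(end - start)];
            }
        }
        return out;
    }

    /** Average of the per-test sample means over many repeated tests. */
    static double averageSampleMean(double[] sorted, int numBins, int perBin, int tests, Random rng) {
        double total = 0;
        for (int t = 0; t < tests; t++) {
            total += mean(oneTest(sorted, numBins, perBin, rng));
        }
        return total / tests;
    }

    public static void main(String[] args) {
        Random rng = new Random();
        double[] data = syntheticData(2000, rng);
        System.out.println("mean of full data:       " + mean(data));
        System.out.println("average of sample means: " + averageSampleMean(data, 5, 2, 20000, rng));
    }
}
```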