Here's a small test program that warps an image with a transparent "border" and then alpha-blends the warped image onto a solid background:

```cpp
#include <opencv2/opencv.hpp>

int main()
{
    cv::Mat input = cv::imread("../inputData/Lenna.png"); // loaded as 3-channel BGR
    if (input.empty())
        return 1; // image could not be read

    cv::Mat transparentInput, transparentWarped;
    // add an alpha channel; every input pixel becomes fully solid (alpha = 255)
    cv::cvtColor(input, transparentInput, cv::COLOR_BGR2BGRA);
    //transparentInput = input.clone();

    // create sample transformation mat
    cv::Mat M = cv::Mat::eye(2, 3, CV_64FC1);
    // as a sample, just scale down and translate a little:
    M.at<double>(0,0) = 0.3;
    M.at<double>(0,2) = 100;
    M.at<double>(1,1) = 0.3;
    M.at<double>(1,2) = 100;

    // BORDER_TRANSPARENT does not write destination pixels that map outside the source,
    // so allocate the destination with zeros first (alpha = 0 = fully transparent);
    // otherwise those pixels keep uninitialized memory
    transparentWarped = cv::Mat::zeros(transparentInput.size(), transparentInput.type());
    // warp to same size with transparent border:
    cv::warpAffine(transparentInput, transparentWarped, M, transparentInput.size(),
                   cv::INTER_LINEAR, cv::BORDER_TRANSPARENT);

    // NOW: merge image with background, here I use the original image as background:
    cv::Mat background = input;
    // create output buffer with same size as input
    cv::Mat outputImage = input.clone();

    for (int j = 0; j < transparentWarped.rows; ++j)
    {
        for (int i = 0; i < transparentWarped.cols; ++i)
        {
            cv::Vec4b pixWarped = transparentWarped.at<cv::Vec4b>(j, i);
            cv::Vec3b pixBackground = background.at<cv::Vec3b>(j, i);
            // pixel value: 0 (0.0f) = fully transparent, 255 (1.0f) = fully solid
            float transparency = pixWarped[3] / 255.0f;
            cv::Vec3b& out = outputImage.at<cv::Vec3b>(j, i);
            for (int c = 0; c < 3; ++c)
                out[c] = cv::saturate_cast<uchar>(transparency * pixWarped[c]
                                                  + (1.0f - transparency) * pixBackground[c]);
        }
    }

    cv::imshow("warped", outputImage);
    cv::imshow("input", input);
    cv::imwrite("../outputData/TransparentWarped.png", outputImage);
    cv::waitKey(0);
    return 0;
}
```
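The per-pixel loop is ordinary "over" compositing with straight (non-premultiplied) alpha. Written out for each color channel $c$ of a pixel, with $W$ the warped BGRA pixel, $B$ the background pixel and $O$ the output pixel:

$$
\alpha = \frac{W_3}{255}, \qquad O_c = \alpha\, W_c + (1 - \alpha)\, B_c, \qquad c \in \{0, 1, 2\}
$$

So an alpha of 255 shows only the warped pixel and an alpha of 0 shows only the background; any nonzero alpha outside the warped area lets whatever the warped buffer contains there bleed into the result.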

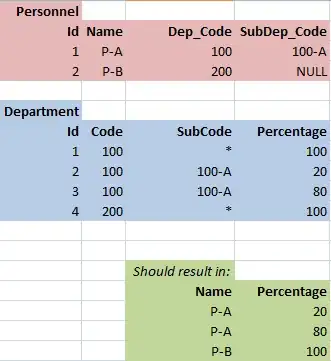

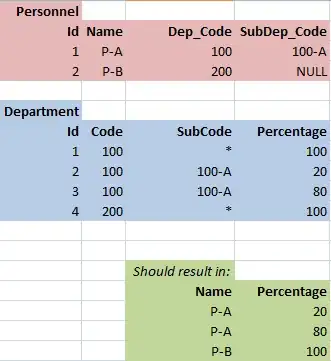

I use this as input:

and get this output:

which looks like ALPHA channel isn't set to ZERO by warpAffine but to something like 205...

But apart from that, this is how I would do it in general (unoptimized).