Let's say I have a number x which is a power of two, meaning x = 2^i for some i, so the binary representation of x has exactly one '1'. I need to find the index of that bit. For example, for x = 16 (decimal), x = 10000 (binary), the index should be 4. Is it possible to find the index in O(1) time using only bit operations?

-

`log_2(x)` (15 chars) – amit Aug 23 '15 at 08:52

-

`Is it possible to find the index in O(1) time by just using bit operation?` Well, if you assume fixed number of bits in the integer, naive approach (iterate while dividing by 2) is constant time. Otherwise, since the output is `log(n)`, and you need `log(log(n))` bits to write this number - no, it cannot be done in constant time. Maybe you meant sub logarithmic time? – amit Aug 23 '15 at 08:54

-

[Find the integer log base 2 of an integer with an 64-bit IEEE float](http://graphics.stanford.edu/~seander/bithacks.html#IntegerLogIEEE64Float) et seq. - take your pick. – Andrew Morton Aug 23 '15 at 08:55

-

The only way to do it in O(1) is with a lookup table. This might be impractical for 32-bit integers, but you can for example make a lookup table for 8-bit integers and then combine it to get the answer to larger integers. Still not O(1), but somewhat better. – Filipe Gonçalves Aug 23 '15 at 09:00

-

@Shadekur Rahman Is a lookup-table permissible? – njuffa Aug 23 '15 at 09:08

-

@FilipeGonçalves if you can do it in O(1) table lookups, then you can also do it in O(1) arithmetic operations because apparently you have O(1) bits so there isn't even an `n` to worry about – harold Aug 23 '15 at 09:10

-

hash table or look up table that you say is most probably a O(1) operation. But it is tough to implement. – Shadekur Rahman Aug 23 '15 at 09:25

5 Answers

The following is an explanation of the logic behind the use of de Bruijn sequences in the O(1) code of the answers provided by @Juan Lopes and @njuffa (great answers by the way, you should consider upvoting them).

The de Bruijn sequence

Given an alphabet K and a length n, a de Bruijn sequence is a cyclic sequence of characters from K that contains every possible string of length n over K exactly once as a contiguous substring [1]. For example, given the alphabet {a, b} and n = 3, the following is a list of all strings (permutations with repetition) of {a, b} with length 3:

[aaa, aab, aba, abb, baa, bab, bba, bbb]

To create the associated de Bruijn sequence, we construct a minimal string that contains each of these permutations exactly once, where a permutation may wrap around from the end of the string to its beginning. One such string is: babbbaaa

"babbbaaa" is a de Bruijn sequence for our alphabet K = {a, b} and n = 3. The notation for this is B(2, 3), where 2 is the size of K, also denoted k. The length of the sequence is k^n; in the previous example, k^n = 2^3 = 8.

How can we construct such a string? One method consists of building a directed graph where every node represents a permutation and has an outgoing edge for every letter in the alphabet. Following an edge from one node to the next appends the edge's letter to the right of the node's string and removes its leftmost letter. Once the graph is built, collect the edge letters along a Hamiltonian path over it to construct the sequence.

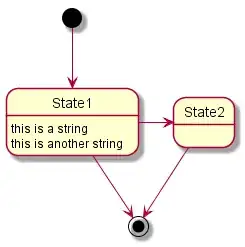

The graph for the previous example would be:

Then, take a Hamiltonian path (a path that visits each vertex exactly once):

Starting from node aaa and following each edge, we end up having:

(aaa) -> b -> a -> b -> b -> b -> a -> a -> a (aaa) = babbbaaa

We could have started from the node bbb in which case the obtained sequence would have been "aaababbb".
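A minimal sketch of the graph method above, assuming the alphabet {a, b} and a plain backtracking search for the Hamiltonian cycle. The names `extend`, `deBruijnBinary` and `isDeBruijn` are made up for illustration and do not come from the references.

```cpp
#include <cassert>
#include <set>
#include <string>
#include <vector>

// Backtracking search for a Hamiltonian cycle. Nodes are n-letter strings
// encoded as n-bit integers (bit 0 = 'a', bit 1 = 'b'). Taking the edge
// labelled `bit` from node v drops v's leftmost letter and appends `bit`.
static bool extend(unsigned v, unsigned start, unsigned n, std::size_t visitedCount,
                   std::vector<bool>& visited, std::string& edges) {
    const unsigned mask = (1u << n) - 1;
    for (unsigned bit = 0; bit < 2; ++bit) {
        const unsigned next = ((v << 1) | bit) & mask;
        const char letter = bit ? 'b' : 'a';
        if (visitedCount == visited.size()) {
            // Every node has been visited: close the cycle back to the start.
            if (next == start) { edges.push_back(letter); return true; }
            continue;
        }
        if (visited[next]) continue;
        visited[next] = true;
        edges.push_back(letter);
        if (extend(next, start, n, visitedCount + 1, visited, edges)) return true;
        edges.pop_back();          // dead end: undo and try the other letter
        visited[next] = false;
    }
    return false;
}

// Builds a binary de Bruijn sequence from the edge letters of the cycle.
std::string deBruijnBinary(unsigned n, unsigned start = 0) {
    assert(n >= 1 && n < 16 && start < (1u << n));
    std::vector<bool> visited(1u << n, false);
    visited[start] = true;
    std::string edges;
    const bool found = extend(start, start, n, 1, visited, edges);
    assert(found);
    (void)found;
    return edges;
}

// Checks that all cyclic windows of length n in seq are distinct and that
// there are 2^n of them, i.e. each n-letter string over {a, b} occurs once.
bool isDeBruijn(const std::string& seq, unsigned n) {
    if (seq.size() != (std::size_t{1} << n)) return false;
    const std::string doubled = seq + seq;   // emulates the wrap-around
    std::set<std::string> windows;
    for (std::size_t i = 0; i < seq.size(); ++i) {
        if (seq[i] != 'a' && seq[i] != 'b') return false;
        windows.insert(doubled.substr(i, n));
    }
    return windows.size() == seq.size();
}
```

Because the letters are tried in the order a, b, `deBruijnBinary(3)` should walk the same path as above and produce babbbaaa, while `deBruijnBinary(3, 7)` starts from bbb. Backtracking is only reasonable for small n; it is meant to mirror the description, not to be fast.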

Now that the de Bruijn sequence is covered, let's use it to find the number of trailing zeroes in an integer, which for a power of two is exactly the index of its single set bit.

The de Bruijn algorithm [2]

To find the number of trailing zeroes in an integer value, the first step of this algorithm is to isolate the first set bit counting from right to left. For example, given 848 (1101010000₂):

isolate rightmost bit

1101010000 ---------------------------> 0000010000

One way to do this is x & (~x + 1), which in two's complement arithmetic is the same as x & -x. You can find more information on how this expression works in the book Hacker's Delight (chapter 2, section 2-1).
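As a small illustration of the expression above, here is a sketch with a hypothetical helper name, `isolateLowestSetBit`, applied to unsigned 64-bit values.

```cpp
#include <cassert>
#include <cstdint>

// Keeps only the rightmost set bit of x (returns 0 when x is 0).
// ~x + 1 is the two's complement negation of x: it agrees with x on the
// lowest set bit and differs from x on every bit above it.
std::uint64_t isolateLowestSetBit(std::uint64_t x) {
    const std::uint64_t lowest = x & (~x + 1);
    // Sanity check: the result has at most one bit set.
    assert((lowest & (lowest - 1)) == 0);
    return lowest;
}
```

For the example value, `isolateLowestSetBit(848)` gives 16, that is 1101010000 becomes 0000010000.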

The question states that the input is a power of 2, so the rightmost bit is isolated from the beginning and no effort is required for that.

Once the bit is isolated (which turns the value into a power of two), the second step consists of using a hash table together with a hash function that maps the filtered input to its number of trailing zeroes. For example, applying the hash function h(x) to 0000010000₂ should return the index of the table entry that contains the value 4.

The algorithm proposes the use of a perfect hash function highlighting these properties:

- the hash table should be small

- the hash function should be easy to compute

- the hash function should not produce collisions, i.e., h(x) ≠ h(y) if x ≠ y

To achieve this, we can use a de Bruijn sequence over the binary alphabet K = {0, 1}, with n = 6 if we want to solve the problem for 64-bit integers (there are 64 possible powers of two in a 64-bit integer, and 6 bits are required to count them all). The length of B(2, 6) is 2^6 = 64, so we need a de Bruijn sequence of length 64 that includes all permutations (with repetition) of binary digits of length 6 (000000₂, 000001₂, ..., 111111₂).

Using a program that implements a method like the one described above, you can generate a de Bruijn sequence that meets the requirement for 64-bit integers:

0000011111101101110101011110010110011010010011100010100011000010₂ = 7EDD5E59A4E28C2₁₆

The proposed hashing function for the algorithm is:

h(x) = (x * deBruijn) >> (k^n - n)

Where x is a power of two. For every possible power of two within 64 bits, h(x) returns a distinct 6-bit permutation, and to fill the table we associate each of these permutations with the corresponding number of trailing zeroes. For example, if x is 16 = 10000₂, which has 4 trailing zeroes, we have:

h(16) = (16 * 0x7EDD5E59A4E28C2) >> 58

= 9141566557743385632 >> 58

= 31 (011111b)

So, at index 31 of our table, we store 4. As another example, take 256 = 100000000₂, which has 8 trailing zeroes:

h(256) = (256 * 0x7EDD5E59A4E28C2) >> 58

= 17137856407927308800 (due to overflow) >> 58

= 59 (111011b)

At index 59, we store 8. We repeat this process for every power of two until the table is full. Generating the table manually is unwieldy, so you should use a program like the one found here for this endeavour.
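The filling process just described can be sketched in a few lines. This is only an illustration under the assumptions stated in the text (64-bit words, the constant 0x7EDD5E59A4E28C2, a shift of 58); the names `kDeBruijn64` and `buildTable` are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

// The 64-bit de Bruijn constant quoted above.
constexpr std::uint64_t kDeBruijn64 = 0x07EDD5E59A4E28C2ull;

// For every power of two 2^i, compute h(2^i) and store i at that slot.
std::array<int, 64> buildTable() {
    std::array<int, 64> table{};
    for (int i = 0; i < 64; ++i) {
        const std::uint64_t x = std::uint64_t{1} << i;
        // Unsigned multiplication wraps modulo 2^64, which is the
        // "overflow" mentioned in the h(256) example.
        const std::size_t slot = static_cast<std::size_t>((x * kDeBruijn64) >> 58);
        assert(slot < table.size());
        table[slot] = i;
    }
    return table;
}
```

Calling `buildTable()` and reading entries 31 and 59 should give back the 4 and 8 from the two worked examples.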

At the end we'd end up with the following table:

    int table[] = {
        63,  0, 58,  1, 59, 47, 53,  2,
        60, 39, 48, 27, 54, 33, 42,  3,
        61, 51, 37, 40, 49, 18, 28, 20,
        55, 30, 34, 11, 43, 14, 22,  4,
        62, 57, 46, 52, 38, 26, 32, 41,
        50, 36, 17, 19, 29, 10, 13, 21,
        56, 45, 25, 31, 35, 16,  9, 12,
        44, 24, 15,  8, 23,  7,  6,  5
    };

And the code to calculate the required value:

    // Assumes that x is a power of two
    int numTrailingZeroes(uint64_t x) {
        // The top 6 bits of the product select the table slot
        return table[(x * 0x7EDD5E59A4E28C2ull) >> 58];
    }

What guarantees that we are not missing an index for a power of two due to a collision?

The hash function extracts, for every power of two, one of the 6-bit permutations contained in the de Bruijn sequence. Multiplying the sequence by x is just a left shift (multiplying a number by 2^i is the same as shifting it left by i bits), and the subsequent right shift by 58 isolates the top 6 bits of the result. Each power of two therefore selects a different 6-bit window of the sequence, and because every 6-bit permutation occurs exactly once in a de Bruijn sequence, no two different values of x collide (the third property of the desired hash function).
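The argument can also be checked by brute force. The sketch below, with a hypothetical function name `hashIsCollisionFree`, confirms for each of the 64 powers of two that the multiplication really is a left shift and that the resulting 6-bit values are all different.

```cpp
#include <cassert>
#include <cstdint>
#include <set>

// Returns true when h(2^i) = (2^i * deBruijn) >> 58 is distinct for all
// i in 0..63, i.e. the hash is perfect over the 64-bit powers of two.
bool hashIsCollisionFree(std::uint64_t deBruijn) {
    std::set<unsigned> seen;
    for (int i = 0; i < 64; ++i) {
        const std::uint64_t x = std::uint64_t{1} << i;
        const unsigned viaMultiply = static_cast<unsigned>((x * deBruijn) >> 58);
        const unsigned viaShift = static_cast<unsigned>((deBruijn << i) >> 58);
        assert(viaMultiply == viaShift);   // multiplying by 2^i is a shift by i
        seen.insert(viaMultiply);
    }
    return seen.size() == 64;
}
```

For the last few windows the left shift brings in zeros from the right instead of wrapping around. That still works for this constant because the sequence itself begins with a run of zeros, so the shifted-in zeros match the bits that a cyclic wrap would have supplied.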

References:

[1] De Bruijn Sequences - http://datagenetics.com/blog/october22013/index.html

[2] Using de Bruijn Sequences to Index a 1 in a Computer Word - http://supertech.csail.mit.edu/papers/debruijn.pdf

[3] The Magic Bitscan - http://elemarjr.net/2011/01/09/the-magic-bitscan/

-

Best answer IMO. I just find it extra funny because the reference [3] is from a friend of mine, who was inspired to write about it because we were writing a chess engine and I wrote this code for LSB using De Bruijn sequences. :) – Juan Lopes Aug 24 '15 at 12:33

-

@JuanLopes Thanks! Yes, I saw the reference to _\@juanlopes_ in his article saying that 7EDD5E59A4E28C2 was your favorite number XD, excellent article btw, although it was kind of hard for me to read due to my poor Portuguese skills and some google translator issues. – higuaro Aug 24 '15 at 17:24

It depends on your definitions. First let's assume there are n bits, because if we assume there is a constant number of bits then everything we could possibly do with them is going to take constant time so we could not compare anything.

First, let's take the widest possible view of "bitwise operations" - they operate on bits but not necessarily pointwise, and furthermore we'll count operations but not include the complexity of the operations themselves.

M. L. Fredman and D. E. Willard showed that there is an algorithm of O(1) operations to compute lambda(x) (the floor of the base-2 logarithm of x, so the index of the highest set bit). It contains quite some multiplications though, so calling it bitwise is a bit funny.

On the other hand, there is an obvious algorithm of O(log n) operations that uses no multiplications: just binary search for the bit. But we can do better: Gerth Brodal showed that it can be done in O(log log n) operations (and none of them are multiplications).
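To make the binary search concrete, here is a rough sketch for a fixed 64-bit word (so it illustrates the idea rather than the asymptotics). It is not Brodal's method, and the name `floorLog2` is just chosen for this example.

```cpp
#include <cassert>
#include <cstdint>

// Binary search for the highest set bit using only shifts, comparisons
// and additions: lambda(x) = floor(log2(x)) for x != 0.
int floorLog2(std::uint64_t x) {
    assert(x != 0);
    int result = 0;
    for (int shift = 32; shift > 0; shift >>= 1) {
        if ((x >> shift) != 0) {   // a set bit lies in the upper part
            x >>= shift;           // continue the search there
            result += shift;
        }
    }
    return result;
}
```

The loop halves the search window each time, so a 64-bit word takes six steps (shifts of 32, 16, 8, 4, 2 and 1).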

All the algorithms I referenced are in The Art of Computer Programming 4A, bitwise tricks and techniques.

None of these really qualify as finding that 1 in constant time, and it should be obvious that you can't do that. The other answers don't qualify either, despite their claims. They're cool, but they're designed for a specific constant number of bits, so any naive algorithm would also be O(1) (trivially, because there is no n to depend on). In a comment, OP said something that implied he actually wanted that, but it doesn't technically answer the question.

-

Standard bitwise operations scale linearly with the arbitrary number of bits for fixed word size (i.e. linear number of words) as do additions, subtractions, etc. but arbitrary multiplication is more like O(n log n) for arbitrary n bits with fixed word size. And even then it's pretty messy, using fast FFT etc to avoid O(n^2). So it would seem that for arbitrary n, the solution you alluded to that uses O(log log n) bit operations without multiplications is the way to go. Can you give a brief overview or a hint as to how that method works? And does it truly scale to #bits larger than word size? – user2566092 Aug 23 '15 at 17:54

-

@user2566092 it doesn't really matter, it's also the count of (unlimited width) bitwise operations for that one. If you go back to a RAM machine then none of this matters and the fastest way to find that 1 is to just scan for it linearly. – harold Aug 23 '15 at 18:05

-

If word size is considered variable (with fixed cost per bitwise word operation) then things become at least slightly more interesting than just the most basic "linear scan." E.g. for word size w and n words you could do O(n + log w), which is better than naive O(n + w). Or, using methods you've alluded to, even O(n + log log w) may be possible. I'll try to find this Brodal method you mentioned. Any brief insight you could give on it would be appreciated though, sometimes a bit of intuition from someone who already understands something can go a long way. =) – user2566092 Aug 23 '15 at 18:17

-

@user2566092 basically it computes the high bits of the result by throwing out some powers of two (this is like a binary search but over `lambda(x)`), then it abuses the width of the word to compute the rest in parallel (the word is now much wider than the value being worked with thanks to the first step, so it can fit it lots of times in the word for parallel work) – harold Aug 23 '15 at 18:50

-

@user2566092 the parallel part that gets the other bits also sort of looks like a binary search, but it doesn't need to branch or anything like that: every "lane" computes a bit of the result independently, using the fact that if `x & 0b01010101 == 0` then `lambda(x)` must be odd, and so on – harold Aug 23 '15 at 19:06

-

Could you code the Gerth Brodal algo that produces answers using only bitwise operations for all `n < 21` in at most three operations? – גלעד ברקן Aug 23 '15 at 19:14

-

@גלעד ברקן it is, that just means it scales well asymptotically, it makes no promise about the exact number of operations – harold Aug 23 '15 at 19:22

-

But what use is an upper bound (of log log n) if it is not actually an upper bound? – גלעד ברקן Aug 23 '15 at 19:29

-

Let us [continue this discussion in chat](http://chat.stackoverflow.com/rooms/87713/discussion-between-harold-and--). – harold Aug 23 '15 at 19:30

The specifications of the problem are not entirely clear to me. For example, which operations count as "bit operations" and how many bits make up the input in question? Many processors have a "count leading zeros" or "find first bit" instruction, exposed via an intrinsic, that basically provides the desired result directly.
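As an illustration of the intrinsic route, the sketch below assumes a GCC or Clang toolchain, where `__builtin_ctzll` counts trailing zero bits, and falls back to a plain loop elsewhere. The wrapper name `bitIndex` is hypothetical; with C++20, `std::countr_zero` from `<bit>` would be a portable alternative.

```cpp
#include <cassert>
#include <cstdint>

// Index of the single set bit of x, assuming x is a power of two.
int bitIndex(std::uint64_t x) {
    assert(x != 0 && (x & (x - 1)) == 0);   // exactly one bit set
#if defined(__GNUC__) || defined(__clang__)
    // Compiler builtin; typically compiles to a single bit-scan instruction.
    return __builtin_ctzll(x);
#else
    // Portable fallback: shift right until the bit falls off.
    int index = 0;
    while ((x >>= 1) != 0) ++index;
    return index;
#endif
}
```

The builtin is undefined for an input of 0, which is why the precondition is asserted first.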

Below I show how to find the bit position in a 32-bit integer based on a De Bruijn sequence.

    #include <stdio.h>
    #include <stdlib.h>
    #include <stdint.h>

    /* find position of 1-bit in a = 2^n, 0 <= n <= 31 */
    int bit_pos (uint32_t a)
    {
        static int tab[32] = { 0,  1,  2,  6,  3, 11,  7, 16,
                               4, 14, 12, 21,  8, 23, 17, 26,
                              31,  5, 10, 15, 13, 20, 22, 25,
                              30,  9, 19, 24, 29, 18, 28, 27};

        /* the shift-and-add sum below multiplies a by 0x04653adf without
           using a multiply instruction; it is equivalent to: */
        // return tab [0x04653adf * a >> 27];
        return tab [(a + (a << 1) + (a << 2) + (a << 3) + (a << 4) + (a << 6) +
                     (a << 7) + (a << 9) + (a << 11) + (a << 12) + (a << 13) +
                     (a << 16) + (a << 18) + (a << 21) + (a << 22) + (a << 26))
                    >> 27];
    }

    /* test bit_pos() for every power of two that fits in 32 bits */
    int main (void)
    {
        uint32_t nbr;
        int pos = 0;
        while (pos < 32) {
            nbr = 1U << pos;
            if (bit_pos (nbr) != pos) {
                printf ("!!!! error: nbr=%08x bit_pos=%d pos=%d\n",
                        nbr, bit_pos(nbr), pos);
                return EXIT_FAILURE;
            }
            pos++;
        }
        return EXIT_SUCCESS;
    }

-

Thanks njuffa for your effort. Actually 20 bits is enough for my case. But does it show the position of all 2^i's up to i = 20? For some 2^i's, it shows 0 – Shadekur Rahman Aug 23 '15 at 10:46

-

@ShadekurRahman if 20 bits is enough, why are you even asking about theoretical results? Just go for the real-life fastest solution then (which is bitscan intrinsic). – harold Aug 23 '15 at 13:38

-

@ShadekurRahman Not sure what you mean. My answer supplies a test framework that tests `bit_pos()` with 2**i for i=0,..31. I compiled the code with two different compilers and the test passes. For which value do you see `bit_pos()` return an incorrect result? – njuffa Aug 23 '15 at 16:14

You can do it in O(1) if you allow a single memory access:

    #include <iostream>
    using namespace std;

    // indexes[h] holds the bit index for the power of two whose hash is h
    int indexes[] = {
        63,  0, 58,  1, 59, 47, 53,  2,
        60, 39, 48, 27, 54, 33, 42,  3,
        61, 51, 37, 40, 49, 18, 28, 20,
        55, 30, 34, 11, 43, 14, 22,  4,
        62, 57, 46, 52, 38, 26, 32, 41,
        50, 36, 17, 19, 29, 10, 13, 21,
        56, 45, 25, 31, 35, 16,  9, 12,
        44, 24, 15,  8, 23,  7,  6,  5
    };

    int main() {
        unsigned long long n;
        while(cin >> n) {
            // n & (~n + 1) isolates the lowest set bit before hashing
            cout << indexes[((n & (~n + 1)) * 0x07EDD5E59A4E28C2ull) >> 58] << endl;
        }
    }

-

It is quite fast. Can you tell me what's the underlying logic behind this? – Shadekur Rahman Aug 23 '15 at 11:22

-

`n & (~n + 1)` is not needed, the question already promises there will be only one bit set – harold Aug 23 '15 at 12:33

-

@ShadekurRahman I posted an answer in an attempt to explain the logic behind this awesome algorithm – higuaro Aug 24 '15 at 09:19

And the answer is ... ... ... ... ... yes!

Just for fun, since you commented below one of the answers that i up to 20 would suffice.

(multiplications here are by either zero or one)

    #include <iostream>
    using namespace std;

    // !(n ^ c) is 1 only when n == c, so exactly one term is nonzero
    int f(int n){
        return
            0 | !(n ^ 1048576) * 20
              | !(n ^ 524288) * 19
              | !(n ^ 262144) * 18
              | !(n ^ 131072) * 17
              | !(n ^ 65536) * 16
              | !(n ^ 32768) * 15
              | !(n ^ 16384) * 14
              | !(n ^ 8192) * 13
              | !(n ^ 4096) * 12
              | !(n ^ 2048) * 11
              | !(n ^ 1024) * 10
              | !(n ^ 512) * 9
              | !(n ^ 256) * 8
              | !(n ^ 128) * 7
              | !(n ^ 64) * 6
              | !(n ^ 32) * 5
              | !(n ^ 16) * 4
              | !(n ^ 8) * 3
              | !(n ^ 4) * 2
              | !(n ^ 2);
    }

    int main() {
        for (int i=1; i<1048577; i <<= 1){
            cout << f(i) << " "; // 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20
        }
    }
