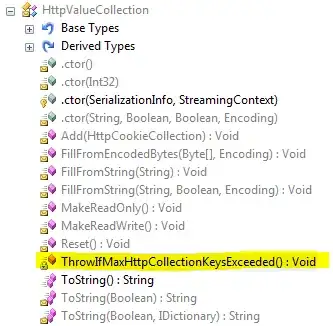

I am using the five-point algorithm through the findEssentialMat() function in OpenCV 2.4.11 to compute the relative pose of one camera with respect to another. For initial tests, I kept two cameras separated along X and took multiple pictures. As the pictures show, the detected (and matched) features remain mostly the same between image pairs because nothing is moving, yet the essential matrix, and with it the epipolar lines, varies a lot from one pair to the next, which in turn affects my pose estimate. To try to improve the accuracy of the E matrix, I also tried running the algorithm twice: first with RANSAC to filter out the outliers, and then with LMEDS, yet I cannot see much improvement. The change is especially large between pictures 1 and 2.
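To put a number on "varying a lot", it may help to remember that an essential matrix is only defined up to scale and sign, so two runs can return matrices that look different entry by entry while describing the same geometry. The following is a minimal sketch of such a comparison in plain Python; the helper names `unit_frobenius` and `essential_distance` are hypothetical and not part of OpenCV, and the matrices are assumed to be 3x3 nested lists.

```python
import math


def unit_frobenius(E):
    """Scale a 3x3 matrix (nested lists) to unit Frobenius norm."""
    norm = math.sqrt(sum(v * v for row in E for v in row))
    if norm == 0.0:
        raise ValueError("essential matrix must be non-zero")
    return [[v / norm for v in row] for row in E]


def essential_distance(E1, E2):
    """Distance between two essential matrices, ignoring scale and sign.

    Both matrices are scaled to unit Frobenius norm, then the smaller of
    ||A - B|| and ||A + B|| is returned, so E and -2*E give 0.
    """
    A = unit_frobenius(E1)
    B = unit_frobenius(E2)
    diff = math.sqrt(sum((a - b) ** 2
                         for ra, rb in zip(A, B) for a, b in zip(ra, rb)))
    summ = math.sqrt(sum((a + b) ** 2
                         for ra, rb in zip(A, B) for a, b in zip(ra, rb)))
    return min(diff, summ)


# Illustrative values only: E for a pure translation along X with no rotation,
# a rescaled and sign-flipped copy of it, and E for a translation along Y.
E_x = [[0.0, 0.0, 0.0], [0.0, 0.0, -1.0], [0.0, 1.0, 0.0]]
E_x_scaled = [[-3.0 * v for v in row] for row in E_x]
E_y = [[0.0, 0.0, 1.0], [0.0, 0.0, 0.0], [-1.0, 0.0, 0.0]]
```

If `essential_distance` is near zero for two runs, the difference between their raw matrices is only a scale or sign ambiguity; a large value indicates that the estimates really disagree.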

Any pointers on what might be changing or going wrong? I am aware that RANSAC can pick different samples on every run, but since all the features should undergo the same "transformation", so to speak, shouldn't the epipolar lines still be similar no matter which points end up as the final inliers? Additionally, the five-point algorithm paper states that it does not fail when all points are coplanar. Is there any way I can improve the essential matrix computation?
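One way to test whether the epipolar lines from different runs are "similar" is to score every estimated E against the same fixed set of matches, using the distance from each point in the second image to the epipolar line of its partner. Below is a minimal sketch in plain Python, assuming the points are already in normalized camera coordinates (pixel coordinates multiplied by the inverse intrinsic matrix); the function names are hypothetical and the sample points are made up for illustration.

```python
import math


def epipolar_line(E, x1):
    """Return the line (a, b, c) in image 2 for point x1 = (x, y) in image 1.

    The line is l = E * [x, y, 1]^T, so a matching point x2 should satisfy
    a*x2[0] + b*x2[1] + c = 0.
    """
    p = (x1[0], x1[1], 1.0)
    return tuple(sum(E[i][j] * p[j] for j in range(3)) for i in range(3))


def point_line_distance(line, x2):
    """Perpendicular distance from point x2 = (x, y) to the line (a, b, c)."""
    a, b, c = line
    denom = math.hypot(a, b)
    if denom == 0.0:
        raise ValueError("degenerate epipolar line")
    return abs(a * x2[0] + b * x2[1] + c) / denom


def mean_epipolar_error(E, pts1, pts2):
    """Average distance of each pts2[i] to the epipolar line of pts1[i]."""
    if len(pts1) != len(pts2) or not pts1:
        raise ValueError("need two non-empty point lists of equal length")
    total = sum(point_line_distance(epipolar_line(E, p1), p2)
                for p1, p2 in zip(pts1, pts2))
    return total / len(pts1)


# Illustrative values only: a pure X translation shifts points horizontally,
# so matches that keep the same y coordinate fit this E exactly.
E_x = [[0.0, 0.0, 0.0], [0.0, 0.0, -1.0], [0.0, 1.0, 0.0]]
pts1 = [(0.10, 0.20), (-0.30, 0.05), (0.25, -0.15)]
pts2_exact = [(x + 0.05, y) for x, y in pts1]
pts2_shifted = [(x + 0.05, y + 0.01) for x, y in pts1]
```

Evaluating `mean_epipolar_error` with the E from each run on one common set of matches shows whether a run fits only its own inliers or the whole set; an error that jumps between runs suggests the estimates differ in substance rather than just in scale.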

Thanks for your time!