The reason for that is that you have many more 1's in your observations than 768's. So even if -1 is not exactly 1, it gets a high predicted value, because the histogram has a much larger larger value at 1 than at 768.

Up to a multiplicative constant, the formula for prediction is:

where K is your kernel, D your observations and h your bandwitdh. Looking at the doc for gaussian_kde, we see that if no value is provided for bw_method, it is estimated in some way, which here doesn't suit you.

So you can try some different values: the larger the bandwidth, the more points far from your new data are taken into account, the limit case being an almost constant predicted function.

On the other hand, a very small bandwidth only takes really close points into account, which is what I thing you want.

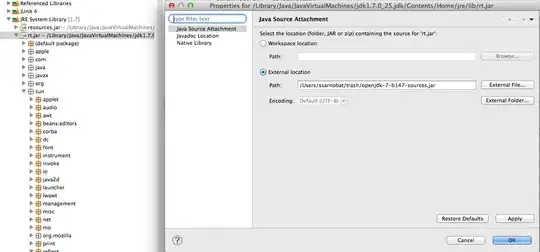

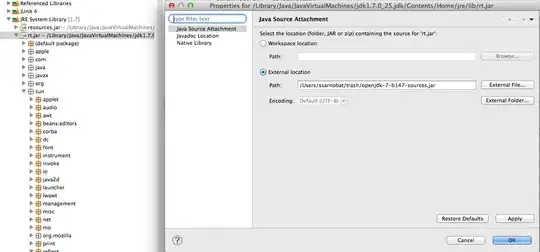

Some graphs to illustrate the influence of the bandwidth:

Code used:

import matplotlib.pyplot as plt

f, axarr = plt.subplots(2, 2, figsize=(10, 10))

for i, h in enumerate([0.01, 0.1, 1, 5]):

my_pdf = gaussian_kde(osservazioni, h)

axarr[i//2, i%2].plot(x, my_pdf(x), 'r') # distribution function

axarr[i//2, i%2].set_title("Bandwidth: {0}".format(h))

axarr[i//2, i%2].hist(osservazioni, normed=1, alpha=.3) # histogram

With your current code, for x=-1, the value of K((x-x_i)/h) for all x_i's who are equal to 1 is smaller than 1, but you add up a lot of these values (there are 921 1s in your observations, and also 357 2s)

On the other hand for x = 768, the value of the kernel is 1 for all x_i's which are 768, but there are not many such points (39 to be precise). So here a lot of "small" terms make a larger sum than a small number of larger terms.

If you don't want this behavior, you can decrease the size of your gaussian kernel : this way the penalty (K(-2)) paid because of the distance between -1 and 1 will be higher. But I think that this would be overfitting your observations.

A formula to determine whether a new sample is acceptable (compared to your empirical distribution) or not is more of a statistical problem, you can have a look at stats.stackexchange.com

You can always try to use a low value for the bandwidth, which will give you a peaked predicted function. Then you can normalize this function, dividing it by its maximal value.

After that, all predicted values will be between 0 and 1:

maxDensityValue = np.max(my_pdf(x))

for e in new_values:

print("{0} {1}".format(e, my_pdf(e)/maxDensityValue))