I want to reconstruct the view-space position from a depth buffer texture. I've managed to bind the depth buffer's shader resource view to the shader, and I believe there's no problem with it.

I use this formula to calculate the view-space position of each pixel on the screen:

```hlsl
Texture2D textures[4]; // color, normal (in view space), depth buffer (as texture), random

SamplerState ObjSamplerState;

cbuffer cbPerObject : register(b0) {
    float4 notImportant;
    float4 notImportant2;
    float2 notImportant3;
    float4x4 projectionInverted;
};

float3 getPosition(float2 textureCoordinates) {
    // textures[2] stores the depth buffer
    float depth = textures[2].Sample(ObjSamplerState, textureCoordinates).r;

    float3 screenPos = float3(textureCoordinates.xy * float2(2, -2) - float2(1, -1), 1 - depth);

    float4 wpos = mul(float4(screenPos, 1.0f), projectionInverted);
    wpos.xyz /= wpos.w;

    return wpos.xyz;
}
```
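The first two components of `screenPos` map texture coordinates (origin at the top-left, v growing downward) to normalized device coordinates (origin at the center, y growing upward). A minimal C++ sketch of just that step, handy for checking the math on the CPU; `uvToNdc` is a hypothetical helper, not part of any API:

```cpp
#include <cassert>
#include <utility>

// Mirrors textureCoordinates.xy * float2(2, -2) - float2(1, -1) from the shader:
//   u in [0, 1], left to right  -> x in [-1, 1], left to right
//   v in [0, 1], top to bottom  -> y in [1, -1], so NDC y points up
std::pair<float, float> uvToNdc(float u, float v) {
    assert(u >= 0.0f && u <= 1.0f && v >= 0.0f && v <= 1.0f);
    float x = u * 2.0f - 1.0f;
    float y = v * -2.0f + 1.0f; // same as 1 - 2v
    return {x, y};
}
```

The corners come out as expected: (0, 0) becomes (-1, 1), (1, 1) becomes (1, -1), and the center (0.5, 0.5) becomes (0, 0).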

But it gives me a wrong result:

I calculate the inverted projection matrix on the CPU like this and pass it to the pixel shader:

```cpp
ConstantBuffer2DStructure cbPerObj;

DirectX::XMFLOAT4X4 projection = camera->getProjection();
DirectX::XMMATRIX camProjection = XMLoadFloat4x4(&projection);
camProjection = XMMatrixTranspose(camProjection);

DirectX::XMVECTOR det;
DirectX::XMMATRIX projectionInverted = XMMatrixInverse(&det, camProjection);
cbPerObj.projectionInverted = projectionInverted;

...

context->UpdateSubresource(constantBuffer, 0, NULL, &cbPerObj, 0, 0);
context->PSSetConstantBuffers(0, 1, &constantBuffer);
```
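Transposing before inverting gives the same matrix as inverting before transposing, because the inverse of a transpose is the transpose of the inverse. So the code above uploads the transpose of the inverse projection, the same convention used for the vertex-shader matrices (assuming the default `column_major` packing and `mul(vector, matrix)` in HLSL). A minimal plain-C++ check of that identity on a perspective matrix with the same layout as `XMMatrixPerspectiveFovLH`; it assumes `getProjection()` returns such a matrix, and `perspectiveFovLH`, `transpose`, `multiply`, `inverse` and `nearlyEqual` are hypothetical stand-ins written without DirectXMath:

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <utility>

using Mat4 = std::array<std::array<double, 4>, 4>; // m[row][col]

// Same element layout as XMMatrixPerspectiveFovLH (row-vector convention, depth in [0, 1]).
Mat4 perspectiveFovLH(double fovY, double aspect, double zn, double zf) {
    double yScale = 1.0 / std::tan(fovY / 2.0);
    double range = zf / (zf - zn);
    Mat4 m{}; // zero-initialized
    m[0][0] = yScale / aspect;
    m[1][1] = yScale;
    m[2][2] = range;
    m[2][3] = 1.0;
    m[3][2] = -range * zn;
    return m;
}

Mat4 transpose(const Mat4& m) {
    Mat4 t{};
    for (int r = 0; r < 4; ++r)
        for (int c = 0; c < 4; ++c) t[c][r] = m[r][c];
    return t;
}

Mat4 multiply(const Mat4& a, const Mat4& b) {
    Mat4 p{};
    for (int r = 0; r < 4; ++r)
        for (int c = 0; c < 4; ++c)
            for (int k = 0; k < 4; ++k) p[r][c] += a[r][k] * b[k][c];
    return p;
}

// Gauss-Jordan elimination with partial pivoting (asserts the matrix is invertible).
Mat4 inverse(Mat4 m) {
    Mat4 inv{};
    for (int i = 0; i < 4; ++i) inv[i][i] = 1.0;
    for (int col = 0; col < 4; ++col) {
        int pivot = col;
        for (int r = col + 1; r < 4; ++r)
            if (std::fabs(m[r][col]) > std::fabs(m[pivot][col])) pivot = r;
        assert(std::fabs(m[pivot][col]) > 1e-12 && "matrix is singular");
        std::swap(m[col], m[pivot]);
        std::swap(inv[col], inv[pivot]);
        double d = m[col][col];
        for (int c = 0; c < 4; ++c) { m[col][c] /= d; inv[col][c] /= d; }
        for (int r = 0; r < 4; ++r) {
            if (r == col) continue;
            double f = m[r][col];
            for (int c = 0; c < 4; ++c) {
                m[r][c] -= f * m[col][c];
                inv[r][c] -= f * inv[col][c];
            }
        }
    }
    return inv;
}

bool nearlyEqual(const Mat4& a, const Mat4& b, double eps = 1e-9) {
    for (int r = 0; r < 4; ++r)
        for (int c = 0; c < 4; ++c)
            if (std::fabs(a[r][c] - b[r][c]) > eps) return false;
    return true;
}
```

For example, `nearlyEqual(inverse(transpose(p)), transpose(inverse(p)))` holds for `p = perspectiveFovLH(3.14159265358979 / 4.0, 16.0 / 9.0, 0.1, 100.0)`.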

I know that these calculations for the vertex shader are correct (so I assume that `myCamera->getProjection()` returns the right result):

```cpp
DirectX::XMFLOAT4X4 view = myCamera->getView();
DirectX::XMMATRIX camView = XMLoadFloat4x4(&view);

DirectX::XMFLOAT4X4 projection = myCamera->getProjection();
DirectX::XMMATRIX camProjection = XMLoadFloat4x4(&projection);

DirectX::XMMATRIX worldViewProjectionMatrix = objectWorldMatrix * camView * camProjection;

constantsPerObject.worldViewProjection = XMMatrixTranspose(worldViewProjectionMatrix);
constantsPerObject.world = XMMatrixTranspose(objectWorldMatrix);
constantsPerObject.view = XMMatrixTranspose(camView);
```

So have I calculated the inverted projection matrix incorrectly, or did I make a different mistake?

Edit

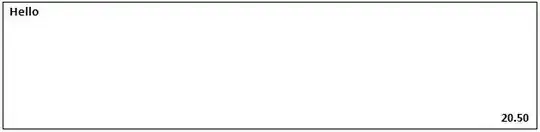

As @NicoSchertler spotted, the `1 - depth` part in the shader was a mistake. I've changed it to `depth - 1` and made some minor changes to the texture formats etc. This is my result now:

Note that this is from a different camera angle (I don't have the earlier one anymore). For reference, here are the normals in view space:

It looks somewhat better, but is it right? It looks strange and not very smooth. Is it a precision problem?
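Perspective depth is non-linear in view-space z, so most of the precision of a UNORM depth buffer is spent close to the near plane. To estimate how much roughness quantization alone could cause, here is a minimal C++ sketch for a Direct3D-style left-handed projection (depth 0 at the near plane, 1 at the far plane). The near/far distances and bit depths used below are assumptions for illustration, and the helper names are hypothetical:

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Depth written by a Direct3D-style LH perspective projection (same z row as
// XMMatrixPerspectiveFovLH): 0 at the near plane zn, 1 at the far plane zf.
double viewZToDepth(double z, double zn, double zf) {
    return zf * (z - zn) / ((zf - zn) * z);
}

// Inverse of viewZToDepth: view-space z from a depth value.
double depthToViewZ(double d, double zn, double zf) {
    return zn * zf / (zf - d * (zf - zn));
}

// Rounds depth to the nearest value a `bits`-bit UNORM format can store
// (16 bits as in DXGI_FORMAT_D16_UNORM, 24 bits as in DXGI_FORMAT_D24_UNORM_S8_UINT).
double quantizeUnorm(double d, int bits) {
    assert(d >= 0.0 && d <= 1.0);
    double maxValue = std::ldexp(1.0, bits) - 1.0; // 2^bits - 1
    return std::round(d * maxValue) / maxValue;
}

// Largest view-space z error over `samples` evenly spaced points in [zBegin, zEnd]
// when depth is stored with `bits` bits and converted back.
double maxViewZError(double zBegin, double zEnd, double zn, double zf, int bits, int samples) {
    assert(samples >= 2);
    double worst = 0.0;
    for (int i = 0; i < samples; ++i) {
        double z = zBegin + (zEnd - zBegin) * i / (samples - 1);
        double stored = quantizeUnorm(viewZToDepth(z, zn, zf), bits);
        worst = std::max(worst, std::fabs(depthToViewZ(stored, zn, zf) - z));
    }
    return worst;
}
```

With near = 0.1 and far = 100, the sampled error between z = 40 and z = 60 exceeds 0.05 view-space units for 16-bit depth but stays below 0.01 for 24-bit depth, while between z = 1 and z = 2 the 16-bit error stays below 0.001.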

Edit 2

As @NicoSchertler suggested, depth in DirectX is in the [0, 1] range, so I've changed `depth - 1` to `depth`:

```hlsl
float depth = textures[2].Sample(ObjSamplerState, textureCoordinates).r;

float3 screenPos = float3(textureCoordinates.xy * float2(2, -2) - float2(1, -1), depth); // <-- the change (was: depth - 1)

float4 wpos = mul(float4(screenPos, 1.0f), projectionInverted);
wpos.xyz /= wpos.w;

return wpos.xyz;
```
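With the sampled depth used directly as NDC z, this is the usual unprojection for Direct3D's [0, 1] depth range. A minimal plain-C++ sketch that round-trips a view-space point through a left-handed perspective projection with the same layout as `XMMatrixPerspectiveFovLH` and back, assuming `getProjection()` builds that kind of matrix. The helper names are hypothetical, and the inverse is written out in closed form instead of calling `XMMatrixInverse`:

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec4 = std::array<double, 4>;
using Mat4 = std::array<std::array<double, 4>, 4>; // m[row][col]

// Row vector times matrix, like mul(float4, float4x4) in HLSL.
Vec4 mulRow(const Vec4& v, const Mat4& m) {
    Vec4 r{};
    for (int c = 0; c < 4; ++c)
        for (int k = 0; k < 4; ++k) r[c] += v[k] * m[k][c];
    return r;
}

struct Projection { double xScale, yScale, range, zn; };

Projection makeProjection(double fovY, double aspect, double zn, double zf) {
    double yScale = 1.0 / std::tan(fovY / 2.0);
    return {yScale / aspect, yScale, zf / (zf - zn), zn};
}

// Same element layout as XMMatrixPerspectiveFovLH.
Mat4 projectionMatrix(const Projection& p) {
    Mat4 m{};
    m[0][0] = p.xScale;
    m[1][1] = p.yScale;
    m[2][2] = p.range;
    m[2][3] = 1.0;
    m[3][2] = -p.range * p.zn;
    return m;
}

// Closed-form inverse of projectionMatrix(p) in the row-vector convention.
Mat4 inverseProjectionMatrix(const Projection& p) {
    Mat4 m{};
    m[0][0] = 1.0 / p.xScale;
    m[1][1] = 1.0 / p.yScale;
    m[2][3] = -1.0 / (p.range * p.zn);
    m[3][2] = 1.0;
    m[3][3] = 1.0 / p.zn;
    return m;
}

// Same steps as getPosition(): uv -> NDC, depth used directly as NDC z, unproject, divide by w.
Vec4 reconstructViewPos(double u, double v, double depth, const Mat4& invProj) {
    Vec4 pos = mulRow(Vec4{u * 2.0 - 1.0, v * -2.0 + 1.0, depth, 1.0}, invProj);
    assert(pos[3] != 0.0);
    for (int i = 0; i < 3; ++i) pos[i] /= pos[3];
    pos[3] = 1.0;
    return pos;
}

// Projects a view-space point and returns the (u, v, depth) a full-screen pass would sample.
Vec4 projectToUvDepth(const Vec4& viewPos, const Mat4& proj) {
    Vec4 clip = mulRow(viewPos, proj);
    double x = clip[0] / clip[3], y = clip[1] / clip[3], z = clip[2] / clip[3];
    return {(x + 1.0) / 2.0, (1.0 - y) / 2.0, z, 1.0};
}
```

Passing `depth` recovers the original point; passing `depth - 1` instead puts every reconstructed point at or in front of the near plane, whatever the actual depth.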

But then I got this result: