I've started a Hadoop cluster composed of one master and 4 slave nodes.

The configuration seems OK:

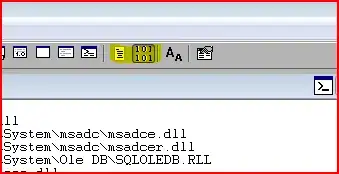

```
hduser@ubuntu-amd64:/usr/local/hadoop$ ./bin/hdfs dfsadmin -report
```

When I open the NameNode UI (http://10.20.0.140:50070/), the Overview tab looks fine; for example, the total capacity of all nodes adds up correctly.

The problem is that the Datanodes tab shows only one datanode.
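To compare what `hdfs dfsadmin -report` says with what the Datanodes tab shows, a minimal sketch like the one below can list the hostname each datanode reports. It assumes the Hadoop 2.x report format, in which every live datanode block contains a `Hostname:` line, and that the report has been saved to a text file first. The script name `check_datanodes.py` and the function names are hypothetical. If several datanodes report the same hostname, that is worth comparing against the single entry in the UI.

```python
from __future__ import annotations

import sys
from collections import Counter


def datanode_hostnames(report: str) -> list[str]:
    """Return the value of every 'Hostname:' line in a dfsadmin report.

    Assumes the Hadoop 2.x report layout, where each datanode block
    has a line of the form 'Hostname: <name>'.
    """
    hosts = []
    for line in report.splitlines():
        line = line.strip()
        if line.startswith("Hostname:"):
            hosts.append(line.split(":", 1)[1].strip())
    return hosts


def duplicate_hostnames(report: str) -> dict[str, int]:
    """Map each hostname reported by more than one datanode to its count."""
    counts = Counter(datanode_hostnames(report))
    return {host: n for host, n in counts.items() if n > 1}


if __name__ == "__main__" and len(sys.argv) > 1:
    # Read a saved report, e.g. produced with:
    #   ./bin/hdfs dfsadmin -report > report.txt
    with open(sys.argv[1]) as f:
        text = f.read()
    hosts = datanode_hostnames(text)
    print(f"{len(hosts)} datanode hostname(s): {hosts}")
    dupes = duplicate_hostnames(text)
    if dupes:
        print(f"hostnames shared by several datanodes: {dupes}")
```

Usage, assuming the script is saved as `check_datanodes.py`: run `./bin/hdfs dfsadmin -report > report.txt`, then `python3 check_datanodes.py report.txt`. Reading from a file rather than stdin keeps the script from blocking when it is run without input.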