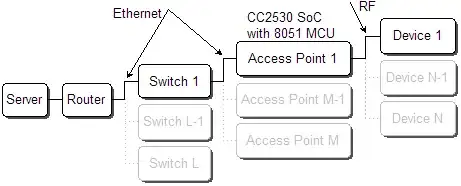

I'm implementing SSAO (Screen-Space Ambient Occlusion) using the oriented-hemisphere technique.

I) The algorithm

This algorithm requires the following inputs:

- 1 array containing precomputed samples (loaded before the main loop; in my example I use 64 samples oriented along the z axis).

- 1 noise texture containing normalized rotation vectors, also oriented along the z axis (this texture is generated once).

- 2 textures from the G-buffer: 'PositionSampler' and 'NormalSampler', containing the positions and normal vectors in view space.
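A minimal C++ sketch of how the first two inputs could be generated on the client side. This is an illustration under assumptions, not the exact code behind the post: the helper names `MakeSSAOKernel` and `MakeSSAONoise` are hypothetical, the samples are assumed to lie inside the unit hemisphere around +z, and the rotation vectors are assumed to lie in the xy-plane (that is, rotations around the z axis).

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

using Vec3 = std::array<float, 3>;

// Scale a vector to unit length (the input must be non-zero).
static Vec3 Normalized(const Vec3& v)
{
    const float len = std::sqrt(v[0] * v[0] + v[1] * v[1] + v[2] * v[2]);
    assert(len > 0.0f);
    return { v[0] / len, v[1] / len, v[2] / len };
}

// Hypothetical helper: builds `count` samples inside the unit hemisphere
// around +z, suitable for uploading to a uniform array such as SSAOKernel.
std::vector<Vec3> MakeSSAOKernel(std::size_t count, std::mt19937& rng)
{
    std::uniform_real_distribution<float> signedUnit(-1.0f, 1.0f);
    std::uniform_real_distribution<float> unit(0.0f, 1.0f);

    std::vector<Vec3> kernel;
    kernel.reserve(count);
    while (kernel.size() < count)
    {
        // Random direction with z >= 0, so it stays in the +z hemisphere.
        const Vec3 raw = { signedUnit(rng), signedUnit(rng), unit(rng) };
        const float len2 = raw[0] * raw[0] + raw[1] * raw[1] + raw[2] * raw[2];
        if (len2 < 1e-6f)
            continue; // Reject near-zero vectors that cannot be normalized.

        const Vec3 dir = Normalized(raw);
        const float radius = unit(rng); // Random distance from the origin.
        kernel.push_back({ dir[0] * radius, dir[1] * radius, dir[2] * radius });
    }
    return kernel;
}

// Hypothetical helper: builds `count` unit rotation vectors in the xy-plane.
std::vector<Vec3> MakeSSAONoise(std::size_t count, std::mt19937& rng)
{
    std::uniform_real_distribution<float> angle(0.0f, 6.2831853f);

    std::vector<Vec3> noise;
    noise.reserve(count);
    for (std::size_t i = 0; i < count; ++i)
    {
        const float a = angle(rng);
        noise.push_back({ std::cos(a), std::sin(a), 0.0f }); // Unit length, z = 0.
    }
    return noise;
}
```

Since the shader below decodes the noise texture with `* 2.0f - 1.0f`, the vectors would have to be stored as `v * 0.5 + 0.5` if the texture uses an unsigned normalized format.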

Here's the fragment shader source code I use:

    #version 400

    /*
    ** Output color value.
    */
    layout (location = 0) out vec4 FragColor;

    /*
    ** Vertex inputs.
    */
    in VertexData_VS
    {
        vec2 TexCoords;
    } VertexData_IN;

    /*
    ** Projection matrix (view space -> clip space).
    */
    uniform mat4 ProjMatrix;

    /*
    ** GBuffer samplers.
    */
    uniform sampler2D PositionSampler;
    uniform sampler2D NormalSampler;

    /*
    ** Noise sampler.
    */
    uniform sampler2D NoiseSampler;

    /*
    ** Noise texture viewport.
    */
    uniform vec2 NoiseTexOffset;

    /*
    ** Ambient light intensity.
    */
    uniform vec4 AmbientIntensity;

    /*
    ** SSAO kernel + size.
    */
    uniform vec3 SSAOKernel[64];
    uniform uint SSAOKernelSize;
    uniform float SSAORadius;

    /*
    ** Computes Orientation matrix.
    */
    mat3 GetOrientationMatrix(vec3 normal, vec3 rotation)
    {
        vec3 tangent = normalize(rotation - normal * dot(rotation, normal)); //Gram-Schmidt process
        vec3 bitangent = cross(normal, tangent);
        return (mat3(tangent, bitangent, normal)); //Orientation according to the normal
    }

    /*
    ** Fragment shader entry point.
    */
    void main(void)
    {
        float OcclusionFactor = 0.0f;

        vec3 gNormal_CS = normalize(texture(
            NormalSampler, VertexData_IN.TexCoords).xyz * 2.0f - 1.0f); //Normal vector in view space from GBuffer
        vec3 rotationVec = normalize(texture(NoiseSampler,
            VertexData_IN.TexCoords * NoiseTexOffset).xyz * 2.0f - 1.0f); //Rotation vector required for Gram-Schmidt process
        vec3 Origin_VS = texture(PositionSampler, VertexData_IN.TexCoords).xyz; //Origin vertex in view space from GBuffer
        mat3 OrientMatrix = GetOrientationMatrix(gNormal_CS, rotationVec);

        for (uint idx = 0u; idx < SSAOKernelSize; idx++) //For each sample (64 iterations)
        {
            vec4 Sample_VS = vec4(Origin_VS + OrientMatrix * SSAOKernel[idx], 1.0f); //Sample translated in view space
            vec4 Sample_HS = ProjMatrix * Sample_VS; //Sample in homogeneous (clip) space
            vec3 Sample_CS = Sample_HS.xyz / Sample_HS.w; //Perspective divide (normalized device coordinates)
            vec2 texOffset = Sample_CS.xy * 0.5f + 0.5f; //Recover sample texture coordinates
            vec3 SampleDepth_VS = texture(PositionSampler, texOffset).xyz; //Sample depth in view space

            if (Sample_VS.z < SampleDepth_VS.z)
            {
                if (length(Sample_VS.xyz - SampleDepth_VS) <= SSAORadius)
                    OcclusionFactor += 1.0f; //Occlusion accumulation
            }
        }

        OcclusionFactor = 1.0f - (OcclusionFactor / float(SSAOKernelSize));
        FragColor = vec4(OcclusionFactor);
        FragColor *= AmbientIntensity;
    }
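To check the two geometric steps of this shader in isolation, they can be mirrored on the CPU. The following is a small C++ sketch under assumptions: `MakeOrientationBasis`, `Orient` and `ViewToTexCoords` are hypothetical names, and the projection matrix is assumed to be stored column-major, as OpenGL expects.

```cpp
#include <array>
#include <cmath>

using Vec3 = std::array<float, 3>;
using Mat4 = std::array<float, 16>; // Column-major: element (row r, col c) is m[c * 4 + r].

struct Basis { Vec3 tangent, bitangent, normal; };

float Dot(const Vec3& a, const Vec3& b)
{
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

Vec3 Cross(const Vec3& a, const Vec3& b)
{
    return { a[1] * b[2] - a[2] * b[1],
             a[2] * b[0] - a[0] * b[2],
             a[0] * b[1] - a[1] * b[0] };
}

Vec3 Normalize(const Vec3& v)
{
    const float len = std::sqrt(Dot(v, v));
    return { v[0] / len, v[1] / len, v[2] / len };
}

// CPU mirror of GetOrientationMatrix: one Gram-Schmidt step removes the
// component of `rotation` along `normal`, then the cross product completes the basis.
Basis MakeOrientationBasis(const Vec3& normal, const Vec3& rotation)
{
    const float d = Dot(rotation, normal);
    const Vec3 tangent = Normalize({ rotation[0] - normal[0] * d,
                                     rotation[1] - normal[1] * d,
                                     rotation[2] - normal[2] * d });
    return { tangent, Cross(normal, tangent), normal };
}

// Equivalent of `OrientMatrix * sample`: the basis vectors are the matrix columns.
Vec3 Orient(const Basis& b, const Vec3& s)
{
    return { b.tangent[0] * s[0] + b.bitangent[0] * s[1] + b.normal[0] * s[2],
             b.tangent[1] * s[0] + b.bitangent[1] * s[1] + b.normal[1] * s[2],
             b.tangent[2] * s[0] + b.bitangent[2] * s[1] + b.normal[2] * s[2] };
}

// Mirrors the projection, perspective divide and `* 0.5 + 0.5` remap
// applied to each sample (the point is treated as having w = 1).
std::array<float, 2> ViewToTexCoords(const Mat4& proj, const Vec3& p)
{
    const float x = proj[0] * p[0] + proj[4] * p[1] + proj[8]  * p[2] + proj[12];
    const float y = proj[1] * p[0] + proj[5] * p[1] + proj[9]  * p[2] + proj[13];
    const float w = proj[3] * p[0] + proj[7] * p[1] + proj[11] * p[2] + proj[15];
    return { (x / w) * 0.5f + 0.5f, (y / w) * 0.5f + 0.5f };
}
```

One thing this makes visible: if `rotation` is parallel to `normal`, the Gram-Schmidt step yields the zero vector and the normalization is undefined, in the sketch as in the shader.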

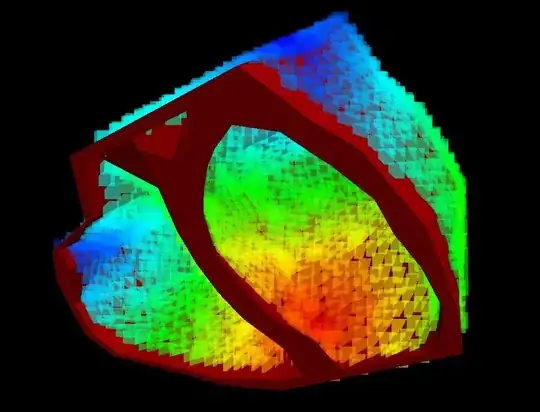

And here's the result (without blur render pass):

Until here all seems to be correct.

II) The performance

Using the NSight debugger, I noticed some very strange behaviour regarding performance:

As I move my camera closer and closer to the dragon, performance drops drastically.

To my mind, this should not be the case, because the SSAO algorithm is applied in screen space and does not depend on, for example, the number of primitives in the dragon.

Here are 3 screenshots taken from 3 different camera positions (in all 3 cases, all 1024*768 pixel shader invocations run the same algorithm):

a) GPU idle: 40% (pixels affected: 100%)

b) GPU idle: 25% (pixels affected: 100%)

c) GPU idle: 2%! (pixels affected: 100%)

In my example, my rendering engine uses exactly 2 render passes:

- the Material pass (filling the position and normal samplers)

- the Ambient pass (filling the SSAO texture)

I first thought the problem came from the combined cost of these two passes, but that is not the case: I added a condition in my client code that skips the Material pass when the camera is stationary. So when I took the 3 screenshots above, only the Ambient pass was executed, and the performance drop is not related to the Material pass. Another argument: if I remove the dragon mesh (leaving a scene with just the plane), the result is the same. The closer my camera is to the plane, the bigger the performance drop!

To me, this behaviour is not logical! As I said above, in all 3 cases every pixel runs exactly the same pixel shader code!
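The stationary-camera condition mentioned above could look roughly like the following C++ sketch. The class name `MaterialPassGate` and its method are hypothetical, and it assumes that an unchanged view matrix is the only criterion for reusing the G-buffer.

```cpp
#include <array>

using Mat4 = std::array<float, 16>;

// Hypothetical helper: remembers the view matrix that was last used to
// fill the G-buffer and reports whether the material pass must run again.
class MaterialPassGate
{
public:
    // Returns true when the view matrix differs from the one seen on the
    // previous call (or on the first call), false when the camera is stationary.
    bool ShouldRender(const Mat4& view)
    {
        if (hasPrevious_ && view == previous_)
            return false; // Same view as last time: the G-buffer is still valid.

        previous_ = view;
        hasPrevious_ = true;
        return true;
    }

private:
    Mat4 previous_{};
    bool hasPrevious_ = false;
};
```

The comparison is an exact float comparison, which is enough to detect a camera that did not move at all. Anything else that invalidates the G-buffer (animated geometry, a viewport resize) would need its own check.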

I also noticed another strange behaviour when I change one small piece of code directly in the fragment shader.

If I replace the line:

    FragColor = vec4(OcclusionFactor);

with the line:

    FragColor = vec4(1.0f, 1.0f, 1.0f, 1.0f);

the performance drop disappears!

In other words, when the SSAO code still runs (I placed some breakpoints during execution to check this) but OcclusionFactor is not used to fill the final output color, there is no performance drop!

So I think we can conclude that the problem does not come from the shader code before the line "FragColor = vec4(OcclusionFactor);", although I'm not certain.

How can you explain such behaviour?

I have tried many combinations of changes in both the client code and the fragment shader code, but I can't find the solution to this problem! I'm really lost.

Thank you very much in advance for your help!