You would think they would make public read the default behavior, wouldn't you? : )

I shared your frustration while building a custom API to interface with S3 from a C# solution. Here is the snippet that accomplishes uploading an S3 object and setting it to public-read access by default:

    public void Put(string bucketName, string id, byte[] bytes, string contentType, S3ACLType acl) {
        // Path-style URL: https://s3.amazonaws.com/<bucket>
        string uri = String.Format("https://{0}/{1}", BASE_SERVICE_URL, bucketName.ToLower());

        // Wrap the payload and set the headers S3 expects
        DreamMessage msg = DreamMessage.Ok(MimeType.BINARY, bytes);
        msg.Headers[DreamHeaders.CONTENT_TYPE] = contentType;
        msg.Headers[DreamHeaders.EXPECT] = "100-continue";
        msg.Headers[AWS_ACL_HEADER] = ToACLString(acl); // x-amz-acl: public-read

        try {
            // The pre-handler adds the signed authorization header to the request
            Plug s3Client = Plug.New(uri).WithPreHandler(S3AuthenticationHeader);
            s3Client.At(id).Put(msg);
        } catch (Exception ex) {
            // Pass ex along as the inner exception so the original stack trace is not lost
            throw new ApplicationException(String.Format("S3 upload error: {0}", ex.Message), ex);
        }
    }

The ToACLString(acl) function returns public-read, BASE_SERVICE_URL is s3.amazonaws.com, and the AWS_ACL_HEADER constant is x-amz-acl. The Plug and DreamMessage types will probably look unfamiliar: we use the Dream framework to streamline our HTTP communication. Essentially, we're doing an HTTP PUT with the specified headers plus an authorization header signed per the AWS specification (see this page in the AWS docs for examples of how to construct the authorization header).
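For anyone not using Dream, here is a rough sketch of what the two helpers could look like. It is not our actual code: the S3ACLType members, the class name, and the method signatures are made up for illustration, and the signing follows the legacy REST scheme (Signature Version 2, HMAC-SHA1) that the docs described when this was written. Newer regions require Signature Version 4, so treat this as a sketch under those assumptions.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Hypothetical enum: the members are an assumption, chosen to match S3's canned ACL names.
public enum S3ACLType { Private, PublicRead, PublicReadWrite, AuthenticatedRead }

public static class S3SignatureSketch {
    // Maps the enum to the value sent in the x-amz-acl header.
    public static string ToACLString(S3ACLType acl) {
        switch (acl) {
            case S3ACLType.PublicRead: return "public-read";
            case S3ACLType.PublicReadWrite: return "public-read-write";
            case S3ACLType.AuthenticatedRead: return "authenticated-read";
            default: return "private";
        }
    }

    // Builds the Authorization header value for a path-style PUT that carries
    // exactly one x-amz-* header (x-amz-acl). The date must be the same string
    // that is sent in the request's Date header.
    public static string BuildAuthorizationHeader(
            string accessKeyId, string secretKey, string bucketName, string id,
            string contentType, string date, S3ACLType acl) {
        string stringToSign =
            "PUT\n" +
            "\n" +                                   // Content-MD5, left empty in this sketch
            contentType + "\n" +
            date + "\n" +
            "x-amz-acl:" + ToACLString(acl) + "\n" + // canonicalized x-amz-* headers
            "/" + bucketName.ToLower() + "/" + id;   // canonicalized resource

        using (var hmac = new HMACSHA1(Encoding.UTF8.GetBytes(secretKey))) {
            byte[] hash = hmac.ComputeHash(Encoding.UTF8.GetBytes(stringToSign));
            return "AWS " + accessKeyId + ":" + Convert.ToBase64String(hash);
        }
    }
}
```

You would call BuildAuthorizationHeader with DateTime.UtcNow.ToString("r") as the date, then send the result as the Authorization header alongside the matching Date, Content-Type, and x-amz-acl headers. If you add more x-amz-* headers, they all have to go into the string to sign, lowercased and sorted by name.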

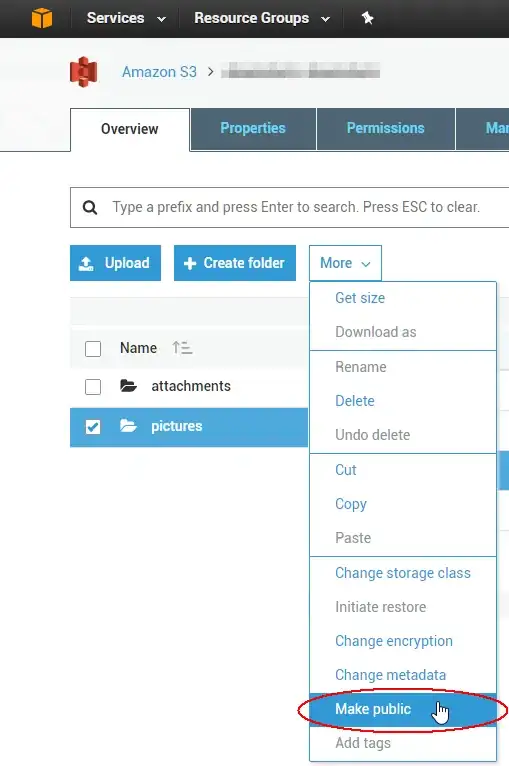

To change the ACLs on your existing 1,000 objects, you could write a script, but it's probably easier to use a GUI tool to fix the immediate issue. The best I've used so far is from a company called CloudBerry, for S3; it looks like they offer a free 15-day trial for at least one of their products. I've just verified that it lets you select multiple objects at once and set their ACL to public through the context menu. Enjoy the cloud!
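If you do go the script route, something along these lines might work. Note that this is a different approach from the snippet above: it assumes the official AWS SDK for .NET (the AWSSDK.S3 NuGet package) rather than Dream, with credentials and region picked up from your default configuration. The class and method names are mine, and you should check the SDK calls against the version you have installed.

```csharp
using System.Threading.Tasks;
using Amazon.S3;
using Amazon.S3.Model;

public static class MakeObjectsPublicSketch {
    // Walks every object in the bucket and sets its ACL to public-read.
    public static async Task RunAsync(string bucketName) {
        using (var s3 = new AmazonS3Client()) {
            var listRequest = new ListObjectsV2Request { BucketName = bucketName };
            ListObjectsV2Response page;
            do {
                // Listings are paged, so keep going until S3 says there is no more.
                page = await s3.ListObjectsV2Async(listRequest);
                if (page.S3Objects != null) {
                    foreach (S3Object obj in page.S3Objects) {
                        await s3.PutACLAsync(new PutACLRequest {
                            BucketName = bucketName,
                            Key = obj.Key,
                            CannedACL = S3CannedACL.PublicRead
                        });
                    }
                }
                listRequest.ContinuationToken = page.NextContinuationToken;
            } while (page.IsTruncated == true);
        }
    }
}
```

This makes one request per object, so for 1,000 objects it is slow but simple. Try it on a test bucket first.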