I’m a bit confused about how new MapReduce2 applications should be developed to work with YARN and what happen with the old ones.

I currently have MapReduce1 applications which basically consist in:

- Drivers which configure the jobs to be submitted to the cluster (previous JobTracker and now the ResourceManager).

- Mappers + Reducers

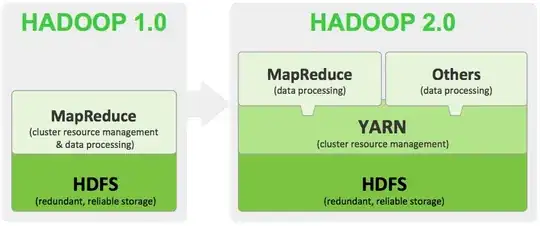

From one side I see that applications coded in MapReduce1 are compatible in MapReduce2 / YARN, with a few caveats, just recompiling with new CDH5 libraries (I work with Cloudera distribution).

But from other side I see information about writing YARN applications in a different way than MapReduce ones (using YarnClient, ApplicationMaster, etc):

http://hadoop.apache.org/docs/r2.7.0/hadoop-yarn/hadoop-yarn-site/WritingYarnApplications.html

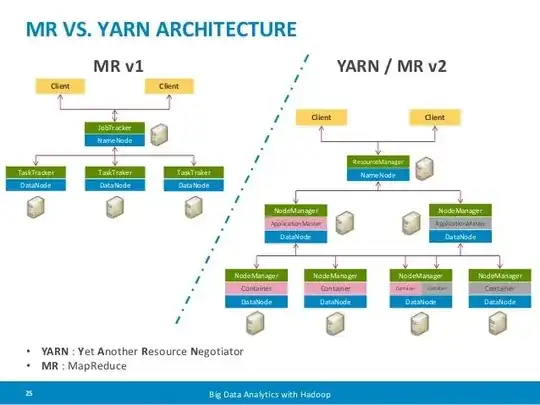

But for me, YARN is just the architecture and how the cluster manage your MR app.

My questions are:

- Are

YARNapplications includingMapReduceapplications? - Should I write my code like a

YARNapplication, forgetting drivers and creating Yarn clients,ApplicationMastersand so on? - Can I still develop the client classes with drivers + job settings?

Are

MapReduce1(recompiled with MR2 libraries) jobs managed byYARNin the same way that YARN applications? - What differences are between

MapReduce1applications andYARNapplications regarding the way in whichYARNwill manage them internally?

Thanks in advance