I had a quick go at this just from the command line using ImageMagick. I am sure it could be improved upon by looking at the squareness of the detected blobs, but I don't have infinite time available and you said any ideas are welcome...

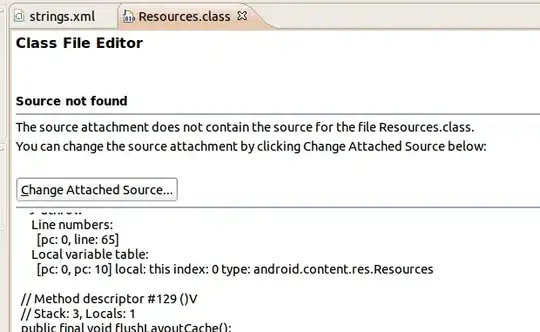

First, I thresholded the image, then replaced each pixel with the maximum pixel value in a horizontal window extending 6 pixels to its left and right (13 pixels wide in total, hence `13x1`). This joins the two halves of each of your coffee-bean shapes together. The command is:

```bash
convert https://i.stack.imgur.com/mr0OM.jpg -threshold 80% -statistic maximum 13x1 w.jpg
```

and it looks like this:

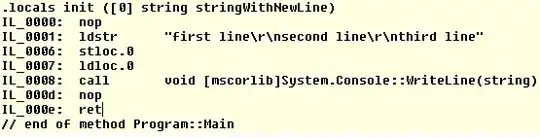
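To make the `-threshold 80% -statistic maximum 13x1` step concrete, here is a minimal pure-Python sketch of what it does to a single row of pixels. It assumes the image is already greyscale with 8-bit values (0-255) and that the window is simply clamped at the image border; the helper names are hypothetical, and this illustrates the idea rather than ImageMagick's implementation.

```python
def threshold_row(row, fraction=0.8, max_value=255):
    """Binarise a row: values above fraction*max_value become white, the rest black."""
    limit = fraction * max_value
    return [max_value if v > limit else 0 for v in row]


def horizontal_max(row, radius=6):
    """Replace each pixel with the maximum in a window of `radius` pixels either side.

    radius=6 gives a 13-pixel-wide window, like `-statistic maximum 13x1`.
    The window is clamped at the row ends (an assumption about edge handling).
    """
    n = len(row)
    return [max(row[max(0, x - radius):min(n, x + radius + 1)]) for x in range(n)]


# Two bright "halves" of a bean separated by a 5-pixel dark gap.
row = [20] * 10 + [240] * 4 + [30] * 5 + [240] * 4 + [20] * 10
binary = threshold_row(row)
joined = horizontal_max(binary)
print(joined)
```

With a radius of 6, any dark gap of up to 12 pixels between two white runs gets filled, which is what merges the two halves. The same filter also makes every blob about 12 pixels wider (6 on each side), so the widths reported by the later connected-components step are inflated by roughly that amount.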

I then added a Connected Components Analysis to find the blobs, like this:

```bash
convert https://i.stack.imgur.com/mr0OM.jpg \
  -threshold 80% -statistic maximum 13x1 \
  -define connected-components:verbose=true \
  -define connected-components:area-threshold=500 \
  -connected-components 8 -auto-level output.png
```

```
Objects (id: bounding-box centroid area mean-color):
0: 1280x1024+0+0 642.2,509.7 1270483 srgb(4,4,4)
151: 30x303+137+712 152.0,863.7 5669 srgb(255,255,255)
185: 29x124+410+852 421.2,913.2 2281 srgb(255,255,255)
43: 48x48+445+247 467.9,271.5 1742 srgb(255,255,255)
35: 21x94+234+214 243.7,259.2 1605 srgb(255,255,255)
10: 52x49+183+31 209.9,56.2 1601 srgb(255,255,255)
30: 31x86+504+176 523.1,217.2 1454 srgb(255,255,255)
171: 61x39+820+805 856.0,825.7 1294 srgb(255,255,255)
119: 20x78+1212+625 1221.6,664.3 1277 srgb(255,255,255)
17: 44x40+587+106 608.3,124.9 1267 srgb(255,255,255)
94: 19x70+1077+545 1086.1,580.6 1100 srgb(255,255,255)
59: 43x33+947+329 967.4,344.3 1092 srgb(255,255,255)
40: 39x32+735+235 754.4,251.0 1074 srgb(255,255,255)
91: 22x62+1258+540 1268.3,571.0 1045 srgb(255,255,255)
18: 23x50+197+124 207.1,148.1 996 srgb(255,255,255)
28: 40x28+956+165 976.8,177.7 970 srgb(255,255,255)
76: 22x55+865+467 875.6,493.8 955 srgb(255,255,255)
187: 18x59+236+858 244.4,886.4 928 srgb(255,255,255)
211: 46x27+720+997 743.8,1009.0 891 srgb(255,255,255)
206: 19x47+418+977 427.5,1000.5 804 srgb(255,255,255)
57: 21x44+231+313 241.4,335.5 769 srgb(255,255,255)
97: 20x45+1215+553 1224.3,574.3 766 srgb(255,255,255)
52: 19x47+516+293 525.4,316.2 752 srgb(255,255,255)
129: 20x41+18+645 28.2,665.1 746 srgb(255,255,255)
83: 21x45+1079+497 1088.1,518.9 746 srgb(255,255,255)
84: 17x44+636+514 644.0,535.7 704 srgb(255,255,255)
62: 19x43+514+348 523.3,369.3 704 srgb(255,255,255)
201: 19x42+233+951 242.3,971.8 675 srgb(255,255,255)
134: 21x39+875+659 884.3,676.9 667 srgb(255,255,255)
194: 25x32+498+910 509.5,924.6 625 srgb(255,255,255)
78: 19x38+459+483 467.8,501.8 622 srgb(255,255,255)
100: 20x37+21+572 30.6,589.4 615 srgb(255,255,255)
53: 18x37+702+296 710.5,314.5 588 srgb(255,255,255)
154: 18x37+1182+723 1191.2,741.3 566 srgb(255,255,255)
181: 47x18+808+842 827.6,850.4 565 srgb(255,255,255)
80: 19x33+525+486 534.2,501.9 544 srgb(255,255,255)
85: 17x34+611+517 618.9,533.4 527 srgb(255,255,255)
203: 21x31+51+960 60.5,974.6 508 srgb(255,255,255)
177: 19x30+692+827 700.7,841.5 503 srgb(255,255,255)
```

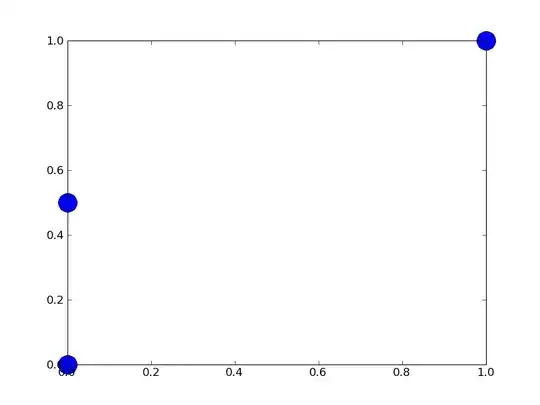

which shows me all the blobs it found, their boundaries and centroids. I then had ImageMagick draw the detected boxes onto your image as follows:

To explain the output: each line represents a blob. The first line after the header (id 0) is the black background, so let's look at the second line, which is:

```
151: 30x303+137+712 152.0,863.7 5669 srgb(255,255,255)
```

This means the blob's bounding box is 30 pixels wide by 303 pixels tall, with its top-left corner 137 pixels from the left edge of the image and 712 pixels down from the top, so it is the tallest green box at the bottom left of the image. Its centroid is at x,y = 152.0,863.7, its area is 5,669 pixels and its colour is white.
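To show roughly how the bounding box, centroid and area in each line are derived, here is a minimal pure-Python sketch of 8-connected component labelling on a tiny binary image (0 = black, 255 = white). It illustrates the technique and is not ImageMagick's implementation: it labels only white pixels (ImageMagick also reports the black background as object 0), and it simply leaves out components smaller than `area_threshold`, which may not match exactly how ImageMagick treats them. The function name and dict layout are hypothetical.

```python
from collections import deque


def connected_components(image, area_threshold=0):
    """Label 8-connected white (non-zero) regions of a binary image.

    `image` is a list of rows of 0/255 values. Returns one dict per component
    with an ImageMagick-style WxH+X+Y bounding box, the centroid (x, y) and the
    area in pixels, largest first. Components smaller than `area_threshold`
    are omitted.
    """
    height, width = len(image), len(image[0])
    seen = [[False] * width for _ in range(height)]
    blobs = []
    for y0 in range(height):
        for x0 in range(width):
            if image[y0][x0] == 0 or seen[y0][x0]:
                continue
            # Breadth-first flood fill from this unvisited white pixel.
            queue = deque([(x0, y0)])
            seen[y0][x0] = True
            pixels = []
            while queue:
                x, y = queue.popleft()
                pixels.append((x, y))
                # Visit all 8 neighbours (diagonals count as connected).
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        nx, ny = x + dx, y + dy
                        if (0 <= nx < width and 0 <= ny < height
                                and image[ny][nx] != 0 and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((nx, ny))
            area = len(pixels)
            if area < area_threshold:
                continue
            xs = [p[0] for p in pixels]
            ys = [p[1] for p in pixels]
            left, top = min(xs), min(ys)
            w, h = max(xs) - left + 1, max(ys) - top + 1
            blobs.append({
                "bbox": f"{w}x{h}+{left}+{top}",
                "centroid": (sum(xs) / area, sum(ys) / area),
                "area": area,
            })
    return sorted(blobs, key=lambda b: b["area"], reverse=True)


W = 255
image = [
    [W, W, 0, 0, 0, 0],
    [W, W, 0, 0, 0, W],
    [0, 0, W, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
]
for blob in connected_components(image, area_threshold=2):
    print(blob)
```

Here the diagonal pixel at (2,2) joins the 2x2 square because of 8-connectivity, giving one 3x3 blob of area 5, while the lone pixel at (5,1) falls below the threshold. With 4-connectivity (`-connected-components 4`) the diagonal pixel would be a separate blob.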

As I said, it can be improved upon, probably by looking at the ratios of the sides of the blobs to find squareness, but it may give you some ideas. By the way, can you say what the blobs are?
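Following up on the squareness idea, here is a minimal Python sketch that parses the verbose connected-components output and keeps only white blobs whose sides are roughly equal. The 0.8 cutoff, the helper names and the restriction to white blobs are assumptions for illustration, and the sample text is just a few lines taken from the output listed earlier.

```python
import re

# One object line of the verbose output:  id: WxH+X+Y cx,cy area colour
LINE = re.compile(
    r"^\s*(\d+):\s+(\d+)x(\d+)\+(\d+)\+(\d+)\s+([\d.]+),([\d.]+)\s+(\d+)\s+(\S+)"
)


def parse_blobs(text):
    """Turn verbose connected-components output into a list of dicts."""
    blobs = []
    for line in text.splitlines():
        m = LINE.match(line)
        if not m:
            continue  # skips the "Objects (...)" header and blank lines
        blob_id, w, h, x, y, cx, cy, area, colour = m.groups()
        blobs.append({
            "id": int(blob_id), "w": int(w), "h": int(h), "x": int(x), "y": int(y),
            "centroid": (float(cx), float(cy)), "area": int(area), "colour": colour,
        })
    return blobs


def squarish(blobs, min_ratio=0.8, colour="srgb(255,255,255)"):
    """Keep blobs of `colour` whose shorter side is at least `min_ratio` of the longer."""
    return [b for b in blobs
            if b["colour"] == colour
            and min(b["w"], b["h"]) / max(b["w"], b["h"]) >= min_ratio]


output = """Objects (id: bounding-box centroid area mean-color):
0: 1280x1024+0+0 642.2,509.7 1270483 srgb(4,4,4)
151: 30x303+137+712 152.0,863.7 5669 srgb(255,255,255)
43: 48x48+445+247 467.9,271.5 1742 srgb(255,255,255)
10: 52x49+183+31 209.9,56.2 1601 srgb(255,255,255)
17: 44x40+587+106 608.3,124.9 1267 srgb(255,255,255)
59: 43x33+947+329 967.4,344.3 1092 srgb(255,255,255)
40: 39x32+735+235 754.4,251.0 1074 srgb(255,255,255)
"""

for b in squarish(parse_blobs(output)):
    print(b["id"], f'{b["w"]}x{b["h"]}', b["centroid"])
```

The colour check matters: the black background (id 0) is 1280x1024, a ratio of exactly 0.8, so it would otherwise pass. Also, since the horizontal `13x1` maximum filter widens each blob by about 6 pixels on each side, you might subtract roughly 12 from `w` before computing the ratio.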