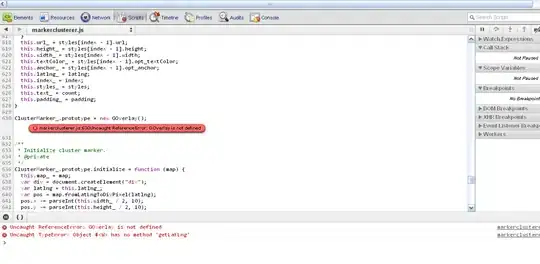

I'm trying to segment the picture above.

I'm using fuzzy c-means with some minimal pre-processing. The segmentation has 3 classes: background (the blue region), meat (the red region) and fat (the white region). The background segmentation works perfectly. However, the meat-and-fat segmentation on the left side of the photo maps a lot of meat tissue as fat. The final meat mask looks like this:
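For context, plain fuzzy c-means on per-pixel color vectors can be sketched roughly as below. This is a minimal pure-Python illustration, not the exact implementation or pre-processing behind the results in this post; the function name `fuzzy_c_means`, the fuzzifier `m = 2`, the fixed iteration count and the brightness-quantile initialization are all assumptions.

```python
def fuzzy_c_means(points, n_clusters=3, m=2.0, n_iter=50):
    """Cluster feature vectors (e.g. RGB tuples) with plain fuzzy c-means.

    Returns (centers, memberships). memberships[k][i] is the degree to which
    point k belongs to cluster i; each row sums to 1.
    """
    n, dim = len(points), len(points[0])
    # Assumed initialization: take centers at evenly spaced brightness quantiles.
    by_brightness = sorted(points, key=sum)
    centers = [list(by_brightness[(2 * i + 1) * n // (2 * n_clusters)])
               for i in range(n_clusters)]
    exponent = 2.0 / (m - 1.0)
    memberships = []
    for _ in range(n_iter):
        # Membership update: u_i = 1 / sum_j (d_i / d_j)^(2 / (m - 1)).
        memberships = []
        for p in points:
            dists = [max(sum((a - b) ** 2 for a, b in zip(p, c)) ** 0.5, 1e-12)
                     for c in centers]
            memberships.append(
                [1.0 / sum((d_i / d_j) ** exponent for d_j in dists)
                 for d_i in dists])
        # Center update: mean of the points weighted by u^m.
        for i in range(n_clusters):
            weights = [row[i] ** m for row in memberships]
            total = sum(weights)
            centers[i] = [sum(w * p[d] for w, p in zip(weights, points)) / total
                          for d in range(dim)]
    return centers, memberships
```

A hard label per pixel is then the index of the largest membership in each row. Because the memberships depend only on distance in color space, a brightly lit meat pixel can end up closer to the fat center than to the meat center.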

I suspect that's because of the lighting conditions, which make the left side brighter, so the algorithm assigns that region to the fat class. I also think there could be some improvement if I could somehow make the surface smoother. I've used a 6x6 median filter, which works alright, but I'm open to new suggestions. Any suggestions on how to overcome this problem? Maybe some kind of smoothing? Thanks :)
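For readers unfamiliar with the smoothing step, a k-by-k median filter on a single channel can be sketched as below. This is an illustrative pure-Python version, not the exact filter implementation used for the results in this post; the function name `median_filter`, the way an even-sized window is placed around the pixel, and the border handling (windows truncated at the image edge) are assumptions.

```python
from statistics import median

def median_filter(channel, size=6):
    """Apply a size x size median filter to a 2-D list of intensities."""
    rows, cols = len(channel), len(channel[0])
    lo = size // 2   # pixels taken before the current pixel
    hi = size - lo   # pixels taken from the current pixel onward
    out = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            # Collect the neighborhood, truncated at the image borders.
            window = [channel[rr][cc]
                      for rr in range(max(0, r - lo), min(rows, r + hi))
                      for cc in range(max(0, c - lo), min(cols, c + hi))]
            out_row.append(median(window))
        out.append(out_row)
    return out
```

A filter like this removes isolated bright specks but leaves a slow brightness gradient across the image untouched, so on its own it would not be expected to fix a lighting difference between the left and right sides.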

Edit 1:

The fat areas are roughly marked in the photo below. The top area is ambiguous, but as rayryeng mentioned in the comments, if it is ambiguous for me as a human, it's alright for the algorithm to misclassify it too. The left-hand section, however, is clearly all meat, and the algorithm assigns a big chunk of it to fat.