I was wondering if someone is able to explain to me how to interpret some G1 GC logs that lead up to an OutOfMemoryError?

I know that a heap dump is the best bet for finding out what is actually using the heap but I can't get that since it contains protected information that cannot leave the client site. All I have are the application logs (which include the stack from the OOME) and the G1 GC logs.

The full G1 GC logs have a lot of detail so I won't put them here unless someone specifically needs to see them.

The specific Java version that these came from was:

> java -version

java version "1.7.0_21"

Java(TM) SE Runtime Environment (build 1.7.0_21-b11)

Java HotSpot(TM) 64-Bit Server VM (build 23.21-b01, mixed mode)

And the GC options I am using to create the GC log are:

-XX:+PrintGCTimeStamps

-XX:+PrintGCDetails

-Xloggc:log/gc.log

Below are all the memory stats from each young and full GC during the last 30 minutes leading up to the OOME:

INFO | jvm 1 | 2015/05/28 04:29:34 | [Eden: 1290M(1290M)->0B(1290M) Survivors: 20M->20M Heap: 2445M(3932M)->1155M(3932M)]

INFO | jvm 1 | 2015/05/28 04:33:21 | [Eden: 1290M(1290M)->0B(1290M) Survivors: 20M->20M Heap: 2445M(3932M)->1155M(3932M)]

INFO | jvm 1 | 2015/05/28 04:37:09 | [Eden: 1290M(1290M)->0B(1290M) Survivors: 20M->20M Heap: 2445M(3932M)->1155M(3932M)]

INFO | jvm 1 | 2015/05/28 04:40:58 | [Eden: 1290M(1290M)->0B(1290M) Survivors: 20M->20M Heap: 2445M(3932M)->1155M(3932M)]

INFO | jvm 1 | 2015/05/28 04:44:44 | [Eden: 1290M(1290M)->0B(1290M) Survivors: 20M->20M Heap: 2445M(3932M)->1155M(3932M)]

INFO | jvm 1 | 2015/05/28 04:48:30 | [Eden: 1290M(1290M)->0B(1290M) Survivors: 20M->20M Heap: 2445M(3932M)->1155M(3932M)]

INFO | jvm 1 | 2015/05/28 04:52:17 | [Eden: 1290M(1290M)->0B(1290M) Survivors: 20M->20M Heap: 2445M(3932M)->1155M(3932M)]

INFO | jvm 1 | 2015/05/28 04:52:58 | [Eden: 639M(1290M)->0B(1295M) Survivors: 20M->15M Heap: 2278M(3932M)->1635M(3932M)]

INFO | jvm 1 | 2015/05/28 04:52:59 | [Eden: 51M(1295M)->0B(1300M) Survivors: 15M->10M Heap: 2561M(3932M)->2505M(3932M)]

INFO | jvm 1 | 2015/05/28 04:52:59 | [Full GC 2505M->1170M(3901M), 1.9469560 secs]

INFO | jvm 1 | 2015/05/28 04:53:01 | [Eden: 44M(1300M)->0B(1299M) Survivors: 0B->1024K Heap: 1653M(3901M)->1610M(3901M)]

INFO | jvm 1 | 2015/05/28 04:53:01 | [Eden: 1024K(1299M)->0B(1299M) Survivors: 1024K->1024K Heap: 1610M(3901M)->1610M(3901M)]

INFO | jvm 1 | 2015/05/28 04:53:02 | [Full GC 1610M->1158M(3891M), 1.4317370 secs]

INFO | jvm 1 | 2015/05/28 04:53:03 | [Eden: 112M(1299M)->0B(1296M) Survivors: 0B->1024K Heap: 1758M(3891M)->1647M(3891M)]

INFO | jvm 1 | 2015/05/28 04:53:06 | [Eden: 49M(1296M)->0B(1360M) Survivors: 1024K->1024K Heap: 2776M(4084M)->2728M(4084M)]

INFO | jvm 1 | 2015/05/28 04:53:06 | [Eden: 0B(1360M)->0B(1360M) Survivors: 1024K->1024K Heap: 2837M(4084M)->2836M(4084M)]

INFO | jvm 1 | 2015/05/28 04:53:06 | [Full GC 2836M->1158M(3891M), 1.4847750 secs]

INFO | jvm 1 | 2015/05/28 04:53:08 | [Full GC 1158M->1158M(3891M), 1.5313770 secs]

* This is a different format to the raw logs and I removed the timing details to make it shorter and easier to read.

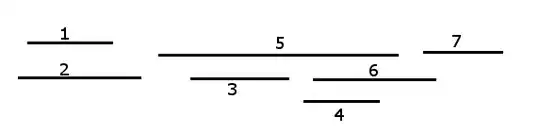

I also graphed the raw GC logs these in GCViewer:

It seems like everything was going ok so far:

- The used tenured was constant (the dark magenta line at the bottom of the graph).

- Young GC pauses occurred every couple of minutes cleaning up all the young objects (the grey line at the top of the graph).

- Allocated sizes for each generation were constant.

- Heap usage after the young GCs was around 1155M.

Then at 2015/05/28 04:52:59 things went pear shaped:

- The tenured all of a sudden started growing.

- When the young GC ran there were only 51M in the eden space.

- Full GCs started occurring.

- The first 3 full GCs seemed ok, they reduced the heap usage to 1158M-1170M (which is very close to the normal 1155M).

- The final full GC started with 1158M used and was still 1158M after.

The Memory tab in the screenshot shows:

Tenured heap (usage / alloc. max) 2,836 (104.1%) / 2,723M

Total promotion 2,048K

Now to explain briefly what occurred at 2015/05/28 04:52:59. At this point a whole bunch of configuration objects were serialised to a custom format using a StringBuilder. This resulted in a bunch of array copies which eventually resulted in the following exception at 2015/05/28 04:53:09:

java.lang.OutOfMemoryError: Java heap space

at java.util.Arrays.copyOf(Arrays.java:2367)

at java.lang.AbstractStringBuilder.expandCapacity(AbstractStringBuilder.java:130)

at java.lang.AbstractStringBuilder.ensureCapacityInternal(AbstractStringBuilder.java:114)

at java.lang.AbstractStringBuilder.append(AbstractStringBuilder.java:587)

at java.lang.StringBuilder.append(StringBuilder.java:214)

...

There are a few things I can't explain:

- Where in the GC logs would you find the used tenured memory?

- What would cause such a significant spike in the used tenured memory to cause a GC? There were only 20M of survivors so wouldn't in the worst case these all go to tenured and no more?

- Could this perhaps be explained by a humongous object allocation?

- Why was the last full GC triggered when there was (apparently) so little used heap and at afterwards it cleaned up nothing?

- If there was 3891M heap allocated and only 1158M used then why would there be an OOME?