We have a problem which seems to be caused by the constant allocation and deallocation of memory:

We have a rather complex system in which a USB device measures arbitrary points and sends the measurement data to the PC at a rate of 50k samples per second. In the software, these samples are collected per point as MeasurementTasks and processed afterwards; the calculations need even more memory on top of the raw samples.

Simplified, each MeasurementTask looks like this:

    public class MeasurementTask
    {
        public LinkedList<Sample> Samples { get; set; }
        public ComplexSample[] ComplexSamples { get; set; }
        public Complex Result { get; set; }
    }

Where Sample looks like:

    public class Sample
    {
        public ushort CommandIndex;
        public double ValueChannel1;
        public double ValueChannel2;
    }

and ComplexSample like:

    public class ComplexSample
    {
        public double Channel1Real;
        public double Channel1Imag;
        public double Channel2Real;
        public double Channel2Imag;
    }

In the calculation process, each Sample is first converted into a ComplexSample, and these are then further processed until we get our Complex Result. After these calculations are done we release all the Sample and ComplexSample instances and the GC cleans them up soon after, but this results in a constant "up and down" of the memory usage.
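To get a feeling for how much garbage one task produces, a rough measurement along these lines could be used. This is only a sketch: `AllocationProbe` and `MeasureSampleListBytes` are hypothetical names made up for illustration, the `Sample` class is repeated so the snippet compiles on its own, and the number it returns depends on the runtime and bitness.

```csharp
using System;
using System.Collections.Generic;

public class Sample
{
    public ushort CommandIndex;
    public double ValueChannel1;
    public double ValueChannel2;
}

// Hypothetical helper for illustration only, not part of our code base.
public static class AllocationProbe
{
    // Returns the approximate number of managed bytes retained by a
    // LinkedList<Sample> that holds `count` samples.
    public static long MeasureSampleListBytes(int count)
    {
        // Force a full collection first so the baseline is reasonably stable.
        long before = GC.GetTotalMemory(true);

        var samples = new LinkedList<Sample>();
        for (int i = 0; i < count; i++)
        {
            samples.AddLast(new Sample
            {
                CommandIndex = (ushort)(i % 65536),
                ValueChannel1 = i,
                ValueChannel2 = -i
            });
        }

        long after = GC.GetTotalMemory(true);

        // Keep the list reachable until after the second reading.
        GC.KeepAlive(samples);
        return after - before;
    }
}
```

Calling `AllocationProbe.MeasureSampleListBytes(300000)` gives an estimate for one task of the size mentioned in this question; the result includes the per-object and per-node overhead, not just the 18 bytes of field data per sample.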

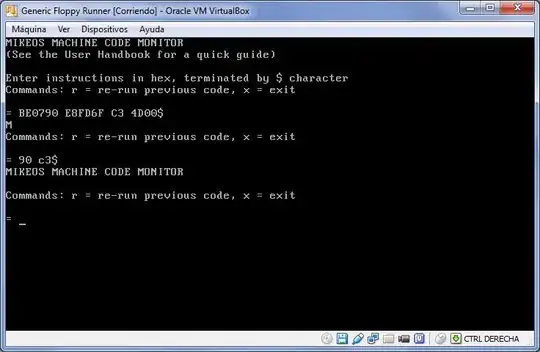

This is how it looks at the moment with each MeasurementTask containing ~300k samples:

Now we sometimes have the problem that the sample buffer in our HW device overflows, as it can only store ~5000 samples (~100 ms) and it seems the application does not always read from the device fast enough (we use BULK transfer with LibUSB/LibUSBDotNet). We tracked this problem down to this "memory up and down" based on the following facts:

- the reading from the USB device happens in its own thread which runs at ThreadPriority.Highest, so the calculations should not interfere

- CPU usage is between 1-5% on my 8-core CPU => <50% of one core

- if we have (much) faster MeasurementTasks with only a few hundred samples each, the memory goes up and down only very little and the buffer never overflows (but the number of instances/second is the same, as the device still sends 50k samples/second)

- we had a bug before, which did not release the Sample and ComplexSample instances after the calculations, so the memory only went up at ~2-3 MB/s and the buffer overflowed all the time

At the moment (after fixing the bug mentioned above) we have a direct correlation between the samples count per point and the overflows. More samples/point = higher memory delta = more overflows.

Now to the actual question:

Can this behaviour be improved (easily)?

Maybe there is a way to tell the GC/runtime to not release the memory so there is no need to re-allocate?

We also thought of an alternative approach by "re-using" the LinkedList<Sample> and ComplexSample[]: Keep a pool of such lists/arrays and instead of releasing them put them back in the pool and "change" these instances instead of creating new ones, but we are not sure this is a good idea as it adds complexity to the whole system...
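Roughly, the pooling idea would look like the following minimal sketch. `BufferPool<T>`, `Rent` and `Return` are hypothetical names made up for this illustration and not an existing class in our code base. It is also simplified: only the most recently returned array is checked for reuse.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper for illustration: a very small thread-safe pool
// that hands out arrays and takes them back for later reuse.
public sealed class BufferPool<T>
{
    private readonly Stack<T[]> _free = new Stack<T[]>();
    private readonly object _gate = new object();

    // Returns the most recently returned array if it has at least
    // minLength elements; otherwise allocates a new array.
    public T[] Rent(int minLength)
    {
        lock (_gate)
        {
            if (_free.Count > 0 && _free.Peek().Length >= minLength)
            {
                return _free.Pop();
            }
        }
        return new T[minLength];
    }

    // Puts the array back into the pool. The caller must not use it afterwards.
    public void Return(T[] buffer)
    {
        if (buffer == null) throw new ArgumentNullException(nameof(buffer));

        // Clear stale values (and references, if T is a class).
        Array.Clear(buffer, 0, buffer.Length);

        lock (_gate)
        {
            _free.Push(buffer);
        }
    }
}
```

A task would call `Rent` instead of `new ComplexSample[n]` and `Return` once its Result is calculated. Note that a rented array may be longer than requested, so the task has to track its own sample count, which is part of the added complexity we are worried about.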

But we are open to other suggestions!

UPDATE:

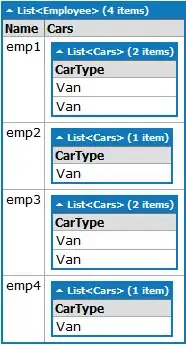

I now optimized the code base with the following improvements and did various test runs:

- converted Sample to a struct

- got rid of the LinkedList<Sample> and replaced it with a straight array (I actually had another one somewhere else that I also removed)

- several minor optimizations I found during analysis and optimization

- (optional - see below) converted ComplexSample to a struct
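In simplified form, the changed types look roughly like the sketch below. It shows the variant with both types as structs and assumes that `Complex` is `System.Numerics.Complex`; the real classes contain more than this.

```csharp
using System.Numerics;

// Sample as a value type: the array below stores the values inline,
// so there is no separate heap object per sample.
public struct Sample
{
    public ushort CommandIndex;
    public double ValueChannel1;
    public double ValueChannel2;
}

// Optional variant: ComplexSample as a value type as well.
public struct ComplexSample
{
    public double Channel1Real;
    public double Channel1Imag;
    public double Channel2Real;
    public double Channel2Imag;
}

public class MeasurementTask
{
    // A plain array instead of LinkedList<Sample>.
    public Sample[] Samples { get; set; }

    public ComplexSample[] ComplexSamples { get; set; }

    public Complex Result { get; set; }
}
```

Because array elements are variables, a struct element can still be altered in place, for example `task.ComplexSamples[i].Channel1Real = value;`. That would not compile with a `List<ComplexSample>`, whose indexer returns a copy.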

In any case it seems that the problem is gone now on my machine (long-term tests and tests on low-spec hardware will follow), but I first ran a test with both types as structs and got the following memory usage graph:

There it still was going up to ~300 MB on a regular basis (but no overflow errors anymore), but as this still seemed odd to me I did some additional tests:

Side note: Each value of each ComplexSample is altered at least once during the calculations.

1) Add a GC.Collect after a task is processed and the samples are not referenced any more:

Now it was alternating between 140 MB and 150 MB (no noticeable performance hit).
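The change for this test boils down to something like the following sketch. `FinishedTask` and `TaskCleanup.ReleaseSamples` are hypothetical stand-ins made up for illustration; the real code does this on our MeasurementTask once the Result is calculated.

```csharp
using System;

// Hypothetical stand-in for a processed MeasurementTask.
public class FinishedTask
{
    public double[] Samples;
}

public static class TaskCleanup
{
    // Drops the task's sample buffer and forces a collection right away.
    public static void ReleaseSamples(FinishedTask task)
    {
        // After this assignment the task no longer references the samples.
        task.Samples = null;

        // Forces an immediate collection of all generations.
        GC.Collect();
    }
}
```

The collection only frees the samples if nothing else references them any more, so any other reference (for example a local variable still in use) has to be gone as well.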

2) ComplexSample as a class (no GC.Collect):

Using a class it is much more "stable" at ~140-200 MB.

3) ComplexSample as a class and GC.Collect:

Now it is going "up and down" a little in the range of 135-150 MB.

Current solution:

As we are not sure this is a valid case for manually calling GC.Collect we are using "solution 2)" now and I will start running the long-term (= several hours) and low-spec hardware tests...