So i'm preforming multiple operations on the same rdd in a kafka stream. Is caching that RDD going to improve performance?

-

Another useful link: http://stackoverflow.com/a/37701793/2213164 – Stanislav May 08 '17 at 20:13

2 Answers

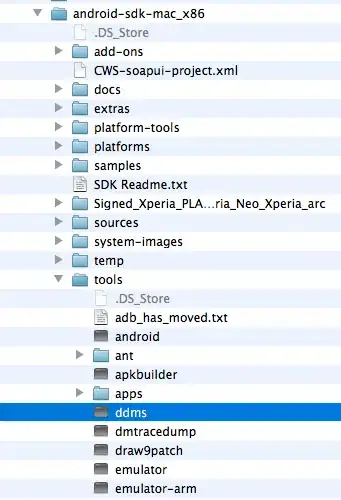

When running multiple operations on the same dstream, cache will substantially improve performance. This can be observed on the Spark UI:

Without the use of cache, each iteration on the dstream will take the same time, so the total time to process the data in each batch interval will be linear to the number of iterations on the data:

When cache is used, the first time the transformation pipeline on the RDD is executed, the RDD will be cached and every subsequent iteration on that RDD will only take a fraction of the time to execute.

(In this screenshot, the execution time of that same job was further reduced from 3s to 0.4s by reducing the number of partitions)

Instead of using dstream.cache I would recommend to use dstream.foreachRDD or dstream.transform to gain direct access to the underlying RDD and apply the persist operation. We use matching persist and unpersist around the iterative code to clean up memory as soon as possible:

dstream.foreachRDD{rdd =>

rdd.cache()

col.foreach{id => rdd.filter(elem => elem.id == id).map(...).saveAs...}

rdd.unpersist(true)

}

Otherwise, one needs to wait for the time configured on spark.cleaner.ttl to clear up the memory.

Note that the default value for spark.cleaner.ttl is infinite, which is not recommended for a production 24x7 Spark Streaming job.

- 37,100

- 11

- 88

- 115

-

2 questions: 1. is Kafa rdd really in memory or it's just the offset of the records which will only be retrieved upon actions. If this is the case, then that's why cache improve performance. 2. looks the dstream is not the original kafka rdd as the records has id attribute in your code. The original kafka rdd is (String, String) tuple. If this is the case, then cache will save the intermediate transformation, which will also improve the performance. The final improvement is probably from both – user3931226 Sep 10 '15 at 23:12

-

@user3931226 it would be better to turn that into a new question for the benefit of the whole community. – maasg Sep 11 '15 at 08:47

Spark also supports pulling data sets into a cluster-wide in-memory cache. This is very useful when data is accessed repeatedly, such as when querying a small “hot” dataset or when running an iterative algorithm like PageRank.

https://spark.apache.org/docs/latest/quick-start.html#caching

- 3,800

- 3

- 38

- 61

-

Yes but are there any benefits of doing this in a streaming job aka with dstreams that are already in memory? – ben jarman May 15 '15 at 07:34

-

caching is useful if the data in the DStream will be computed multiple times (e.g., multiple operations on the same data). For window-based operations like reduceByWindow and reduceByKeyAndWindow and state-based operations like updateStateByKey, this is implicitly true. – banjara May 15 '15 at 07:50