I have the x and y vectors given below:

x = [0 5 8 15 18 25 30 38 42 45 50];

y = [81.94 75.94 70.06 60.94 57.00 50.83 46.83 42.83 40.94 39.00 38.06];

With these values, how can I find the coefficients a and b of y = a*(b^x)?

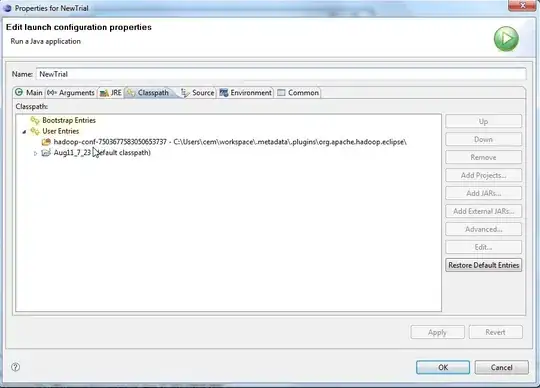

I've tried this code, but it fits y = a*e^(b*x) instead:

clear, clc, close all, format compact, format long

% enter data

x = [0 5 8 15 18 25 30 38];

y = [81.94 75.94 70.06 60.94 57.00 50.83 46.83 42.83];

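n = length(x);        % number of data points

y2 = log(y);          % linearize: log(y) = log(a) + b*x

j = sum(x);           % sum of x

k = sum(y2);          % sum of log(y)

l = sum(x.^2);        % sum of x^2

m = sum(y2.^2);       % sum of log(y)^2 (not used below)

r2 = sum(x .* y2);    % sum of x*log(y)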

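b = (n * r2 - k * j) / (n * l - j^2)   % least-squares slope of log(y) vs. x

a = exp((k-b*j)/n)                     % intercept, transformed back from log(a)

y_35 = a * exp(b * 35)                 % predicted y at x = 35 (renamed so the data vector y is not overwritten)

result_68 = log(68/a)/b                % x at which the fitted curve gives y = 68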
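Since a*(b^x) = a*e^(x*log(b)), the two forms describe the same curve, and the base can be recovered from the exponential rate as exp(rate). Below is a minimal sketch of that idea, not a definitive solution. It assumes all 11 data points from the top of the post are used (the code above uses only the first 8), it uses `polyfit` on log(y) in place of the hand-written sums, and the names `a_fit`, `b_base`, `y_fit`, and `x_68` are made up for illustration.

```matlab
% Data from the top of the post (assumption: use all 11 points)
x = [0 5 8 15 18 25 30 38 42 45 50];
y = [81.94 75.94 70.06 60.94 57.00 50.83 46.83 42.83 40.94 39.00 38.06];

% Linear least squares on the linearized model: log(y) = log(a) + x*log(b)
p = polyfit(x, log(y), 1);    % p(1) = slope = log(b), p(2) = intercept = log(a)

b_base = exp(p(1));           % base b of the model y = a*(b^x)
a_fit  = exp(p(2));           % coefficient a (model value at x = 0)

y_fit = a_fit * b_base.^x;    % fitted values; .^ is the element-wise power

% x at which the fitted curve equals 68, solved from 68 = a*(b^x)
x_68 = log(68 / a_fit) / log(b_base);
```

Note that this minimizes the error in log(y), not in y itself, so the coefficients can differ slightly from a direct nonlinear fit of y = a*(b^x).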

I know interpolation techniques, but I couldn't apply them to my solution.

Many thanks in advance!