Background:

I'm following a tutorial that imports a CSV file of approximately 324MB into MongoLab's sandbox plan (capped at 500MB), via pymongo in Python 3.4.

The file holds ~770,000 records, and after inserting ~164,000 of them I hit my quota and received:

    raise OperationFailure(error.get("errmsg"), error.get("code"), error)
    OperationFailure: quota exceeded
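For scale, here is a back-of-the-envelope calculation from the numbers above. It is only a rough sketch: it assumes records are roughly uniform in size, and that the quota measures stored data alone, which may not hold if it also counts indexes or preallocated file space.

```python
# Figures taken from the question; all are approximate.
CSV_SIZE_MB = 324          # size of the CSV file
TOTAL_RECORDS = 770000     # records in the CSV file
INSERTED_RECORDS = 164000  # records inserted before the quota error
QUOTA_MB = 500             # sandbox plan cap

# Share of the CSV that made it into the database (assumes uniform record size).
csv_mb_inserted = CSV_SIZE_MB * INSERTED_RECORDS / TOTAL_RECORDS

# Average bytes per record, in the CSV and in the space the quota allowed.
csv_bytes_per_record = CSV_SIZE_MB * 1024 * 1024 / TOTAL_RECORDS
db_bytes_per_record = QUOTA_MB * 1024 * 1024 / INSERTED_RECORDS

# How many times larger the inserted data appears once stored.
expansion = QUOTA_MB / csv_mb_inserted

print("CSV data inserted: %.0f MB" % csv_mb_inserted)
print("Bytes per record: %.0f in CSV vs %.0f in the database"
      % (csv_bytes_per_record, db_bytes_per_record))
print("Apparent expansion: %.1fx" % expansion)
```

In other words, roughly 69MB of CSV data appears to have consumed the whole 500MB quota, an apparent expansion of about 7x.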

Question:

Would it be accurate to say the JSON-like structure of NoSQL takes more space to hold the same data than a CSV file does? Or am I doing something screwy here?

Further information:

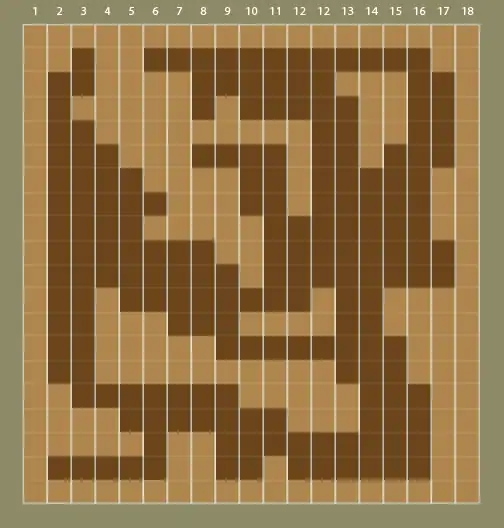

Here are the database metrics:

Here's the Python 3.4 code I used:

    import sys
    import pymongo
    import csv

    MONGODB_URI = '***credentials removed***'

    def main(args):
        client = pymongo.MongoClient(MONGODB_URI)
        # Use the database named in the connection URI
        db = client.get_default_database()
        projects = db['projects']

        with open('opendata_projects.csv') as f:
            # Each CSV row becomes a dict keyed by the header names
            records = csv.DictReader(f)
            projects.insert(records)

        client.close()

    if __name__ == '__main__':
        main(sys.argv[1:])
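To make the question concrete, here is a small standard-library sketch of the effect I'm asking about. It is not a measurement of MongoDB itself. It uses JSON as a stand-in for the stored document format (BSON will differ in the details), and the column names and rows are hypothetical, not taken from `opendata_projects.csv`. The point is only that a CSV stores the field names once in the header, while each document produced by `csv.DictReader` carries its own copy of every field name.

```python
import csv
import io
import json

# Hypothetical sample data; the real file's columns may differ.
FIELDS = ["project_id", "school_state", "total_price", "funding_status"]
ROWS = [
    ["p001", "NY", "512.30", "completed"],
    ["p002", "CA", "129.99", "expired"],
]

def csv_size(fields, rows):
    """Bytes needed to store the header plus all rows as CSV."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(fields)
    writer.writerows(rows)
    return len(buf.getvalue().encode("utf-8"))

def json_size(fields, rows):
    """Bytes needed when every row is its own document with named fields."""
    docs = (dict(zip(fields, row)) for row in rows)
    return sum(len(json.dumps(doc).encode("utf-8")) for doc in docs)

csv_bytes = csv_size(FIELDS, ROWS)
json_bytes = json_size(FIELDS, ROWS)
print("CSV bytes: ", csv_bytes)
print("JSON bytes:", json_bytes)
```

With these sample rows the per-document form comes out larger than the CSV form, because the field names repeat in every document. Whether that alone explains the size I'm seeing is what I'm unsure about.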