I have two tables : posts with 10k rows and comments and I need to select all comments for particular numbers of posts in other words implement the pagination by posts table and get all comments thereof. For that purpose I have the next query:

select * from comments c

inner join (select post_id from posts o order by post_id limit 0, 10) p

on c.post_id = p.post_id;

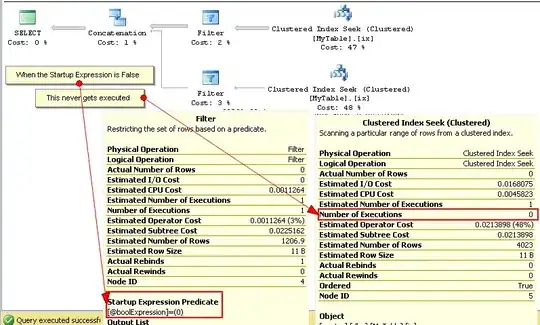

Also it is very important for me the performance of query. But the Explain of this query is very strange because LIMIT clause iterate through 9976 rows but not through 10 rows as I expect:

At the same time when I run subquery separately it works great with iterating through 10 rows as expected:

explain select post_id from posts o order by post_id limit 0, 10

Also there is indexes on posts(post_id), comments(comment_id), comments(post_id).

I don't understand what is the problem with that query so it iterate through all records in posts table. I will be very thankful if somebody help me with that issue.