I am trying to convert point clouds sampled and stored in `XYZij` data (which, according to the documentation, stores data in camera space) into a world coordinate system so that they can be merged. The frame pair I use for the Tango listener has `COORDINATE_FRAME_START_OF_SERVICE` as the base frame and `COORDINATE_FRAME_DEVICE` as the target frame.
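
For reference, the frame bookkeeping above can be written as a chain of rigid transforms. If the camera frame in which `XYZij` points are expressed is not identical to the `DEVICE` frame, a camera-space point has to pass through both transforms: `p_world = T_sos_device * T_device_camera * p_camera`. The sketch below assumes 4x4 homogeneous matrices built from an (x, y, z, w) quaternion plus a translation; the class and method names are hypothetical, and any device-to-camera offset you plug in is a placeholder, not a real Tango extrinsic.

```java
// Sketch: chain rigid transforms as 4x4 row-major homogeneous matrices.
// p_world = T_sos_device * T_device_camera * p_camera
// Hypothetical helper, not part of the Tango SDK.
final class FrameChain {

    // Build a 4x4 matrix from a unit quaternion {x, y, z, w} and a translation {x, y, z}.
    static double[][] fromPose(double[] q, double[] t) {
        double x = q[0], y = q[1], z = q[2], w = q[3];
        return new double[][] {
            {1 - 2 * (y * y + z * z), 2 * (x * y - z * w),     2 * (x * z + y * w),     t[0]},
            {2 * (x * y + z * w),     1 - 2 * (x * x + z * z), 2 * (y * z - x * w),     t[1]},
            {2 * (x * z - y * w),     2 * (y * z + x * w),     1 - 2 * (x * x + y * y), t[2]},
            {0, 0, 0, 1}
        };
    }

    // Standard 4x4 matrix product a * b (apply b first, then a).
    static double[][] multiply(double[][] a, double[][] b) {
        double[][] c = new double[4][4];
        for (int i = 0; i < 4; i++) {
            for (int j = 0; j < 4; j++) {
                for (int k = 0; k < 4; k++) {
                    c[i][j] += a[i][k] * b[k][j];
                }
            }
        }
        return c;
    }

    // Apply a rigid transform to a 3D point (implicit homogeneous coordinate 1).
    static double[] apply(double[][] m, double[] p) {
        double[] out = new double[3];
        for (int i = 0; i < 3; i++) {
            out[i] = m[i][0] * p[0] + m[i][1] * p[1] + m[i][2] * p[2] + m[i][3];
        }
        return out;
    }
}
```

The order of the product matters: the camera-to-device transform is applied to the point first, then the device pose.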

This is how I implement the transformation:

1. Retrieve the rotation quaternion from `TangoPoseData.getRotationAsFloats()` as `q_r`, and the point position from `XYZij` as `p`.
2. Apply the following rotation, where `q_mult` is a helper method computing the Hamilton product of two quaternions (I have verified this method against another math library): `p_transformed = q_mult(q_mult(q_r, p), q_r_conjugated);`
3. Add the translation retrieved from `TangoPoseData.getTranslationAsFloats()` to `p_transformed`.
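
The three steps above can be sketched in self-contained Java, making one easy-to-miss detail explicit: the component order of the quaternion. This assumes the pose rotation arrives as (x, y, z, w), which I believe is the order `TangoPoseData` documents for its rotation (worth checking for your SDK version), while the hypothetical `qMult` below expects the scalar part first. If a scalar-first product is fed the raw array unchanged, the rotation is silently wrong. The names `PoseTransform`, `qMult`, `conjugate` and `transform` are illustrative only.

```java
// Minimal sketch of steps 1-3: rotate p by q_r using q_r * p * conj(q_r),
// then add the translation. Internally quaternions are {w, x, y, z}.
// ASSUMPTION: the pose rotation arrives in {x, y, z, w} order.
final class PoseTransform {

    // Hamilton product a * b, both quaternions in {w, x, y, z} order.
    static double[] qMult(double[] a, double[] b) {
        return new double[] {
            a[0] * b[0] - a[1] * b[1] - a[2] * b[2] - a[3] * b[3],
            a[0] * b[1] + a[1] * b[0] + a[2] * b[3] - a[3] * b[2],
            a[0] * b[2] - a[1] * b[3] + a[2] * b[0] + a[3] * b[1],
            a[0] * b[3] + a[1] * b[2] - a[2] * b[1] + a[3] * b[0]
        };
    }

    // Conjugate of a {w, x, y, z} quaternion; equals the inverse for unit quaternions.
    static double[] conjugate(double[] q) {
        return new double[] {q[0], -q[1], -q[2], -q[3]};
    }

    // rotationXyzw: pose rotation as {x, y, z, w}; translation and point: {x, y, z}.
    static double[] transform(float[] rotationXyzw, float[] translation, float[] point) {
        // Reorder {x, y, z, w} -> {w, x, y, z} to match qMult's convention.
        double[] q = {rotationXyzw[3], rotationXyzw[0], rotationXyzw[1], rotationXyzw[2]};
        // Embed the point as a pure quaternion (zero scalar part).
        double[] p = {0.0, point[0], point[1], point[2]};
        double[] rotated = qMult(qMult(q, p), conjugate(q));
        // Drop the scalar part and add the translation.
        return new double[] {
            rotated[1] + translation[0],
            rotated[2] + translation[1],
            rotated[3] + translation[2]
        };
    }
}
```

As a sanity check, rotating (1, 0, 0) by 90° about z and then translating by (1, 2, 3) should give approximately (1, 3, 3).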

However, the points in `p_transformed` always end up as a clutter of partially overlapping point clouds instead of a single aligned, merged point cloud.

Am I missing anything here? Is there a conceptual mistake in the transformation?

Thanks in advance.

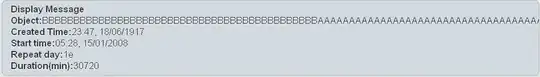

The real wall
