I'm running Entity Framework 4.1 on .NET 4.5 in an ASP.NET application on Windows Server 2008 R2. I'm using EF code-first to connect to SQL Server 2008 R2 and executing a fairly complex LINQ query that ultimately returns just a Count().

I've reproduced the problem on two different web servers, but against only one database (production, of course). It recently started happening with no application, database structure, or server changes on the web or database side.

My problem is that executing the query under certain circumstances takes a ridiculous amount of time (close to 4 minutes). I can take the actual query, pulled from SQL Profiler, and execute it in SSMS in about 1 second. This is consistent and reproducible for me, but if I change the value of one of the parameters (a "Date after 2015-01-22" parameter) to something earlier, like 2015-01-01, or later, like 2015-02-01, it works fine in EF. When I put it back to 2015-01-22, it's slow again. I can repeat this over and over.

I can then run a similar but unrelated query in EF, then come back to the original, and it runs fine this time - same exact query as before. But if I open a new browser, the cycle starts over again. That part also makes no sense - we're not doing anything to retain the data context in a user session, so I have no clue whatsoever why that comes into play.

But this all tells me that the data itself is fine.

In Profiler, when the query runs properly, it takes about a second or two, and shows about 2,000,000 in reads and about 2,000 in CPU. When it runs slowly, it takes 3.5 minutes, and the values are 300,000,000 and 200,000 - so reads are about 150 times higher and CPU is 100 times higher. Again, for the identical SQL statement.

Any suggestions on what EF might be doing differently that wouldn't show up in the query text? Is there some kind of hidden connection property which might cause a different execution plan in certain circumstances?
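
One thing I can check on that front is the session-level SET options: SSMS turns ARITHABORT on by default while ADO.NET connections leave it off, and SQL Server caches plans separately for different SET options. Below is a rough sketch of that comparison. It assumes the standard `sys.dm_exec_sessions` DMV, VIEW SERVER STATE permission, and that the web app and SSMS sessions can be told apart by `program_name`; I have not confirmed this is the cause.

```sql
-- List user sessions with the SET options that affect plan caching,
-- so the web application's connection can be compared with the SSMS one.
SELECT s.session_id,
       s.program_name,
       s.arithabort,
       s.ansi_nulls,
       s.ansi_padding,
       s.ansi_warnings,
       s.quoted_identifier,
       s.concat_null_yields_null
FROM sys.dm_exec_sessions AS s
WHERE s.is_user_process = 1
ORDER BY s.program_name, s.session_id;
```

If the options differ, running `SET ARITHABORT OFF;` in the SSMS window before the query should make SSMS use the same kind of plan the application gets, which would at least make the slow case reproducible outside EF.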

EDIT

EF builds this query as one giant string with the parameter value inlined in the text rather than passed as a SQL parameter:

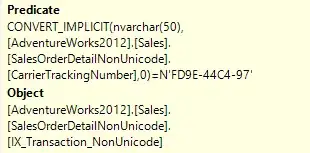

    exec sp_executesql N'SELECT [GroupBy1].[A1] AS [C1]
    FROM (
    SELECT COUNT(1) AS [A1]
    ...
    AND ([Extent1].[Added_Time] >= convert(datetime2, ''2015-01-22 00:00:00.0000000'', 121))
    ...
    ) AS [GroupBy1]'

EDIT

I'm not adding this as an answer since it doesn't actually address the underlying issue, but this did end up getting resolved by rebuilding indexes and recomputing statistics. That hadn't been done in longer than usual, and it seems to have cleared up whatever caused the issue.
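
For anyone who lands here later, this is a minimal sketch of that kind of maintenance, not the exact script that was run. `dbo.MyTable` is a placeholder name; substitute each table involved in the slow query.

```sql
-- Placeholder table name: replace dbo.MyTable with the real table(s).

-- Rebuild every index on the table. A rebuild also refreshes the
-- statistics that belong to those indexes.
ALTER INDEX ALL ON dbo.MyTable REBUILD;

-- Refresh the remaining statistics (including column statistics, which
-- an index rebuild does not touch) by scanning all rows.
UPDATE STATISTICS dbo.MyTable WITH FULLSCAN;
```

Updating statistics also causes cached plans that depend on them to be recompiled, so this may have fixed the problem simply by discarding a bad cached plan.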

I'll keep reading up on some of the links here in case this happens again, but since it's all working now and no longer reproducible, I don't know if I'll ever know for sure exactly what it was doing.

Thanks for all the ideas.