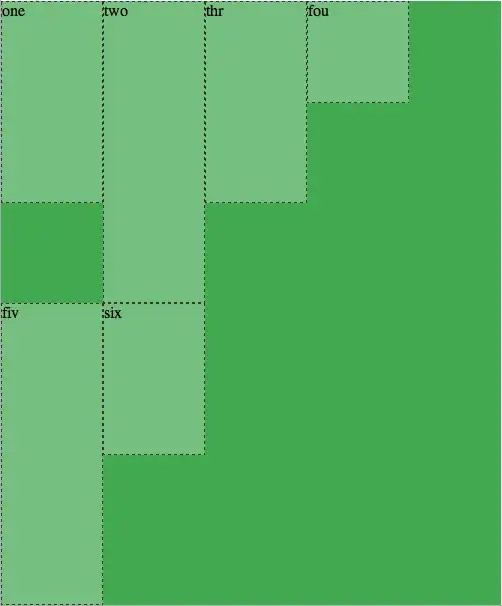

I have 303 data points in the training set (see the picture). Many of these points have a Y value of 0.

Now I want to train a GBM model to predict the Y value. Here is my code:

```r
library(caret)

# Response plus predictors (some of them log-transformed)
train.subset <- data.frame(yval          = train$yval,
                           hour          = train$hour,
                           daymoment     = train$daymoment,
                           year          = train$year,
                           log.windspeed = log(train$windspeed + 1),
                           weather       = train$weather,
                           workingday    = train$workingday,
                           log.temp      = log(train$temp + 1),
                           log.atemp     = log(train$atemp + 1),
                           log.humidity  = log(train$humidity + 1))

# Hold out 15% of the rows as a cross-validation set
inTrain <- createDataPartition(train.subset$yval, p = .85, list = FALSE)
train.registered <- train.subset[inTrain, ]
cv.registered    <- train.subset[-inTrain, ]

fitControl <- trainControl(method  = "repeatedcv",
                           number  = 10,   # 10-fold CV
                           repeats = 10)   # repeated ten times

gbmGrid <- expand.grid(interaction.depth = c(1, 5, 9),
                       n.trees           = (5:25) * 50,
                       shrinkage         = 0.1,
                       n.minobsinnode    = 10)  # gbm's default; recent caret versions require this column

fit.registered <- train(yval ~ ., data = train.registered, method = "gbm",
                        trControl = fitControl, verbose = FALSE,
                        tuneGrid = gbmGrid)

prediction.registered <- predict(fit.registered, newdata = cv.registered)

# Replace negative predictions with the smallest positive prediction
prediction.registered[prediction.registered < 0] <-
  min(prediction.registered[prediction.registered > 0])

RMSE <- sqrt(mean((prediction.registered - cv.registered$yval)^2))
RMSE
```
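The code above replaces negative predictions with the smallest positive prediction. Because many observed values are exactly 0, one alternative worth trying (a suggestion, not part of the original setup) is to clip negatives at 0 instead. A minimal base-R sketch comparing both rules on a small made-up vector; `clip_to_min_positive`, `clip_to_zero` and `rmse` are hypothetical helper names:

```r
# Hypothetical helpers; the vectors in the usage lines are made up.

# What the original code does: negatives become the smallest positive prediction
clip_to_min_positive <- function(pred) {
  pred[pred < 0] <- min(pred[pred > 0])
  pred
}

# Alternative: negatives become 0, which matches the many zero targets
clip_to_zero <- function(pred) pmax(pred, 0)

rmse <- function(pred, obs) sqrt(mean((pred - obs)^2))

pred <- c(-3, 2, 15, 40)
obs  <- c(0, 0, 12, 45)
rmse(clip_to_min_positive(pred), obs)
rmse(clip_to_zero(pred), obs)
```

With these toy numbers clipping at 0 gives the lower error, but on the real data the difference is probably small unless many predictions are negative.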

This gives a fairly high RMSE of about 28.
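Whether an RMSE of about 28 is "high" depends on how spread out yval is. One way to put it in context (a suggestion, not something from the original post) is to compare it with a naive model that always predicts the training mean. A base-R sketch; `baseline_rmse` is a hypothetical helper, and the usage line assumes the `train.registered` and `cv.registered` data frames from the code above:

```r
# RMSE of predictions against observed values
rmse <- function(pred, obs) sqrt(mean((pred - obs)^2))

# Hypothetical helper: RMSE of a constant prediction equal to the training mean
baseline_rmse <- function(train_y, test_y) {
  rmse(rep(mean(train_y), length(test_y)), test_y)
}

# Usage, assuming the objects created in the question:
# baseline_rmse(train.registered$yval, cv.registered$yval)
```

If the baseline is not much larger than ~28, the predictors explain relatively little of the variance in yval.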

Here is the plot that shows both predicted and actual yval for the cross-validation set.

I don't understand why the error is so large for such a relatively simple curve. Any ideas? Should I perhaps try another package, using the tuning parameters found by caret?
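On the second question: caret stores the selected tuning parameters in `fit.registered$bestTune`, so they can be passed to `gbm::gbm()` directly. A minimal sketch, assuming the gbm package is installed, a Gaussian loss, and a `bestTune` with `n.trees`, `interaction.depth`, `shrinkage` and possibly `n.minobsinnode` (older caret versions did not tune it, hence the fallback to gbm's default of 10). `refit_gbm` is a hypothetical helper. Note that `fit.registered$finalModel` is already a gbm fit with these parameters, so refitting in another package is likely to behave much the same rather than remove the error.

```r
# Hypothetical helper: refit with the tuning values chosen by caret::train,
# calling gbm::gbm() directly on the data frame.
refit_gbm <- function(best, train_df, test_df, formula = yval ~ .) {
  # Older caret versions did not tune n.minobsinnode; fall back to gbm's default
  min_node <- if ("n.minobsinnode" %in% names(best)) best$n.minobsinnode else 10
  fit <- gbm::gbm(formula,
                  data              = train_df,
                  distribution      = "gaussian",
                  n.trees           = best$n.trees,
                  interaction.depth = best$interaction.depth,
                  shrinkage         = best$shrinkage,
                  n.minobsinnode    = min_node,
                  verbose           = FALSE)
  # predict.gbm needs the number of trees to use
  predict(fit, newdata = test_df, n.trees = best$n.trees)
}

# Usage, assuming the objects from the question:
# pred <- refit_gbm(fit.registered$bestTune, train.registered, cv.registered)
# sqrt(mean((pred - cv.registered$yval)^2))
```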

In case it helps, here is the relative influence of each variable:

```
> summary(fit.registered)
                        var   rel.inf
hour                   hour 23.385420
log.atemp         log.atemp 12.959972
daymoment.C     daymoment.C 11.605700
log.humidity   log.humidity 10.972162
log.windspeed log.windspeed  9.627754
daymoment.L     daymoment.L  7.517074
daymoment^4     daymoment^4  4.658695
log.temp           log.temp  4.567798
workingday       workingday  4.135300
daymoment.Q     daymoment.Q  3.766462
year                   year  3.763452
weather             weather  3.040211
```
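The `daymoment.L`, `daymoment.Q`, `daymoment.C` and `daymoment^4` rows suggest that `daymoment` is an ordered factor with five levels: caret's formula interface expands factors with R's default contrasts, and for ordered factors those are orthogonal polynomial contrasts (linear, quadratic, cubic, quartic). The base-R sketch below reproduces that naming with a made-up factor; the level names are placeholders, not the real `daymoment` values.

```r
# Made-up ordered factor with five placeholder levels
daymoment <- factor(c("a", "b", "c", "d", "e"),
                    levels = c("a", "b", "c", "d", "e"), ordered = TRUE)

# Ordered factors default to contr.poly
colnames(model.matrix(~ daymoment))
# "(Intercept)" "daymoment.L" "daymoment.Q" "daymoment.C" "daymoment^4"

# An unordered copy gets one treatment dummy per non-reference level instead
daymoment_unordered <- factor(daymoment, ordered = FALSE)
colnames(model.matrix(~ daymoment_unordered))
```

Because the factor is split across four columns, its influence is spread out in the summary: the four `daymoment` rows add up to about 27.5, more than `hour` on its own.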

UPDATE: