I was wondering why my CouchDB database was growing too fast, so I wrote a little test script. The script changes an attribute of a CouchDB document 1200 times and records the size of the database after each change. After these 1200 writes, the database is compacted and its size is measured again. Finally, the script plots the database size against the revision number. The benchmark is run twice:

- The first time, the default limit on document revisions is used (_revs_limit = 1000).

- The second time the number of document revisions is set to 1.
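For reference, the procedure can be sketched roughly as follows. This is not my exact script, only a minimal reconstruction using the Python standard library. The server URL, the database name `revs_test`, the document id `doc`, and the field name `counter` are placeholders, and it assumes the database does not exist yet and that the server accepts unauthenticated requests. The file size is read from `sizes.file` where available, falling back to the older `disk_size` field.

```python
import json
import time
import urllib.request

BASE = "http://localhost:5984"  # assumed server address
DB = "revs_test"                # placeholder database name


def http(method, path, body=None):
    """Send one JSON request to CouchDB and return the decoded reply."""
    data = None if body is None else json.dumps(body).encode()
    req = urllib.request.Request(
        BASE + path,
        data=data,
        method=method,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode())


def file_size(info):
    """Extract the on-disk size from a GET /{db} reply."""
    sizes = info.get("sizes")
    if sizes:
        return sizes["file"]   # newer CouchDB releases
    return info["disk_size"]   # older CouchDB releases


def run_benchmark(revs_limit, writes=1200, call=http):
    """Update one document `writes` times, then compact.

    Returns the file size after every write, followed by the
    size after compaction as the last element.
    """
    call("PUT", f"/{DB}")                          # create the database
    call("PUT", f"/{DB}/_revs_limit", revs_limit)  # set the revision limit
    rev = None
    sizes = []
    for i in range(writes):
        doc = {"counter": i}
        if rev is not None:
            doc["_rev"] = rev                      # update needs the current rev
        rev = call("PUT", f"/{DB}/doc", doc)["rev"]
        sizes.append(file_size(call("GET", f"/{DB}")))
    call("POST", f"/{DB}/_compact")                # trigger compaction
    while call("GET", f"/{DB}")["compact_running"]:
        time.sleep(0.1)                            # wait until it finishes
    sizes.append(file_size(call("GET", f"/{DB}")))
    return sizes
```

Calling `run_benchmark(1000)` and `run_benchmark(1)` against two fresh databases would correspond to the two runs; the returned list is what gets plotted.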

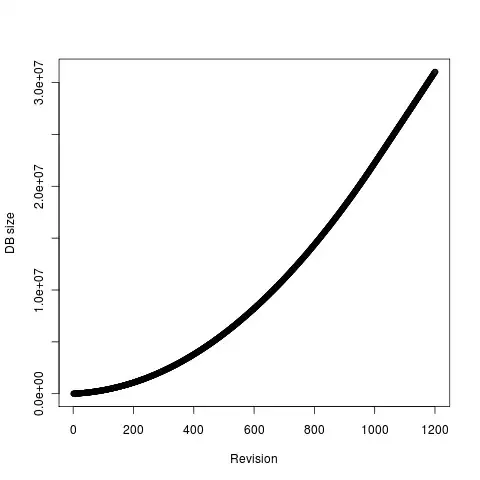

The first run produces the following plot

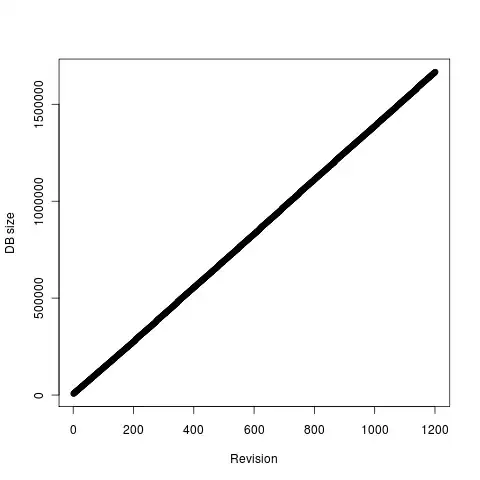

The second run produces this plot

For me this is quite unexpected behavior. In the first run I would have expected linear growth, as every change produces a new revision. Once the 1000 revisions are reached, the size should stay constant, as older revisions are discarded. After the compaction the size should fall significantly.

In the second run, the first revision should result in a certain database size that is then kept during the following writes, as every new revision leads to the deletion of the previous one.
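To make my expectation concrete, here is a tiny model of it. This describes only what I assumed would happen, not how CouchDB actually behaves; `bytes_per_rev` is a made-up constant standing in for the space one stored revision takes.

```python
def expected_sizes(writes, revs_limit, bytes_per_rev):
    """Size I expected after each write: linear growth, then a plateau.

    Assumes every revision costs the same space and that revisions
    beyond `revs_limit` are dropped immediately.
    """
    return [min(i, revs_limit) * bytes_per_rev for i in range(1, writes + 1)]
```

With `revs_limit=1000` this grows linearly for 1000 writes and then stays flat; with `revs_limit=1` it is flat from the first write on.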

I could understand a little bit of overhead being needed to manage the changes, but this growth behavior seems weird to me. Can anybody explain this phenomenon, or correct the assumptions that led to my wrong expectations?