Yes, you have to build the tree from nodes with 3 children. Why 3? Nothing is special about it: you can have n-ary Huffman coding using nodes with n children. For n=3 the tree looks something like this:

            *
          / | \
         *  *  *
        /|\
       * * *

Huffman Algorithm for Ternary Codewords

The algorithm is given below for easy reference.

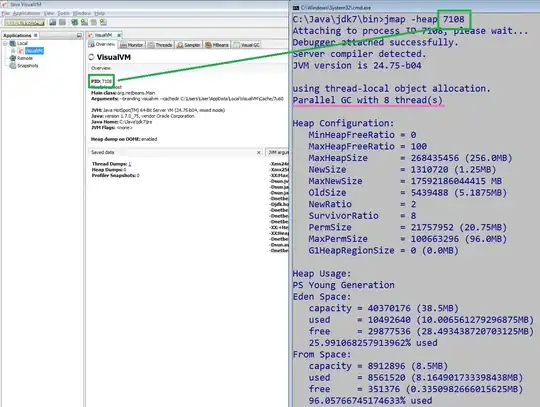

    HUFFMAN_TERNARY(C)
    {
        IF |C| IS EVEN
            THEN ADD DUMMY CHARACTER Z WITH FREQUENCY 0 TO C.
        N=|C|
        Q=C;   //WE ARE BASICALLY HEAPIFYING THE CHARACTERS
        FOR I=1 TO floor(N/2)
        {
            ALLOCATE NEW_NODE;
            LEFT[NEW_NODE]  = U = EXTRACT_MIN(Q)
            MID[NEW_NODE]   = V = EXTRACT_MIN(Q)
            RIGHT[NEW_NODE] = W = EXTRACT_MIN(Q)
            F[NEW_NODE]=F[U]+F[V]+F[W];
            INSERT(Q,NEW_NODE);
        }
        RETURN EXTRACT_MIN(Q);
    } //END-OF-ALGO
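The pseudocode above can be sketched in Python roughly as follows. This is only an illustrative version, not the textbook's code: the function names `huffman_ternary` and `codewords`, the nested-tuple node layout, and the use of `None` as the dummy symbol are all assumptions made for this example.

```python
import heapq
from itertools import count


def huffman_ternary(freqs):
    """Build a ternary Huffman tree from a dict {symbol: frequency}.

    A leaf is (freq, symbol); an internal node is (freq, [c0, c1, c2]).
    """
    tie = count()  # tie-breaker so the heap never compares nodes directly
    heap = [(f, next(tie), (f, sym)) for sym, f in freqs.items()]
    if len(heap) % 2 == 0:
        # Dummy character with frequency 0 (hypothetical marker: None).
        heap.append((0, next(tie), (0, None)))
    heapq.heapify(heap)
    while len(heap) > 1:
        # Take the three lightest nodes and merge them under a new node.
        children = [heapq.heappop(heap)[2] for _ in range(3)]
        total = sum(child[0] for child in children)
        heapq.heappush(heap, (total, next(tie), (total, children)))
    return heap[0][2]


def codewords(node, prefix=""):
    """Map each real symbol to its codeword over the digits 0, 1, 2."""
    freq, payload = node
    if isinstance(payload, list):  # internal node
        codes = {}
        for digit, child in enumerate(payload):
            codes.update(codewords(child, prefix + str(digit)))
        return codes
    # Leaf: skip the dummy, which gets no codeword.
    return {} if payload is None else {payload: prefix}


root = huffman_ternary({"a": 5, "b": 9, "c": 12, "d": 13, "e": 16, "f": 45})
codes = codewords(root)
```

The `while len(heap) > 1` loop plays the role of the `FOR I=1 TO floor(N/2)` loop: with an odd number of nodes both stop after the same number of merges.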

Why are we adding a dummy character? To make the number of nodes odd. Why odd? Because we want to leave the for loop with exactly one node in Q.

Why floor(N/2)?

In the first iteration we take 3 nodes and replace them with 1 node, so N-2 nodes remain.

Every later iteration does the same: it takes 3 nodes and puts back 1, so the count drops by 2 each time. Because N is odd after the dummy is added, the count stays odd and goes N, N-2, ..., 3, 1; the queue can never be left with exactly 2 nodes. Reaching 1 node takes (N-1)/2 iterations, which equals floor(N/2) for odd N.

Check it yourself on paper with a sample character set and you will see.
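If you prefer to check the iteration count by machine rather than on paper, a small sketch like the following counts the merges. The helper name `merges_needed` is made up for this illustration.

```python
def merges_needed(n):
    """Count 3-for-1 merges until one node remains, padding an even n."""
    if n % 2 == 0:
        n += 1  # the dummy character
    merges = 0
    while n > 1:
        n -= 2  # three nodes out, one node in
        merges += 1
    return n, merges


results = {}
for n in range(1, 12):
    padded = n + 1 if n % 2 == 0 else n
    remaining, merges = merges_needed(n)
    results[n] = (remaining, merges, padded // 2)
```

For every size tried, `remaining` is 1 and `merges` equals `floor(N/2)` of the padded size.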

CORRECTNESS

I am taking as reference "Introduction to Algorithms" by Cormen, Leiserson, Rivest and Stein.

Proof: The step by step mathematical proof is too long to post here but it is quite similar to the proof given in the book.

Idea

Any optimal tree has the three lowest frequencies at the lowest level. (We have to prove this, by contradiction.) Suppose it is not the case. Then we could swap a leaf with a higher frequency on the lowest level with one of the three lowest-frequency leaves and obtain an average length that is no larger, and strictly smaller if the frequencies differ. Without loss of generality, we can assume that the three lowest frequencies are children of the same node (among leaves on the same level, the average length does not change no matter where the frequencies are placed). Their codewords then differ only in the last digit (0, 1 or 2).

As with binary codewords, we contract the three nodes into a new character whose frequency is the total of the three characters' frequencies. As with binary Huffman codes, the cost of the optimal tree is the cost of the tree with the three symbols contracted plus the cost of the eliminated subtree that held the three nodes before contraction. Since we have shown that this subtree can be assumed present in the final optimal tree, it is enough to optimize the tree with the newly created contracted node.

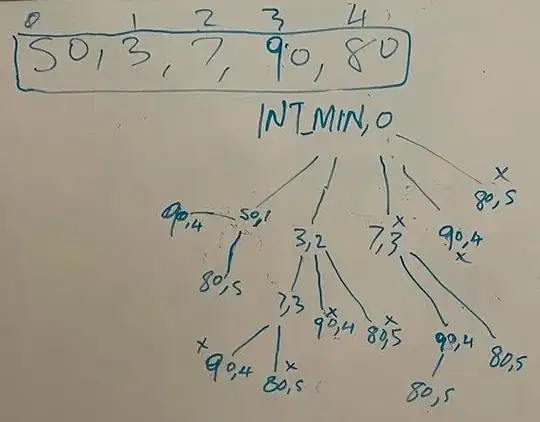

Example

Suppose the character set has frequencies 5,9,12,13,16,45.

Now N=6, which is even, so add a dummy character with freq=0.

N=7 now and the frequencies in C are 0,5,9,12,13,16,45.

Using the min-priority queue, extract 3 values: 0, then 5, then 9.

Add them and insert a new character with freq=0+5+9=14 into the priority queue. Continue this way.

The tree will look like this:

                100
              /  |  \
            39   16   45      step-3
          /  |  \
        14   12   13          step-2
       / | \
      0  5  9                 step-1
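The three steps of this example can be replayed with a few lines of Python. This is a sketch that tracks only the frequencies, not the tree shape; the function name `ternary_merge_sums` is invented for the illustration.

```python
import heapq


def ternary_merge_sums(freqs):
    """Return the frequency of each new node, in the order it is created."""
    heap = list(freqs)
    if len(heap) % 2 == 0:
        heap.append(0)  # dummy character with frequency 0
    heapq.heapify(heap)
    sums = []
    while len(heap) > 1:
        total = sum(heapq.heappop(heap) for _ in range(3))
        sums.append(total)
        heapq.heappush(heap, total)
    return sums


steps = ternary_merge_sums([5, 9, 12, 13, 16, 45])
print(steps)       # [14, 39, 100]
print(sum(steps))  # 153
```

The sum of the internal node frequencies, 153, is also the cost of the tree (frequency times depth, summed over the leaves), because each leaf is counted once for every internal node above it.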

Finally Prove it

I will now closely mimic the proof in Cormen.

Lemma 1. Let C be an alphabet in which each character d belonging to C has frequency d.freq. Let x, y and z be three characters in C having the lowest frequencies. Then there exists an optimal prefix code for C in which the codewords for x, y and z have the same length and differ only in the last digit.

Proof:

Idea

First consider any tree T representing an arbitrary optimal prefix code.

Then we will modify it into a tree representing another optimal prefix code in which the characters x,y,z appear as sibling leaves at the maximum depth.

If we can construct such a tree, then the codewords for x,y and z will have the same length and differ only in the last digit.

Proof--

- Let a,b,c be three characters that are sibling leaves of maximum depth in T.

- Without loss of generality, we assume that

a.freq <= b.freq <= c.freq and x.freq <= y.freq <= z.freq.

Since x.freq, y.freq and z.freq are the 3 lowest leaf frequencies, in order, and a.freq, b.freq and c.freq are three arbitrary frequencies, in order, we have x.freq <= a.freq, y.freq <= b.freq and z.freq <= c.freq.

In the remainder of the proof we can have x.freq=a.freq or y.freq=b.freq or z.freq=c.freq.

But if x.freq=c.freq, then all of them are the same. WHY??

Let's see. The chain x.freq <= a.freq <= b.freq <= c.freq = x.freq forces a.freq=b.freq=c.freq=x.freq. Then y.freq <= b.freq = x.freq and z.freq <= c.freq = x.freq, and together with x.freq <= y.freq <= z.freq this gives y.freq=z.freq=x.freq. So all six frequencies are equal, and the lemma is trivially true.

The cases x.freq=b.freq and y.freq=c.freq similarly force ties (a.freq=b.freq=x.freq=y.freq and b.freq=c.freq=y.freq=z.freq respectively), and exchanging characters of equal frequency never changes the cost. Thus we will assume

x!=b and x!=c.

T1

            *           |
          / | \         |
         *  *  x        +---d(x)
          / | \         |
         y  *  z        +---d(y) or d(z)
           /|\          |
          a b c         +---d(a) or d(b) or d(c) actually d(a)=d(b)=d(c)

T2 (x and a exchanged)

            *
          / | \
         *  *  a
          / | \
         y  *  z
           /|\
          x b c

T3 (y and b exchanged)

            *
          / | \
         *  *  a
          / | \
         b  *  z
           /|\
          x y c

T4 (z and c exchanged)

            *
          / | \
         *  *  a
          / | \
         b  *  c
           /|\
          x y z

For T1: costt1 = x.freq*d(x) + cost_of_other_nodes + y.freq*d(y) + z.freq*d(z) + a.freq*d(a) + b.freq*d(b) + c.freq*d(c)

For T2: costt2 = x.freq*d(a) + cost_of_other_nodes + y.freq*d(y) + z.freq*d(z) + a.freq*d(x) + b.freq*d(b) + c.freq*d(c)

Here every depth d(.) is measured in T1.

costt1-costt2 = x.freq*[d(x)-d(a)] + a.freq*[d(a)-d(x)]

= (a.freq-x.freq)*(d(a)-d(x))

>= 0

because a.freq >= x.freq (x has a lowest frequency) and d(a) >= d(x) (a is a leaf of maximum depth).

So costt1>=costt2. --->(1)

Similarly we can show costt2 >= costt3 --->(2)

And costt3 >= costt4 --->(3)

From (1),(2) and (3) we get

costt1>=costt4. -->(4)

But T1 is optimal.

So costt1<=costt4 -->(5)

From (4) and (5) we get costt1=costt4.

So T4 is an optimal tree in which x, y and z appear as sibling leaves at maximum depth, from which the lemma follows.
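To see the exchange argument with numbers, here is a small sketch. The frequencies and depths are made-up values chosen to match the shape of T1 (x at depth 1, y and z at depth 2, a, b, c at depth 3), and the helpers `cost` and `swap_depths` are hypothetical names. Only the six named characters are counted, since the other nodes contribute the same amount to every tree.

```python
def cost(freq, depth):
    """Sum of frequency times depth over the named characters."""
    return sum(freq[ch] * depth[ch] for ch in freq)


def swap_depths(depth, p, q):
    """Return a copy of depth with the positions of p and q exchanged."""
    new = dict(depth)
    new[p], new[q] = depth[q], depth[p]
    return new


# Assumed sample values: x, y, z have the three lowest frequencies.
freq = {"x": 1, "y": 2, "z": 3, "a": 4, "b": 6, "c": 7}
depth_t1 = {"x": 1, "y": 2, "z": 2, "a": 3, "b": 3, "c": 3}

depth_t2 = swap_depths(depth_t1, "x", "a")  # T1 -> T2
depth_t3 = swap_depths(depth_t2, "y", "b")  # T2 -> T3
depth_t4 = swap_depths(depth_t3, "z", "c")  # T3 -> T4

costs = [cost(freq, d) for d in (depth_t1, depth_t2, depth_t3, depth_t4)]
print(costs)  # [62, 56, 52, 48]

# The first drop matches (a.freq - x.freq) * (d(a) - d(x)).
drop = (freq["a"] - freq["x"]) * (depth_t1["a"] - depth_t1["x"])
print(costs[0] - costs[1], drop)  # 6 6
```

In this sample the costs strictly decrease, so a tree shaped like T1 with these values could not have been optimal in the first place. For an optimal T1 every exchange must leave the cost unchanged, which is exactly what steps (4) and (5) conclude.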

Lemma-2

Let C be a given alphabet with frequency c.freq defined for each character c belonging to C. Let x, y, z be three characters in C with minimum frequency. Let C1 be the alphabet C with the characters x, y and z removed and a new character z1 added, so that C1 = C - {x,y,z} union {z1}. Define freq for C1 as for C, except that z1.freq=x.freq+y.freq+z.freq. Let T1 be any tree representing an optimal prefix code for the alphabet C1. Then the tree T, obtained from T1 by replacing the leaf node for z1 with an internal node having x, y and z as children, represents an optimal prefix code for the alphabet C.

Proof.:

We are making a transition from T1 to T.

So we must find a way to express the cost of T, i.e. costt, in terms of costt1.

              *                          *
            / | \                      / | \
           *  *  *                    *  *  *
            / | \                      / | \
           *  *  *       ---->        *  z1 *
                                        /|\
                                       x y z

For each c belonging to (C-{x,y,z}), dT(c)=dT1(c). [dT and dT1 are the depths in the trees T and T1]

Hence c.freq*dT(c)=c.freq*dT1(c).

Since dT(x)=dT(y)=dT(z)=dT1(z1)+1,

we have `x.freq*dT(x)+y.freq*dT(y)+z.freq*dT(z)=(x.freq+y.freq+z.freq)(dT1(z1)+1)`

= `z1.freq*dT1(z1)+x.freq+y.freq+z.freq`

Adding to both sides the cost of the other nodes, which is the same in T and T1:

`x.freq*dT(x)+y.freq*dT(y)+z.freq*dT(z)+cost_of_other_nodes = z1.freq*dT1(z1)+x.freq+y.freq+z.freq+cost_of_other_nodes`

So costt=costt1+x.freq+y.freq+z.freq

or equivalently

costt1=costt-x.freq-y.freq-z.freq ---->(1)
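Identity (1) can be checked on the example tree from earlier (frequencies 0,5,9,12,13,16,45), taking x, y, z to be the leaves with frequencies 0, 5 and 9. The leaf names such as `f12` and the `cost` helper below are made up for this sketch.

```python
def cost(freq, depth):
    """Sum of frequency times depth over all leaves."""
    return sum(freq[ch] * depth[ch] for ch in freq)


# T: the example tree; each leaf is named after its frequency.
freq_t = {"f0": 0, "f5": 5, "f9": 9, "f12": 12, "f13": 13, "f16": 16, "f45": 45}
depth_t = {"f0": 3, "f5": 3, "f9": 3, "f12": 2, "f13": 2, "f16": 1, "f45": 1}

# T1: the same tree with x=f0, y=f5, z=f9 contracted into the leaf z1.
freq_t1 = {"z1": 0 + 5 + 9, "f12": 12, "f13": 13, "f16": 16, "f45": 45}
depth_t1 = {"z1": 2, "f12": 2, "f13": 2, "f16": 1, "f45": 1}

cost_t = cost(freq_t, depth_t)     # 153
cost_t1 = cost(freq_t1, depth_t1)  # 139
print(cost_t - cost_t1)            # 14, which is 0 + 5 + 9
```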

We now prove the lemma by contradiction. Suppose that T does not represent an optimal prefix code for C. Then there exists an optimal tree T2 such that costt2 < costt. Without loss of generality (by Lemma 1), T2 has x, y and z as siblings.

Let T3 be the tree T2 with the common parent of x, y and z replaced by a leaf z1 with frequency z1.freq=x.freq+y.freq+z.freq. Then

costt3 = costt2-x.freq-y.freq-z.freq

< costt-x.freq-y.freq-z.freq

= costt1 (from (1))

yielding a contradiction to the assumption that T1 represents an optimal prefix code for C1. Thus, T must represent an optimal prefix code for the alphabet C.

-Proved.

Procedure HUFFMAN_TERNARY produces an optimal prefix code.

Proof: Immediate from Lemmas 1 and 2.
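As a sanity check on the claim (not a substitute for the proof), the greedy cost can be compared with a brute-force search on small inputs. The sketch below relies on a fact that is not part of this answer: by the Kraft inequality, a ternary prefix code with codeword lengths l_1..l_n exists exactly when the sum of 3^(-l_i) is at most 1. The function names are invented for the illustration.

```python
import heapq
from itertools import product


def huffman_cost(freqs):
    """Cost of the ternary Huffman tree: sum of all internal node weights."""
    heap = list(freqs)
    if len(heap) % 2 == 0:
        heap.append(0)  # dummy character with frequency 0
    heapq.heapify(heap)
    cost = 0
    while len(heap) > 1:
        total = sum(heapq.heappop(heap) for _ in range(3))
        cost += total
        heapq.heappush(heap, total)
    return cost


def brute_force_cost(freqs):
    """Cheapest length assignment that satisfies the ternary Kraft inequality."""
    n = len(freqs)
    max_len = n  # assumed bound: longer codewords are never needed
    best = None
    for lengths in product(range(1, max_len + 1), repeat=n):
        # Kraft sum scaled by 3**max_len so the test stays in integers.
        if sum(3 ** (max_len - l) for l in lengths) <= 3 ** max_len:
            c = sum(f * l for f, l in zip(freqs, lengths))
            best = c if best is None else min(best, c)
    return best


samples = [[5, 9, 12, 13, 16, 45], [5, 9, 12, 13, 16], [1, 2, 3, 4], [1, 1, 1]]
pairs = [(huffman_cost(s), brute_force_cost(s)) for s in samples]
```

The search is exponential in the alphabet size, so it is only practical for a handful of characters.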

NOTE: Terminology is from Introduction to Algorithms, 3rd edition, by Cormen, Leiserson, Rivest and Stein.