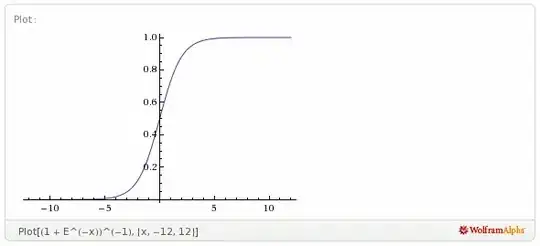

If you have a lot of nodes where the value of x is outside the -10..+10 box, you can skip calculating those values altogether, e.g., like so:

    if( x < -10 )
        y = 0;
    else if( x > 10 )
        y = 1;
    else
        y = 1 / (1 + Math.exp(-x));
    return y;

Of course, this incurs the overhead of the conditional checks for EVERY calculation, so it's only worthwhile if you have lots of saturated nodes.
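To get a feel for how much accuracy the shortcut gives up, here is a minimal sketch in JavaScript that wraps the snippet above in a function and compares it with the plain sigmoid. The names `sigmoidExact`, `sigmoidClamped` and `worstCaseError` are made up for illustration, and the -10/+10 cutoffs are the ones from the snippet above.

```javascript
// Plain logistic sigmoid, used as the reference.
function sigmoidExact(x) {
  return 1 / (1 + Math.exp(-x));
}

// Clamped version: skips exp() entirely when x is outside the -10..+10 box.
function sigmoidClamped(x) {
  if (x < -10) return 0;
  if (x > 10) return 1;
  return sigmoidExact(x);
}

// The shortcut is least accurate right at the edge of the box, so the
// exact value at x = -10 bounds the error (by symmetry the same bound
// holds at x = +10).
const worstCaseError = sigmoidExact(-10); // about 4.54e-5
console.log(worstCaseError);
```

So with these cutoffs the clamped result is never off by more than roughly 0.00005. Whether that is acceptable depends on your net.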

Another thing worth mentioning: if you are using backpropagation and have to deal with the slope of the function, it's better to compute it in pieces rather than 'as written'.

I can't recall the slope at the moment, but here's what I'm talking about, using a bipolar sigmoid as an example. Rather than computing it this way

    y = (1 - Math.exp(-x)) / (1 + Math.exp(-x));

which hits exp() twice, you can cache the costly calculation in a temporary variable, like so

    temp = Math.exp(-x);
    y = (1 - temp) / (1 + temp);

There are lots of places to put this sort of thing to use in BP nets.
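As one such place, here is a rough sketch of getting the slope almost for free once the output is known. It relies on two standard identities that are not from the answer above: for the bipolar sigmoid the slope is (1 - y*y) / 2, and for the ordinary logistic sigmoid it is y * (1 - y). The function names are hypothetical.

```javascript
// Bipolar sigmoid and its slope, using a single exp() call.
function bipolarSigmoidWithSlope(x) {
  const temp = Math.exp(-x);          // the only expensive call
  const y = (1 - temp) / (1 + temp);  // same cached form as above
  const slope = 0.5 * (1 - y * y);    // dy/dx written in terms of y
  return { y, slope };
}

// Same idea for the ordinary (0..1) logistic sigmoid.
function logisticWithSlope(x) {
  const y = 1 / (1 + Math.exp(-x));
  const slope = y * (1 - y);          // dy/dx written in terms of y
  return { y, slope };
}

console.log(bipolarSigmoidWithSlope(0)); // y is 0, slope is 0.5
console.log(logisticWithSlope(0));       // y is 0.5, slope is 0.25
```

In a BP net you would typically keep y from the forward pass and reuse it in the backward pass, so the slope costs a multiply and a subtract rather than another exp().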