I am attempting to train a tanh neural network with two hidden layers on the MNIST data set using the ADADELTA algorithm.

Here are the parameters of my setup:

- Tanh activation function

- Two hidden layers with 784 units each (the same as the number of input units)

- I am using softmax with cross-entropy loss on the output layer

- I randomly initialized the weights with a fan-in of ~15, using Gaussian-distributed weights with a standard deviation of 1/sqrt(15)

- I am using a minibatch size of 10 with 50% dropout.

- I am using the default parameters of ADADELTA (rho=0.95, epsilon=1e-6)

- I have checked my derivatives against automatic differentiation
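The sparse initialization in the setup above can be sketched as follows. This is a minimal NumPy sketch that assumes "fan-in of ~15" means each hidden unit gets exactly 15 nonzero incoming weights, with every other weight set to zero; the function name `sparse_init` is hypothetical.

```python
import numpy as np

def sparse_init(n_in, n_out, fan_in=15, rng=None):
    """Hypothetical sparse initializer: each output unit gets exactly `fan_in`
    nonzero incoming weights drawn from N(0, 1/fan_in); the rest stay 0."""
    rng = np.random.default_rng() if rng is None else rng
    W = np.zeros((n_in, n_out))
    std = 1.0 / np.sqrt(fan_in)
    for j in range(n_out):
        # pick fan_in distinct input units to connect to output unit j
        idx = rng.choice(n_in, size=fan_in, replace=False)
        W[idx, j] = rng.normal(0.0, std, size=fan_in)
    return W

W1 = sparse_init(784, 784, fan_in=15, rng=np.random.default_rng(0))
nonzero_per_unit = (W1 != 0).sum(axis=0)
print(W1.shape, nonzero_per_unit.min(), nonzero_per_unit.max())
```

With this scheme, most first-layer weights start at exactly zero and a few are large, which is what makes any leftover initialization look like isolated "salt and pepper" pixels when the weights are reshaped to 28×28.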

When I run ADADELTA, the error drops at first, and I can see the first layer learning to identify the shapes of digits; the network does a decent job of classifying them. However, when I run ADADELTA for a long time (30,000 iterations), it is clear that something is going wrong. The objective function stops improving after a few hundred iterations (and the internal ADADELTA variables stop changing), yet the first-layer weights still carry the same sparse noise they were initialized with, with the real learned features sitting on top of that noise.
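For reference, the element-wise ADADELTA update with the default parameters (rho=0.95, epsilon=1e-6) can be sketched as below. This is a minimal NumPy sketch of the standard update rule, not my actual training code; the class name `Adadelta` and the toy parameter values are hypothetical. Note that each step is proportional to the current gradient, so a parameter whose gradient is exactly zero is never moved, however long the optimizer runs.

```python
import numpy as np

class Adadelta:
    """Element-wise ADADELTA update with running averages of g^2 and dx^2."""
    def __init__(self, shape, rho=0.95, eps=1e-6):
        self.rho, self.eps = rho, eps
        self.Eg2 = np.zeros(shape)   # running average of squared gradients
        self.Edx2 = np.zeros(shape)  # running average of squared updates

    def step(self, x, g):
        rho, eps = self.rho, self.eps
        self.Eg2 = rho * self.Eg2 + (1 - rho) * g**2
        # step size is RMS[dx]_{t-1} / RMS[g]_t, multiplied by the gradient itself
        dx = -np.sqrt(self.Edx2 + eps) / np.sqrt(self.Eg2 + eps) * g
        self.Edx2 = rho * self.Edx2 + (1 - rho) * dx**2
        return x + dx

opt = Adadelta(shape=(3,))
x = np.array([0.5, -1.2, 2.0])
g = np.array([0.1, 0.0, -0.3])  # the middle parameter receives zero gradient
for _ in range(1000):
    x = opt.step(x, g)
print(x)  # x[1] is still exactly -1.2
```

The two running averages are the "internal ADADELTA variables" mentioned above; for a parameter with zero gradient both stay at zero and the parameter keeps its initial value.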

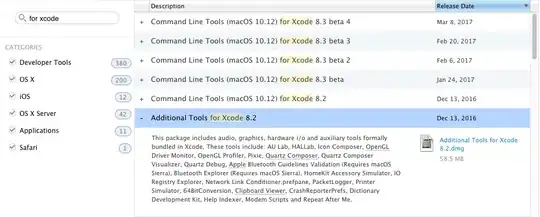

To illustrate what I mean, here is the example output from the visualization of the network.

Notice the pixel noise in the first-layer weights, even though those weights clearly have structure. This is the same noise they were initialized with.

None of the training examples contain discontinuous values like this noise, yet for some reason ADADELTA never pulls these outlier weights back in line with their neighbors.
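For reference, the gradient of the loss with respect to the first-layer weights is X^T δ (inputs transposed times the backpropagated error), so any weight attached to an input pixel that is zero in every example of a minibatch gets an exactly zero gradient for that step. The minimal NumPy sketch below illustrates this with synthetic data; the blank top row, the sizes, and the random stand-in error signal are all assumptions, not values from my network.

```python
import numpy as np

rng = np.random.default_rng(0)
batch, n_in, n_hid = 10, 784, 784

# Synthetic minibatch: pixels 0..27 (the top row of a 28x28 image) are zero
# in every example, mimicking the blank border of a digit image.
X = rng.random((batch, n_in))
X[:, :28] = 0.0

W = rng.normal(0.0, 1 / np.sqrt(15), size=(n_in, n_hid))
H = np.tanh(X @ W)                    # first hidden layer activations
dL_dH = rng.normal(size=H.shape)      # stand-in for the error from the layer above
delta = dL_dH * (1.0 - H**2)          # backprop through tanh' = 1 - tanh^2
grad_W = X.T @ delta                  # dL/dW, shape (n_in, n_hid)

zero_rows = np.all(grad_W == 0.0, axis=1)
print(zero_rows[:28].all(), zero_rows[28:].any())
```

Rows of `grad_W` belonging to always-zero inputs are exactly zero, while the other rows are not.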

What is going on?