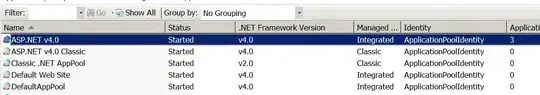

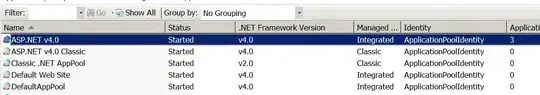

How to identify the linearly independent rows from a matrix? For instance,

The 4th rows is independent.

How to identify the linearly independent rows from a matrix? For instance,

The 4th rows is independent.

First, your 3rd row is linearly dependent with 1t and 2nd row. However, your 1st and 4th column are linearly dependent.

Two methods you could use:

Eigenvalue

If one eigenvalue of the matrix is zero, its corresponding eigenvector is linearly dependent. The documentation eig states the returned eigenvalues are repeated according to their multiplicity and not necessarily ordered. However, assuming the eigenvalues correspond to your row vectors, one method would be:

import numpy as np

matrix = np.array(

[

[0, 1 ,0 ,0],

[0, 0, 1, 0],

[0, 1, 1, 0],

[1, 0, 0, 1]

])

lambdas, V = np.linalg.eig(matrix.T)

# The linearly dependent row vectors

print matrix[lambdas == 0,:]

Cauchy-Schwarz inequality

To test linear dependence of vectors and figure out which ones, you could use the Cauchy-Schwarz inequality. Basically, if the inner product of the vectors is equal to the product of the norm of the vectors, the vectors are linearly dependent. Here is an example for the columns:

import numpy as np

matrix = np.array(

[

[0, 1 ,0 ,0],

[0, 0, 1, 0],

[0, 1, 1, 0],

[1, 0, 0, 1]

])

print np.linalg.det(matrix)

for i in range(matrix.shape[0]):

for j in range(matrix.shape[0]):

if i != j:

inner_product = np.inner(

matrix[:,i],

matrix[:,j]

)

norm_i = np.linalg.norm(matrix[:,i])

norm_j = np.linalg.norm(matrix[:,j])

print 'I: ', matrix[:,i]

print 'J: ', matrix[:,j]

print 'Prod: ', inner_product

print 'Norm i: ', norm_i

print 'Norm j: ', norm_j

if np.abs(inner_product - norm_j * norm_i) < 1E-5:

print 'Dependent'

else:

print 'Independent'

To test the rows is a similar approach.

Then you could extend this to test all combinations of vectors, but I imagine this solution scale badly with size.

With sympy you can find the linear independant rows using: sympy.Matrix.rref:

>>> import sympy

>>> import numpy as np

>>> mat = np.array([[0,1,0,0],[0,0,1,0],[0,1,1,0],[1,0,0,1]]) # your matrix

>>> _, inds = sympy.Matrix(mat).T.rref() # to check the rows you need to transpose!

>>> inds

[0, 1, 3]

Which basically tells you the rows 0, 1 and 3 are linear independant while row 2 isn't (it's a linear combination of row 0 and 1).

Then you could remove these rows with slicing:

>>> mat[inds]

array([[0, 1, 0, 0],

[0, 0, 1, 0],

[1, 0, 0, 1]])

This also works well for rectangular (not only for quadratic) matrices.

I edited the code for Cauchy-Schwartz inequality which scales better with dimension: the inputs are the matrix and its dimension, while the output is a new rectangular matrix which contains along its rows the linearly independent columns of the starting matrix. This works in the assumption that the first column in never null, but can be readily generalized in order to implement this case too. Another thing that I observed is that 1e-5 seems to be a "sloppy" threshold, since some particular pathologic vectors were found to be linearly dependent in that case: 1e-4 doesn't give me the same problems. I hope this could be of some help: it was pretty difficult for me to find a really working routine to extract li vectors, and so I'm willing to share mine. If you find some bug, please report them!!

from numpy import dot, zeros

from numpy.linalg import matrix_rank, norm

def find_li_vectors(dim, R):

r = matrix_rank(R)

index = zeros( r ) #this will save the positions of the li columns in the matrix

counter = 0

index[0] = 0 #without loss of generality we pick the first column as linearly independent

j = 0 #therefore the second index is simply 0

for i in range(R.shape[0]): #loop over the columns

if i != j: #if the two columns are not the same

inner_product = dot( R[:,i], R[:,j] ) #compute the scalar product

norm_i = norm(R[:,i]) #compute norms

norm_j = norm(R[:,j])

#inner product and the product of the norms are equal only if the two vectors are parallel

#therefore we are looking for the ones which exhibit a difference which is bigger than a threshold

if absolute(inner_product - norm_j * norm_i) > 1e-4:

counter += 1 #counter is incremented

index[counter] = i #index is saved

j = i #j is refreshed

#do not forget to refresh j: otherwise you would compute only the vectors li with the first column!!

R_independent = zeros((r, dim))

i = 0

#now save everything in a new matrix

while( i < r ):

R_independent[i,:] = R[index[i],:]

i += 1

return R_independent

I know this was asked a while ago, but here is a very simple (although probably inefficient) solution. Given an array, the following finds a set of linearly independent vectors by progressively adding a vector and testing if the rank has increased:

from numpy.linalg import matrix_rank

def LI_vecs(dim,M):

LI=[M[0]]

for i in range(dim):

tmp=[]

for r in LI:

tmp.append(r)

tmp.append(M[i]) #set tmp=LI+[M[i]]

if matrix_rank(tmp)>len(LI): #test if M[i] is linearly independent from all (row) vectors in LI

LI.append(M[i]) #note that matrix_rank does not need to take in a square matrix

return LI #return set of linearly independent (row) vectors

#Example

mat=[[1,2,3,4],[4,5,6,7],[5,7,9,11],[2,4,6,8]]

LI_vecs(4,mat)

I interpret the problem as finding rows that are linearly independent from other rows. That is equivalent to finding rows that are linearly dependent on other rows.

Gaussian elimination and treat numbers smaller than a threshold as zeros can do that. It is faster than finding eigenvalues of a matrix, testing all combinations of rows with Cauchy-Schwarz inequality, or singular value decomposition.

Problem with floating point numbers:

http://numpy-discussion.10968.n7.nabble.com/Reduced-row-echelon-form-td16486.html

With regards to the following discussion:

Find dependent rows/columns of a matrix using Matlab?

from sympy import *

A = Matrix([[1,1,1],[2,2,2],[1,7,5]])

print(A.nullspace())

It is obvious that the first and second row are multiplication of each other.

If we execute the above code we get [-1/3, -2/3, 1]. The indices of the zero elements in the null space show independence. But why is the third element here not zero? If we multiply the A matrix with the null space, we get a zero column vector. So what's wrong?

The answer which we are looking for is the null space of the transpose of A.

B = A.T

print(B.nullspace())

Now we get the [-2, 1, 0], which shows that the third row is independent.

Two important notes here:

Consider whether we want to check the row dependencies or the column dependencies.

Notice that the null space of a matrix is not equal to the null space of the transpose of that matrix unless it is symmetric.

You can basically find the vectors spanning the columnspace of the matrix by using SymPy library's columnspace() method of Matrix object. Automatically, they are the linearly independent columns of the matrix.

import sympy as sp

import numpy as np

M = sp.Matrix([[0, 1, 0, 0],

[0, 0, 1, 0],

[1, 0, 0, 1]])

for i in M.columnspace():

print(np.array(i))

print()

# The output is following.

# [[0]

# [0]

# [1]]

# [[1]

# [0]

# [0]]

# [[0]

# [1]

# [0]]

A (possibly) shorter solution:

def cartesian_product(vec1, vec2):

return np.transpose(np.array([np.repeat(vec1, len(vec2)), np.tile(vec2, len(vec1))]))

def check_dependencies(matrix_):

def rows_are_dependent(indices):

if indices[0] == indices[1]:

return False

return np.unique(matrix_[indices[0],] / matrix_[indices[1],]).shape[0] == 1

return np.any(np.apply_along_axis(rows_are_dependent, 1, cartesian_product(np.arange(matrix_.shape[0]), np.arange(matrix_.shape[0]))))

Simply, use rank to check linear dependency/ independency.

If the rank of the matrix is less than the dimension of the matrix (in a square matrix) or rank is less than the min(dim(matrix)) then the matrix is linearly dependent.

import numpy as np

matrix = np.array(

[

[0, 1 ,0 ,0],

[0, 0, 1, 0],

[0, 1, 1, 0],

[1, 0, 0, 1]

])

rank = np.linalg.matrix_rank(matrix)

#Check if the matrix is linearly independent

if rank == matrix.shape[0]:

print("The matrix is linearly independent.")

else:

print("The matrix is linearly dependent.")

matrix = np.array(

[

[0, 1 ,0 ,0],

[0, 0, 1, 0],

[0, 1, 1, 0]

])

rank = np.linalg.matrix_rank(matrix)

# Check if the matrix is linearly independent

if rank == min(matrix.shape):

print("The matrix is linearly independent.")

else:

print("The matrix is linearly dependent.")