The recursive implementation has an approximative time complexity of 2 square n (2^n) which means that the algorithm will have to go approximately through 64 computing steps to get the 6th Fibonacci number. This is huge and not optimal, it will take approximately the program 1073741824 computing steps to get the Fibonacci number of 30, a small number, which is unacceptable. Practically, the iterative approach is definitely way better and optimized.

After knowing the time complexity of this implementation, one may think that the space complexity of it may be the same and yet it is not. The space complexity of this implementation equals O(n), and it never exceeds it. So, let's demystify the "why"?.

This is because the function calls that are being executed recursively may seem, at first glance, being executed concurrently, but In reality, they are instead being executed sequentially.

Sequential execution guarantees that the stack size will never exceed the depth of the calls' tree illustrated above. The program starts by executing all the left-most calls before turning to the right and when an F0 or F1 call return, their corresponding stack frames get popped off.

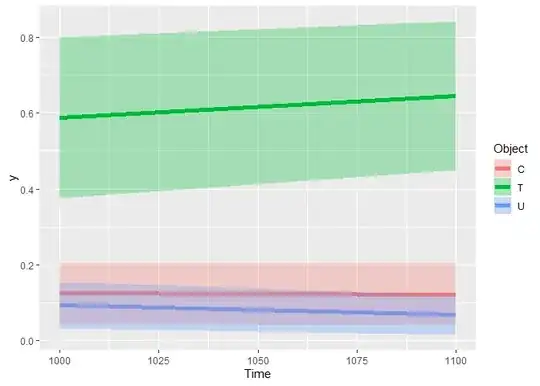

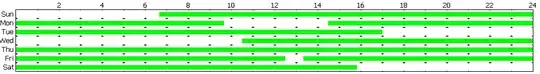

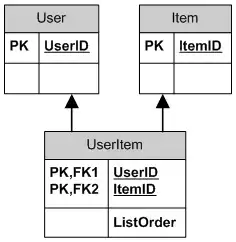

In the figure below, each rectangle represent a function call and the arrows represent the path taken by the program to reach the end of recursion:

What we can notice here is that when the program reaches the call F4F3F2F1 and returns 1, it goes back to its parent call F4F3F2 to execute the right recursive sub-call F4F3F2F0 and when both F4F3F2F0 and F4F3F2F1 calls return, the program returns to F4F3. So the stack never exceeds the size of the longest path F4F3F2F1.

The program will follow this same pattern of execution, going from parents to children and returning to parents after executing left and right calls until it reaches the last root parent of all F4, bringing the calculated sum of Fibonacci which 3.