1. CPU percentage load

I agree with @rth's answer regarding trying to use Linux job priority / `renice` to increase the CPU percentage. It's

- almost certainly not going to work

and (as you've found)

- you're unlikely to be able to do it, as you won't have superuser privileges on the nodes (it's pretty unlikely you can even log into the worker nodes; probably only the head node)

The CPU usage of your model as it runs is mainly a function of your code structure: if it runs at 100% CPU locally, it will probably run like that on the node while it's running.
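If you want to check this before submitting, a rough approach is to time one model run locally and compare the CPU time the process consumed with the wall-clock time that elapsed. The Python sketch below is only an illustration: `cpu_utilization` and `run_model` are hypothetical names (swap `run_model` for your model's real entry point), and it only counts CPU time used by the current process, not by any child processes your model launches.

```python
import time


def cpu_utilization(func, *args, **kwargs):
    """Run func once and return (result, cpu_seconds / wall_seconds).

    A ratio near 1.0 means the run kept roughly one core busy; values
    above 1.0 are possible if func uses several threads in this process.
    CPU time spent in child processes is NOT counted.
    """
    wall_start = time.perf_counter()
    cpu_start = time.process_time()  # CPU time of all threads in this process
    result = func(*args, **kwargs)
    cpu_elapsed = time.process_time() - cpu_start
    wall_elapsed = time.perf_counter() - wall_start
    ratio = cpu_elapsed / wall_elapsed if wall_elapsed > 0 else 0.0
    return result, ratio


def run_model(n=2_000_000):
    # Hypothetical stand-in for one CPU-bound model run.
    total = 0
    for i in range(n):
        total += i * i
    return total


if __name__ == "__main__":
    _, ratio = cpu_utilization(run_model)
    print(f"Model run: {ratio:.0%} of one core")
    # A run that mostly waits (I/O, sleeping) scores close to 0%.
    _, idle_ratio = cpu_utilization(time.sleep, 0.2)
    print(f"Sleeping: {idle_ratio:.0%} of one core")
```

If the model already scores close to 100% here, there is little that scheduler settings on the cluster can add.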

Here are some answers to the more specific parts of your question:

2. CQLOAD

You ask:

> CQLOAD (what does it mean too?)

The docs for this are hard to find, but you linked to the spec of your cluster, which tells us that its scheduling engine is Sun's *Grid Engine*. The man pages are available online, and you can also read them locally (in particular, type `man qstat`).

If you search the `qstat` man page for `-g c`, you will see the output format described. In particular, the second column (CQLOAD) is described as:

> OUTPUT FORMATS
>
> ...
>
> an average of the normalized load average of all queue hosts. In order to reflect each hosts different significance the number of configured slots is used as a weighting factor when determining cluster queue load. Please note that only hosts with a np_load_value are considered for this value. When queue selection is applied only data about selected queues is considered in this formula. If the load value is not available at any of the hosts '-NA-' is printed instead of the value from the complex attribute definition.

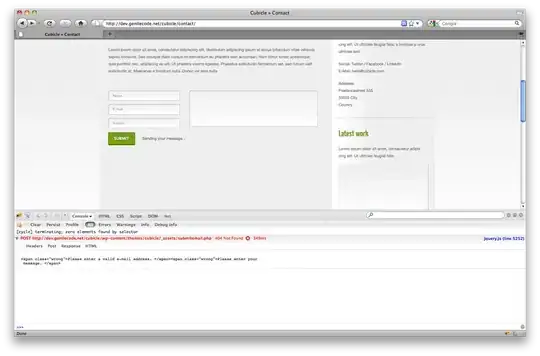

This means that CQLOAD gives an indication of how heavily the processors in the queue are being used. Your output screenshot above shows 0.84, which indicates that the slot-weighted average normalized load across the hosts in `all.q` is about 84%. That doesn't seem too low.
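To make the weighting concrete, here is a rough Python model of the formula the man page describes. It is a sketch of that description, not Grid Engine's actual source: the `Host` record, its field names and the example numbers are made up for illustration, and it assumes a host's normalized load is its load average divided by its processor count (the usual definition of `np_load_avg`).

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Host:
    name: str
    load_avg: Optional[float]  # None if the host reports no load value
    num_proc: int              # processors on the host
    slots: int                 # slots configured for the queue on this host


def cluster_queue_load(hosts: Sequence[Host]) -> Optional[float]:
    """Slot-weighted mean of each host's normalized load (load_avg / num_proc).

    Hosts without a load value are skipped; returning None stands in
    for the '-NA-' that qstat prints when no host reports a value.
    """
    weighted_sum = 0.0
    total_slots = 0
    for h in hosts:
        if h.load_avg is None or h.num_proc <= 0:
            continue  # only hosts with a load value are considered
        np_load = h.load_avg / h.num_proc
        weighted_sum += np_load * h.slots
        total_slots += h.slots
    if total_slots == 0:
        return None
    return weighted_sum / total_slots


if __name__ == "__main__":
    hosts = [
        Host("node01", load_avg=14.4, num_proc=16, slots=16),  # normalized 0.9
        Host("node02", load_avg=4.8, num_proc=8, slots=8),     # normalized 0.6
        Host("node03", load_avg=None, num_proc=8, slots=8),    # no value: ignored
    ]
    print(round(cluster_queue_load(hosts), 2))  # prints 0.8
```

Weighting by slots means a 16-slot host moves the figure twice as much as an 8-slot host with the same normalized load, which is why the result is 0.8 rather than the plain mean of 0.75.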

3. Number of nodes reserved

In a related question, you state that colleagues are complaining that your processes are not using enough CPU. I'm not sure what that's based on, but I wonder whether the real problem is that you're reserving a lot of nodes (even if only for a short time) for a job that they can see could run on fewer.

You might want to experiment with using fewer nodes (unless your results then come back too slowly). You do that by altering the line `#$ -pe mpi 24`: try lowering the 24 (strictly, this number is the count of slots requested from the `mpi` parallel environment, which Grid Engine may spread over several nodes). You can work out roughly how many you need by timing how long one model run takes on your computer and then using:

N = (time for 1 model run × number of runs in experiment) / (time you want the whole experiment to take)
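As a worked example with made-up numbers: if one model run takes 2 minutes on your machine, the experiment needs 600 runs, and you are happy to wait an hour, then N = (2 × 600) / 60 = 20, so 20 would do instead of 24. The hypothetical helper below does the same arithmetic and rounds up. It assumes the runs are independent and ignores queueing, MPI start-up overhead and any speed difference between your machine and the nodes, so treat the answer as a starting point rather than a guarantee.

```python
import math


def estimate_slots(minutes_per_run: float, n_runs: int, target_minutes: float) -> int:
    """Rough number of parallel slots needed (hypothetical helper).

    Assumes independent runs, with each slot working through its share
    of the runs one after another.
    """
    if minutes_per_run <= 0 or n_runs <= 0 or target_minutes <= 0:
        raise ValueError("all inputs must be positive")
    # Round up: a fractional slot still needs a whole one.
    return math.ceil(minutes_per_run * n_runs / target_minutes)


if __name__ == "__main__":
    # Made-up numbers: 2 min per run, 600 runs, results wanted within 1 hour.
    print(estimate_slots(2, 600, 60))  # prints 20
```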