I am writing a program that reads some text files and writes their contents to a JPEG file using libjpeg. When I set the quality to 100 (with `jpeg_set_quality`), there is no quality degradation in grayscale. However, when I switch to RGB, there still appears to be lossy compression, even with a quality of 100.

When I convert this input to a grayscale JPEG image, it works nicely and gives me a clean image:

    0 0 0 0 0
    0 0 0 0 0
    0 0 0 0 0
    0 255 0 0 0
    255 0 0 0 0

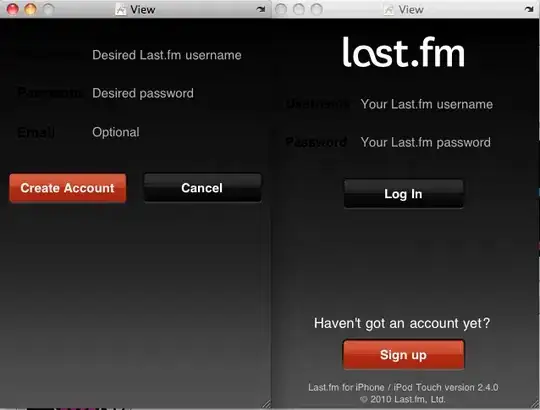

The (horizontally flipped) output is:

Now suppose that array is the red channel, and I use the following two arrays for the green and blue channels, respectively:

Green:

    0 0 0 0 0
    0 0 0 0 0
    0 0 255 0 0
    0 0 0 0 0
    0 0 0 0 0

Blue:

    0 0 0 0 255
    0 0 0 255 0
    0 0 0 0 0
    0 0 0 0 0
    0 0 0 0 0
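For reference, here is a minimal sketch of packing the three separate channel arrays into the interleaved R, G, B layout that libjpeg reads when `in_color_space = JCS_RGB` and `input_components = 3`. It assumes the arrays are held in memory as 5 × 5 `unsigned char` planes; `interleave_rgb`, `W` and `H` are hypothetical names, not taken from the question's code.

```c
#include <assert.h>
#include <stddef.h>

#define W 5 /* image width in pixels (the 5 x 5 example above) */
#define H 5 /* image height in pixels */

/* Interleave three planar channels into one packed buffer where each
 * pixel occupies three consecutive bytes: R, G, B. Each output row is
 * W * 3 bytes long, which is the scanline layout for 3-component RGB. */
static void interleave_rgb(const unsigned char r[H][W],
                           const unsigned char g[H][W],
                           const unsigned char b[H][W],
                           unsigned char out[H * W * 3])
{
    assert(r != NULL && g != NULL && b != NULL && out != NULL);
    for (size_t y = 0; y < H; y++) {
        for (size_t x = 0; x < W; x++) {
            size_t i = (y * W + x) * 3; /* byte offset of pixel (x, y) */
            out[i + 0] = r[y][x];
            out[i + 1] = g[y][x];
            out[i + 2] = b[y][x];
        }
    }
}
```

In a typical 8-bit libjpeg build, each row starting at `out + y * W * 3` can then be passed to `jpeg_write_scanlines` as one `JSAMPROW`.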

This is the color output I get:

Although only 5 input pixels have a nonzero value, the surrounding pixels also pick up color in the RGB output. For both the grayscale image and the RGB image, the quality was set to 100.
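One factor that commonly produces this kind of color bleed (not confirmed for this particular program) is chroma subsampling. By default, `jpeg_set_defaults` stores RGB input as YCbCr, with the Cb and Cr channels at half resolution horizontally and vertically (2 × 2 sampling factors on the Y component), while a grayscale image has no chroma channels at all. The sketch below is a simplified model, not libjpeg's code: it uses the JFIF RGB/YCbCr equations in floating point, plain box averaging over a 2 × 2 block, and reuses the averaged chroma for every pixel. `rgb_t`, `to_byte` and `subsample_block` are hypothetical names.

```c
#include <assert.h>

/* One RGB pixel with floating-point channels in 0..255. */
typedef struct { double r, g, b; } rgb_t;

/* Clamp to 0..255 and round to the nearest integer. */
static int to_byte(double v)
{
    if (v < 0.0) v = 0.0;
    if (v > 255.0) v = 255.0;
    return (int)(v + 0.5);
}

/* Simplified model of 2x2 chroma subsampling on one 2x2 block:
 * luma (Y) is kept per pixel, while Cb and Cr are replaced by their
 * block average. libjpeg's fixed-point math and resampling filters
 * differ in detail, so real output values will not match exactly. */
static void subsample_block(const rgb_t in[4], int out[4][3])
{
    assert(in != 0 && out != 0);
    double y[4], cb_avg = 0.0, cr_avg = 0.0;

    for (int i = 0; i < 4; i++) {
        y[i] = 0.299 * in[i].r + 0.587 * in[i].g + 0.114 * in[i].b;
        cb_avg += 128.0 - 0.168736 * in[i].r - 0.331264 * in[i].g + 0.5 * in[i].b;
        cr_avg += 128.0 + 0.5 * in[i].r - 0.418688 * in[i].g - 0.081312 * in[i].b;
    }
    cb_avg /= 4.0;
    cr_avg /= 4.0;

    /* Rebuild every pixel from its own Y plus the shared (averaged) chroma. */
    for (int i = 0; i < 4; i++) {
        out[i][0] = to_byte(y[i] + 1.402 * (cr_avg - 128.0));
        out[i][1] = to_byte(y[i] - 0.344136 * (cb_avg - 128.0)
                                 - 0.714136 * (cr_avg - 128.0));
        out[i][2] = to_byte(y[i] + 1.772 * (cb_avg - 128.0));
    }
}
```

In this model, a block holding one pure-red pixel and three black ones comes back with the black neighbors at about (45, 0, 0) and the red pixel at about (121, 57, 57). If subsampling is the cause, setting `cinfo.comp_info[0].h_samp_factor = 1;` and `cinfo.comp_info[0].v_samp_factor = 1;` after `jpeg_set_defaults(&cinfo)` requests full-resolution chroma (4:4:4); the YCbCr conversion and DCT rounding can still leave small differences even at quality 100.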

What is causing this, and how can I fix it so that color appears only in the pixels that actually have an input value?