I have a matrix of size (61964, 25). Here is a sample:

    array([[  1.,   0.,   0.,   4.,   0.,   1.,   0.,   0.,   0.,   0.,   3.,
              0.,   2.,   1.,   0.,   0.,   3.,   0.,   3.,   0.,  14.,   0.,
              2.,   0.,   4.],
           [  0.,   0.,   0.,   1.,   2.,   0.,   0.,   0.,   0.,   0.,   1.,
              0.,   2.,   0.,   0.,   0.,   0.,   0.,   0.,   0.,   5.,   0.,
              0.,   0.,   1.]])

Scikit-learn offers a convenient function for standardizing data, as long as the data are normally distributed:

    from sklearn import preprocessing

    # scale() standardizes each column (axis=0 by default) to zero mean and unit variance
    X_2 = preprocessing.scale(X[:, :3])

My problem, however, is that I have to work row by row, and each row consists of only 25 observations, so the normal distribution is not applicable here. The solution is to use the t-distribution, but how can I do that in Python?
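To make the goal concrete, here is a minimal sketch of the kind of row-wise check I have in mind. It assumes that "using the t-distribution" means standardizing each row with its own mean and sample standard deviation, then comparing the scores against a Student's t critical value with n - 1 degrees of freedom. The function name `row_t_outlier_mask`, the `alpha` parameter, and the demo data are made up for illustration, and this may well not be the statistically right way to do it.

```python
import numpy as np
from scipy import stats


def row_t_outlier_mask(X, alpha=0.05):
    """Return a boolean mask marking rows with at least one extreme value.

    Hypothetical helper: each row is standardized with its own mean and
    sample standard deviation, and the scores are compared against a
    two-sided Student's t critical value with n - 1 degrees of freedom.
    """
    X = np.asarray(X, dtype=float)
    n = X.shape[1]  # observations per row (25 in my matrix)

    mean = X.mean(axis=1, keepdims=True)
    std = X.std(axis=1, ddof=1, keepdims=True)  # sample std, hence ddof=1

    # Constant rows have zero spread; divide by 1 so their scores stay 0.
    safe_std = np.where(std > 0, std, 1.0)
    scores = (X - mean) / safe_std

    # Two-sided critical value of the t-distribution.
    t_crit = stats.t.ppf(1 - alpha / 2, df=n - 1)
    return (np.abs(scores) > t_crit).any(axis=1)


# Made-up demo data: a well-behaved row, a row with one spike, a constant row.
row_a = np.array([1.0, 2.0] * 12 + [1.0])
row_b = np.array([0.0] * 24 + [20.0])
row_c = np.zeros(25)
X_demo = np.vstack([row_a, row_b, row_c])

mask = row_t_outlier_mask(X_demo)
X_kept = X_demo[~mask]  # drop every row that contains an extreme value
```

With `alpha=0.05` and 25 values per row, this rule is quite strict, so the threshold would probably need tuning on real count data like mine.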

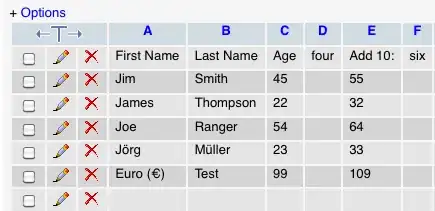

Normally, values go from 0 to, say, 20. When I see unusually high numbers, I filter out the whole row. The following histogram shows what my actual distribution looks like: