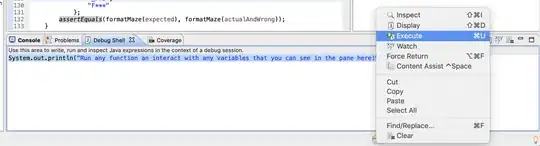

I'm using Core Image for face detection like this:

```objc
CIImage *image = [CIImage imageWithCGImage:aImage.CGImage];

// Create the face detector
NSDictionary *opts = [NSDictionary dictionaryWithObject:CIDetectorAccuracyHigh
                                                 forKey:CIDetectorAccuracy];
CIDetector *detector = [CIDetector detectorOfType:CIDetectorTypeFace
                                          context:nil
                                          options:opts];

// Pull out the features of the face and loop through them
NSArray *features = [detector featuresInImage:image];
```
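To see exactly what the detector returns for a partially visible face, the loop that the code's comment mentions could be sketched roughly as follows. This is a minimal sketch that reuses the same detector setup; the helper name `DetectAndLogFaces` is hypothetical, and it only logs each face's bounds plus whichever eye and mouth positions the returned `CIFaceFeature` reports.

```objc
#import <Foundation/Foundation.h>
#import <CoreGraphics/CoreGraphics.h>
#import <CoreImage/CoreImage.h>

// Hypothetical helper: runs the same high-accuracy face detection as the
// code above and logs what the detector reports for each face it finds.
static NSArray *DetectAndLogFaces(CGImageRef cgImage)
{
    CIImage *image = [CIImage imageWithCGImage:cgImage];

    NSDictionary *opts = [NSDictionary dictionaryWithObject:CIDetectorAccuracyHigh
                                                     forKey:CIDetectorAccuracy];
    CIDetector *detector = [CIDetector detectorOfType:CIDetectorTypeFace
                                              context:nil
                                              options:opts];

    NSArray *features = [detector featuresInImage:image];
    NSLog(@"found %lu face(s)", (unsigned long)[features count]);

    // A face detector returns CIFaceFeature objects.
    for (CIFaceFeature *face in features) {
        // Bounds are in Core Image coordinates (origin at the bottom-left).
        CGRect b = face.bounds;
        NSLog(@"bounds: x=%.1f y=%.1f w=%.1f h=%.1f",
              (double)b.origin.x, (double)b.origin.y,
              (double)b.size.width, (double)b.size.height);

        // Each landmark position is only meaningful when its has... flag is YES.
        if (face.hasLeftEyePosition) {
            NSLog(@"left eye: (%.1f, %.1f)",
                  (double)face.leftEyePosition.x, (double)face.leftEyePosition.y);
        }
        if (face.hasRightEyePosition) {
            NSLog(@"right eye: (%.1f, %.1f)",
                  (double)face.rightEyePosition.x, (double)face.rightEyePosition.y);
        }
        if (face.hasMouthPosition) {
            NSLog(@"mouth: (%.1f, %.1f)",
                  (double)face.mouthPosition.x, (double)face.mouthPosition.y);
        }
    }
    return features;
}
```

Calling it as `DetectAndLogFaces(aImage.CGImage)` with the same `UIImage` would show whether the detector returns no face at all for the cropped picture, or a face with some landmarks missing.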

However, I found that when I use a picture containing a complete face, the face is detected correctly, but when the face is only partially visible, detection fails, as shown in the snapshot:

What's wrong with my code? Or does `CIDetector` only work well with complete faces?

[Update] Here is my code; I could only detect the left