I'm trying to programmatically delete all the rows in a Socrata dataset, using the SODA API. I don't want to delete the dataset itself, because then I'd have lots of work to reconstruct it. It's also very slow to do this for thousands of rows.

Previously, I iterated slowly over each row, deleting each, until someone on Twitter, suggested doing an Upsert with no rows. I believe that I implemented this and it worked, but now it doesn't.

Here's the relevant bit of code:

headers = {'X-App-Token': config.app_token}

headers['Content-Type'] = 'application/json'

r = requests.put(config.dataset + '.json', data='[ ]', headers=headers, auth=config.auth)

print r.status_code, r.text

The output is:

200 {

"By RowIdentifier" : 0,

"Rows Updated" : 0,

"Rows Deleted" : 0,

"Rows Created" : 0,

"Errors" : 0,

"By SID" : 0

}

(So I think it's safe to say that the problem is not to do with authentication, authorisation, etc? My other functions to upsert rows work fine, so it's also not a problem with a wrong dataset URL or anything.)

I also query the number of rows before and afterwards, and there is no change. Still thousands of rows.

As far as I can tell, I'm following the API document for Replacing rows in bulk.

The only thing I can think of is that due to a bug, I have multiple rows with the same rowid.

EDIT

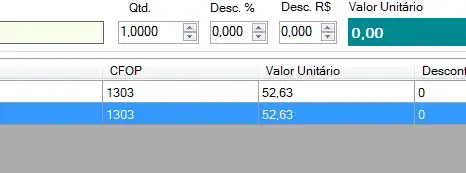

Here are some duplicate row identifiers:

rowid is definitely set as a row identifier:

Now given that row identifiers are supposed to "essentially act the same way as primary keys", I'm starting to wonder if this is a bug, or something has gone horribly wrong? The publishing code looks like this:

def publishDataset(rows):

r = requests.post(config.dataset, data=simplejson.dumps(rows), headers = headers, auth=config.auth)

j = r.json()

print

if r.status_code != 200:

raise RuntimeError ( "%d Socrata error: %s" % (r.status_code, j['message']))

return j

Full code here: https://github.com/stevage/meshlium-soda