I'd appreciate it if someone more experienced on implementation would help me to spot my logical flaw in my current code. For the past couple of hours I've been stuck with the implementation and testing of various step sizes for the following RK4 function to solve the Lotka-Volterra Differential equation.

I did my absolute best to ensure readability of the code and comment out crucial steps, so the code below should be clear.

import matplotlib.pyplot as plt

import numpy as np

def model(state,t):

"""

A function that creates an 1x2-array containing the Lotka Volterra Differential equation

Parameter assignement/convention:

a natural growth rate of the preys

b chance of being eaten by a predator

c dying rate of the predators per week

d chance of catching a prey

"""

x,y = state # will corresponding to initial conditions

# consider it as a vector too

a = 0.08

b = 0.002

c = 0.2

d = 0.0004

return np.array([ x*(a-b*y) , -y*(c - d*x) ]) # corresponds to [dx/dt, dy/dt]

def rk4( f, x0, t):

"""

4th order Runge-Kutta method implementation to solve x' = f(x,t) with x(t[0]) = x0.

INPUT:

f - function of x and t equal to dx/dt.

x0 - the initial condition(s).

Specifies the value of x @ t = t[0] (initial).

Can be a scalar or a vector (NumPy Array)

Example: [x0, y0] = [500, 20]

t - a time vector (array) at which the values of the solution are computed at.

t[0] is considered as the initial time point

the step size h is dependent on the time vector, choosing more points will

result in a smaller step size.

OUTPUT:

x - An array containing the solution evaluated at each point in the t array.

"""

n = len( t )

x = np.array( [ x0 ] * n ) # creating an array of length n

for i in xrange( n - 1 ):

h = t[i+1]- t[i] # step size, dependent on time vector

# starting below - the implementation of the RK4 algorithm:

# for further informations visit http://en.wikipedia.org/wiki/Runge-Kutta_methods

# k1 is the increment based on the slope at the beginning of the interval (same as Euler)

# k2 is the increment based on the slope at the midpoint of the interval

# k3 is AGAIN the increment based on the slope at the midpoint

# k4 is the increment based on the slope at the end of the interval

k1 = f( x[i], t[i] )

k2 = f( x[i] + 0.5 * h * k1, t[i] + 0.5 * h )

k3 = f( x[i] + 0.5 * h * k2, t[i] + 0.5 * h )

k4 = f( x[i] + h * k3, t[i] + h )

# finally computing the weighted average and storing it in the x-array

t[i+1] = t[i] + h

x[i+1] = x[i] + h * ( ( k1 + 2.0 * ( k2 + k3 ) + k4 ) / 6.0 )

return x

################################################################

# just the graphical output

# initial conditions for the system

x0 = 500

y0 = 20

# vector of times

t = np.linspace( 0, 200, 150 )

result = rk4( model,[x0,y0], t )

plt.plot(t,result)

plt.xlabel('Time')

plt.ylabel('Population Size')

plt.legend(('x (prey)','y (predator)'))

plt.title('Lotka-Volterra Model')

plt.show()

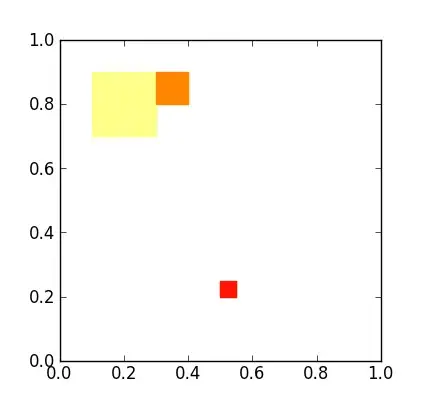

The current output looks 'okay-ish' on a small interval and then goes 'berserk'. Oddly enough the code seems to perform better when I choose a larger step size rather than a small one, which points out that my implementation must be wrong, or maybe my model is off. I couldn't spot the error myself.

Output (wrong):

and this is the desired output which can be easily obtained by using one of Scipys integration modules. Note that on the time interval [0,50] the simulation seems correct, then it gets worse by every step.