I am looking into the effect of the training sample size when doing a ridge (regularised) regression.

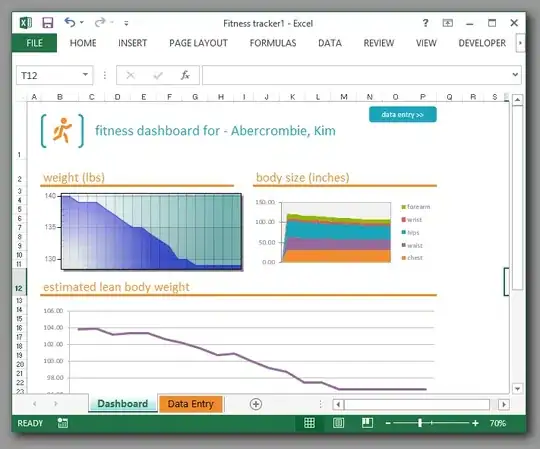

I get this very strange graph when I plot the test error versus the train set size:  .

.

The following code generates a training set and a test set and performs ridge regression for a low value of the regularization parameter.

The mean test error and its standard deviation are plotted against the size of the training set.

Note that the dimension of the generated data is 10.
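In formulas, my understanding of what the script computes is the following. The symbols $n$ (training set size), $m$ (test set size), $d$ (dimension) and $\varepsilon$ (noise) are notation I am introducing here; in the code they correspond to `samples`, `test`, `dimension` and the `randn` noise terms.

$$
y = X a + \varepsilon, \qquad \varepsilon \sim \mathcal{N}(0, I_n), \qquad a \sim \mathcal{N}(0, I_d)
$$

$$
\hat{a} = \left( X^\top X + \gamma\, n\, I_d \right)^{-1} X^\top y
$$

$$
\text{test error} = \frac{1}{m} \left\lVert y_{\text{test}} - X_{\text{test}}\, \hat{a} \right\rVert_2^2
$$

Here $d = 10$, $m = 300$, $\gamma = 10^{-5}$, and $n$ runs from 8 to 12.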

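    % settings
    samplerange = 8:12;   % training set sizes to try
    maxiter = 100;        % repetitions per training set size
    test = 300;           % number of test points
    dimension = 10;       % dimension of the data
    gamma = 10^-5;        % regularization parameter
    rng(2);               % fix the seed for reproducibility

    figure(1);
    hold on;
    % one column of test errors per training set size
    % (named testerr to avoid shadowing the built-in error function)
    testerr = zeros(maxiter, numel(samplerange));
    for k = 1:numel(samplerange)
        samples = samplerange(k);
        for iter = 1:maxiter
            % training data
            a = randn(dimension,1);
            xtrain = randn(samples,dimension);
            ytrain = xtrain*a + randn(samples,1);
            % test data
            xtest = randn(test,dimension);
            ytest = xtest*a + randn(test,1);
            % ridge regression
            afit = (xtrain'*xtrain + gamma*samples*eye(dimension)) \ (xtrain'*ytrain);
            % mean squared test error
            resid = ytest - xtest*afit;
            testerr(iter,k) = (resid'*resid) / test;
        end
        errorbar(samples, mean(testerr(:,k)), std(testerr(:,k)), '.');
    end
    hold off;

    % mean and standard deviation of the test error for each training set size
    mean(testerr)'
    std(testerr)'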

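I get the following mean test errors and standard deviations, one per training set size:

| Training set size | Mean test error | Standard deviation |
|---|---|---|
| 8 | 14.0982 | 39.3148 |
| 9 | 28.1679 | 126.0627 |
| 10 | 201.4467 | 756.4289 |
| 11 | 75.4921 | 568.7223 |
| 12 | 16.2038 | 65.9008 |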

Why does the error go up and then down? Each value is averaged over 100 iterations, so I don't think this is just chance.

I believe it has something to do with the fact that the dimension of the data is 10: the error peaks when the training set size equals the dimension. It may be a numerical issue, since the test error should of course decrease as the training set gets bigger...
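One way I could probe the numerical hypothesis is to look at the condition number of the matrix being inverted for each training set size. The snippet below is only a sketch under the same settings as my script; the variable names `medcond` and `c` are introduced here for illustration, and using the median over repetitions is my own choice.

```matlab
% Sketch: condition number of the regularised normal-equations matrix
% for each training set size (same settings as the script above).
samplerange = 8:12;
dimension = 10;
gamma = 10^-5;
maxiter = 100;
rng(2);   % assumption: any fixed seed would do

medcond = zeros(size(samplerange));   % median condition number per size
for k = 1:numel(samplerange)
    n = samplerange(k);
    c = zeros(maxiter, 1);
    for iter = 1:maxiter
        xtrain = randn(n, dimension);
        A = xtrain'*xtrain + gamma*n*eye(dimension);
        c(iter) = cond(A);            % 2-norm condition number
    end
    medcond(k) = median(c);
end

% training set size next to the median condition number
disp([samplerange' medcond']);
```

If these condition numbers stay far below `1/eps`, round-off in the linear solve is probably not what drives the peak, and the cause would have to be statistical rather than computational.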

If any of you can shed some light on what is going on, I'd be grateful!