All,

Having searched StackOverflow and the wider internet, I am still struggling to calculate percentiles efficiently using LINQ.

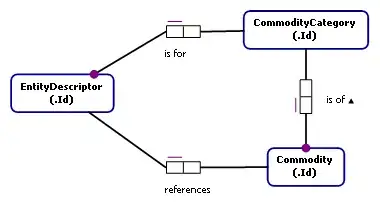

A percentile is a measure used in statistics indicating the value below which a given percentage of observations in a group fall. The example below attempts to convert a list of values into an array in which each (unique) value is paired with its associated percentile. The min() and max() of the list are necessarily the 0% and 100% entries of the returned array.

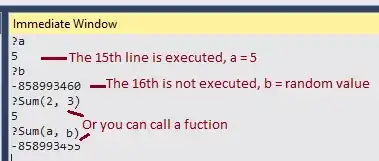

For the list 1, 2, 3, the required output is a VP[] that can be interpreted as: at 0% the minimum value is 1; at 50%, halfway between the minimum and maximum, the value is 2; at 100% the maximum value is 3.

Using LINQPad, the code below generates this output:

    void Main()
    {
        var list = new List<double> {1,2,3};
        double denominator = list.Count - 1;

        var answer = list.Select(x => new VP
            {
                Value = x,
                Percentile = list.Count(y => x > y) / denominator
            })
            //.GroupBy(grp => grp.Value) --> commented out until attempted duplicate solution
            .ToArray();

        answer.Dump();
    }

    public struct VP
    {
        public double Value;
        public double Percentile;
    }

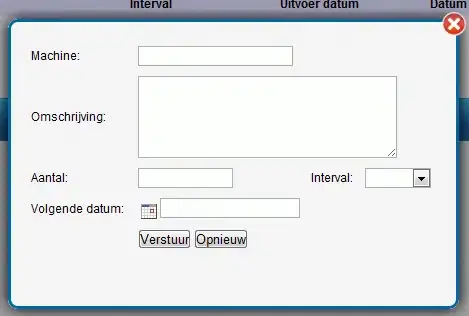

However, this returns an incorrect VP[] when "list" contains duplicate entries (e.g. 1, 2, **2**, 3).

My attempts to group by unique values in the list (by including ".GroupBy(grp => grp.Value)") have failed to yield the desired result (Value = 2, Percentile = 0.666).
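To make the discrepancy concrete, here is the arithmetic for the list 1, 2, 2, 3. The symbols are introduced here for illustration only: $n$ is list.Count, $P(x)$ is what the code above computes, and $P'(x)$ is my reading of the rule that would produce the desired 0.666 (an assumption, since I have only the one target value to go on).

$$
P(x) = \frac{\lvert\{\, y : y < x \,\}\rvert}{n - 1}, \qquad P(2) = \frac{1}{4 - 1} \approx 0.333
$$

$$
P'(x) = \frac{\lvert\{\, y : y \le x \,\}\rvert - 1}{n - 1}, \qquad P'(2) = \frac{3 - 1}{4 - 1} \approx 0.667
$$

Both rules agree when there are no duplicates (for 1, 2, 3 each gives 0, 0.5 and 1), and both give 0 for the minimum and 1 for the maximum. They differ only in whether a repeated value takes the lowest or the highest rank among its duplicates. Separately, the current code emits one row per list element, so the value 2 appears twice.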

All suggestions are welcome, including whether this is an efficient approach given the repeated iteration in "list.Count(y => x > y)".
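One direction that might address both the duplicates and the repeated iteration, offered as a minimal sketch rather than a verified solution: sort the list once, remember each element's position in the sorted order, group by value, and divide the highest position in each group by Count - 1. The names PercentileSketch and ToPercentiles are hypothetical, the "highest position wins" rule is an assumption inferred from the desired 0.666, and the guard for lists with fewer than two values is an addition not present in the original code.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public struct VP
{
    public double Value;
    public double Percentile;
}

// Hypothetical helper class; not part of the original code.
public static class PercentileSketch
{
    public static VP[] ToPercentiles(IReadOnlyList<double> list)
    {
        // Added guard (assumption): with fewer than two values the
        // denominator Count - 1 would be zero or negative.
        if (list.Count < 2)
            throw new ArgumentException("Need at least two values.", nameof(list));

        double denominator = list.Count - 1;

        return list
            .OrderBy(x => x)                                 // sort once instead of counting per element
            .Select((value, index) => new { value, index })  // index = position in sorted order
            .GroupBy(p => p.value)                           // one group per unique value, still in ascending order
            .Select(g => new VP
            {
                Value = g.Key,
                // Assumption: a duplicated value takes the highest
                // position among its duplicates.
                Percentile = g.Max(p => p.index) / denominator
            })
            .ToArray();
    }
}
```

Calling PercentileSketch.ToPercentiles(new List<double> {1, 2, 2, 3}) should give three entries with percentiles 0, 2/3 and 1, and the original list 1, 2, 3 still gives 0, 0.5 and 1. The sort makes this O(n log n), compared with the O(n²) of calling list.Count(...) once per element.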

As always, thanks Shannon